Data-Centric AI Development with Synthetic Data

Andrew Ng, Stanford professor and co-founder of Google Brain, has given his own definition of Data-Centric AI. On deeplearning.ai he wrote: “Data-Centric AI is the practice of systematically engineering the data used to build AI systems.” The goal is to iteratively improve the performance of those systems.

Similar to how agile development processes and clean code best practices shaped our modern software development methodology, this data-centric methodology is shaping how we develop AI models going forward.

The basic flow of AI model development starts with an initial network that does not yet perform at the level we want. From there, we gather data, train the network, evaluate it, and eventually deploy it to production.

A model-centric approach keeps the data fixed and iterates on the model: its hyperparameters, its architecture, even the augmentations. Because data was often scarce, this was the default path in many cases.

The data-centric approach is the opposite. Each iteration improves the existing data or integrates new data, and then trains and evaluates the network again. The main idea is to improve the model iteratively by improving the data itself.

Creating and improving this data iteration loop is the key to success.
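To make the data iteration loop concrete, here is a minimal sketch in Python. It is a toy under stated assumptions, not a production workflow: the "model" is a fixed nearest-centroid classifier on one-dimensional features, and `true_label` is a hypothetical oracle standing in for a human annotator or a generator that provides correct labels. Every name in it is invented for illustration. The model code never changes between rounds; only the training data does.

```python
from statistics import mean


def true_label(x):
    """Hypothetical oracle (assumption): stands in for a trusted label source."""
    return 0 if x < 3.0 else 1


def train(data):
    """Fixed 'model': one centroid per label over 1-D features."""
    labels = {y for _, y in data}
    return {label: mean(x for x, y in data if y == label) for label in labels}


def predict(model, x):
    """Return the label whose centroid is closest to x."""
    return min(model, key=lambda label: abs(model[label] - x))


def evaluate(model, data):
    """Accuracy of the model on (feature, label) pairs."""
    return mean(1.0 if predict(model, x) == y else 0.0 for x, y in data)


def improve_data(data, model):
    """Data step: re-check the sample farthest from its own class centroid."""
    suspect = max(range(len(data)),
                  key=lambda i: abs(data[i][0] - model[data[i][1]]))
    x, _ = data[suspect]
    fixed = list(data)
    fixed[suspect] = (x, true_label(x))  # corrected (or confirmed) label
    return fixed


def data_centric_loop(train_set, val_set, rounds):
    """Train, evaluate, improve the data, repeat. The model code stays fixed."""
    history = []
    for _ in range(rounds):
        model = train(train_set)
        history.append(evaluate(model, val_set))
        train_set = improve_data(train_set, model)
    history.append(evaluate(train(train_set), val_set))
    return train_set, history


# Toy data: two training samples (5.0 and 6.0) carry the wrong label 0.
TRAIN = [(0.0, 0), (1.0, 0), (2.0, 0), (5.0, 0), (6.0, 0), (4.0, 1), (5.5, 1)]
VAL = [(0.5, 0), (1.5, 0), (2.5, 0), (3.5, 1), (4.5, 1), (5.5, 1)]

cleaned, history = data_centric_loop(TRAIN, VAL, rounds=2)
print(history)
```

In this toy run, validation accuracy rises only because two mislabeled samples are corrected. In a real project, the data step could just as well add newly generated samples for the cases where the model is weakest.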

Data-centric AI Development with Synthetic Data

AI performance is largely determined by two main factors: “the Model” (which, in my opinion, includes the architecture, training procedure, and hyperparameters) and “the Data” used for training.

“The Model” part has largely converged. Academia and big tech companies are still making progress, but for practical applications, strong models are mature and abundantly available.

The Data is where we need to focus in order to make a large impact.

How can a data-centric AI approach get the data strategy and development infrastructure it needs to build AI applications?

The answer is synthetic data. Synthetic data can have a major impact on both the quality of algorithms and the speed at which teams move their projects from the initial phases to production. It provides access to controllable generators that create high-variance labeled data, and it allows the computer vision engineer to iteratively engineer the data to optimize model performance.

Simulated Synthetic Data

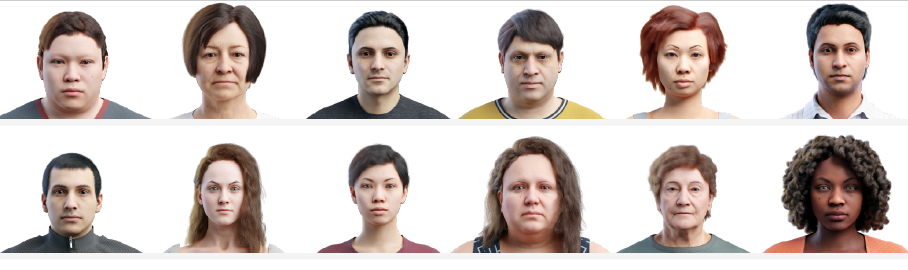

The type of synthetic data that Datagen sees as a game-changer is Simulated Synthetic Data. This approach represents the target visual domain within a high-variance, controllable 3D simulation.

Simulated synthetic data starts with real-world 3D base content. For example, we capture many types of high-quality data from the real world, including very high-quality human scans, Hollywood-level motion capture, and 3D scans of items and architectural layouts.

The concept is to build high-variance simulations from controllable generators that produce large numbers of unique, coherent, dynamic 3D scenes based on user input.
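As a rough illustration of what "controllable" and "high-variance" can mean in practice, the sketch below samples scene descriptions from user-specified ranges. It is not Datagen's API: `SceneSpec`, `generate_scenes`, and every parameter name are hypothetical, and a real generator would assemble 3D assets rather than dictionaries.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneSpec:
    """User-controlled ranges. All fields are hypothetical examples."""
    num_people: tuple = (1, 3)             # inclusive integer range
    camera_height_m: tuple = (1.0, 2.0)    # metres
    light_intensity: tuple = (0.2, 1.0)    # arbitrary relative units
    environments: tuple = ("kitchen", "office", "living_room")


def generate_scenes(spec, count, seed=0):
    """Sample `count` scene descriptions from the ranges in `spec`.

    A fixed seed makes the dataset reproducible, so a later iteration
    can change one range and compare against the same baseline.
    """
    rng = random.Random(seed)
    scenes = []
    for scene_id in range(count):
        scenes.append({
            "id": scene_id,
            "environment": rng.choice(spec.environments),
            "num_people": rng.randint(*spec.num_people),
            "camera_height_m": round(rng.uniform(*spec.camera_height_m), 2),
            "light_intensity": round(rng.uniform(*spec.light_intensity), 2),
        })
    return scenes


# Broad variance by default, or a narrowed spec that targets a weak spot.
baseline = generate_scenes(SceneSpec(), count=5)
low_light_office = generate_scenes(
    SceneSpec(environments=("office",), light_intensity=(0.2, 0.4)), count=5
)
```

Narrowing a range, as in `low_light_office`, is one way an engineer could steer the next batch of data toward conditions where the model currently underperforms.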

Lastly, high-fidelity sensor simulation is key. In the simulated environments, users can place virtual cameras and sensors that mimic real ones. With high-quality camera and noise simulation and physics-based rendering, the captured visual data should look as if it were captured in the real world.
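A heavily simplified sketch of two ingredients of sensor simulation follows: projecting a 3D point through an ideal pinhole camera, and adding Gaussian read noise to 8-bit pixel values. Both functions and their parameters are illustrative assumptions. Real pipelines also model lens distortion, shot noise, exposure, and physically based light transport, none of which appears here.

```python
import random


def project_point(point_xyz, focal_px, cx, cy):
    """Project a camera-space 3D point to pixel coordinates (ideal pinhole).

    focal_px is the focal length in pixels; (cx, cy) is the principal point.
    """
    x, y, z = point_xyz
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def add_sensor_noise(image, read_noise_std, seed=0):
    """Add zero-mean Gaussian noise to a grayscale image (rows of 0..255 ints).

    The result is rounded and clipped back to the 8-bit range, roughly as a
    sensor's analog-to-digital converter would do.
    """
    rng = random.Random(seed)
    noisy = []
    for row in image:
        noisy.append([
            min(255, max(0, round(value + rng.gauss(0.0, read_noise_std))))
            for value in row
        ])
    return noisy


# A point 2 m in front of a 640x480 camera with a 500 px focal length.
pixel = project_point((1.0, 0.5, 2.0), focal_px=500, cx=320, cy=240)

clean = [[10, 128, 250], [0, 64, 255]]
noisy = add_sensor_noise(clean, read_noise_std=3.0, seed=1)
```

Matching parameters such as the focal length and the noise level to the target camera is one way to narrow the gap between rendered images and real captures.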

By capturing visual data from within the simulated worlds we can teach our AI models to understand the real world.