The State of Facial Recognition Today

This is the first of a two-part series on the state of facial recognition and its challenges.

Officials across the world, from Moscow to San Francisco, use facial recognition for security and surveillance purposes. Roughly a third of the Chinese population uses facial-recognition payment. Phone owners across the globe are getting used to unlocking their phones with their faces.

There’s no denying that facial recognition is becoming ubiquitous. The facial recognition market is projected to reach USD 13.8 billion by 2028, according to a report. This growth is driven by rising demand for mobile phones, wearable services, IoT devices, and cloud-based technology.

How Facial Recognition Works

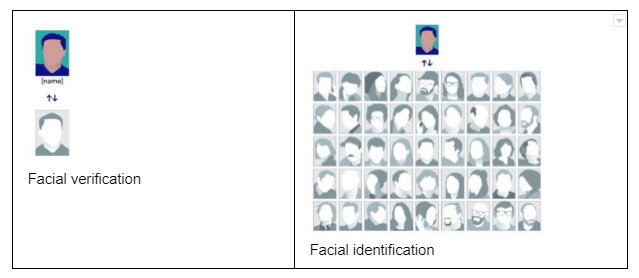

We can divide facial recognition into two types of tasks: verification and identification.

Facial verification is the process of determining whether someone is who they claim to be. This process is called one-to-one matching (1:1). For example, when a user attempts to unlock their phone with their face, the algorithm matches the user's face against a stored image of the owner. If the similarity score is high enough, the user’s identity is verified and the phone is unlocked. Other applications include boarding an airplane and authorizing payments.

On the other hand, facial identification compares an unknown face against a database of images. This type of matching is called one-to-many (1:N). For example, facial identification software can check a face captured by surveillance cameras against a database containing faces of wanted criminals.

Comparing facial verification (left) to facial identification (right) (Source)
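To make the 1:1 versus 1:N distinction concrete, here is a minimal sketch. It assumes faces have already been turned into embedding vectors by some model and that cosine similarity is the score; the function names, the toy 3-dimensional vectors, and the 0.6 threshold are all hypothetical choices for illustration, not values from any particular product.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(probe, enrolled, threshold=0.6):
    """1:1 matching: accept the claimed identity if the score clears the threshold."""
    return cosine_similarity(probe, enrolled) >= threshold

def identify(probe, gallery, threshold=0.6):
    """1:N matching: return the best-scoring gallery name, or None if no score clears the threshold."""
    best_name, best_score = None, threshold
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

# Toy embeddings (assumed values; real systems use hundreds of dimensions).
gallery = {"alice": [0.9, 0.1, 0.0], "bob": [0.0, 1.0, 0.1]}
probe = [0.8, 0.2, 0.0]

print(verify(probe, gallery["alice"]))  # one comparison against one stored template
print(identify(probe, gallery))         # one comparison per gallery entry
```

Verification performs a single comparison, while identification has to search the whole gallery and also decide whether the person is enrolled at all, which is why the two tasks are benchmarked separately.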

NIST’s Face Recognition Vendor Test (FRVT) evaluates the performance of state-of-the-art facial recognition algorithms. In this article, we highlight the best performers and the most exciting developments in the realm of facial recognition.

NIST as the Independent Evaluator of Facial Recognition Algorithms

How well do these algorithms perform? That is a question that the National Institute of Standards and Technology (NIST) aims to answer. NIST is the world’s leading evaluator of facial recognition algorithms for both verification and identification tasks.

Vendors of facial recognition technologies worldwide submit their algorithms to NIST’s Face Recognition Vendor Test (FRVT). NIST then evaluates all the algorithms against the same benchmark, which contains test images that the vendors have never seen. The FRVT is the de facto benchmark for facial recognition technology and is held in high regard.

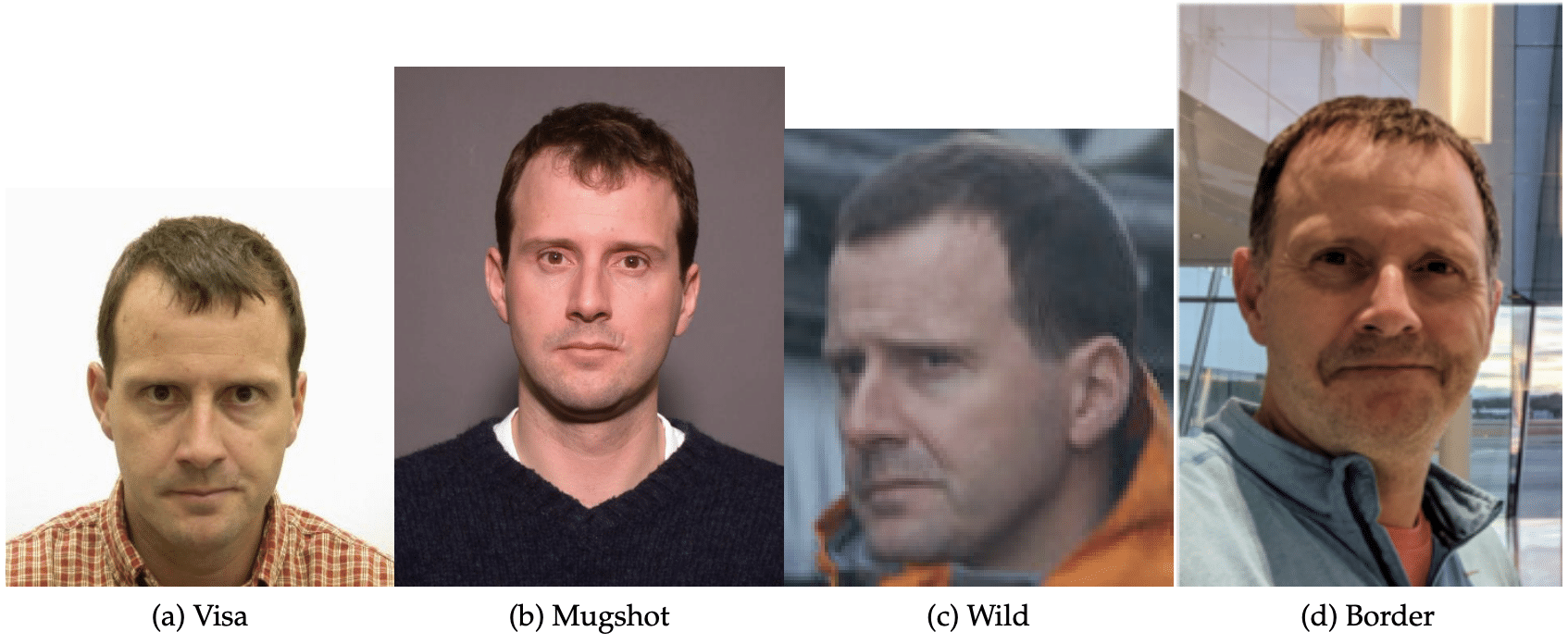

The NIST FRVT 1:1 is a test of facial verification. It tests each algorithm against different types of photos: application photos, kiosk photos, mugshots, visa photos, border-crossing photos, and in-the-wild photos. The size of each category is on the order of 100,000 to 1,000,000. The performance of each algorithm is then characterized by its false positive and false negative rates. The false positive rate, also known as the false match rate (FMR), is the rate at which a biometric process incorrectly matches faces from two distinct individuals as coming from the same individual. The false negative rate, also known as the false non-match rate (FNMR), is the rate at which the process fails to match two biometric samples of the same individual.

Examples of the different types of images (Source)
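The two error rates used in the 1:1 test can be computed directly from comparison scores. The sketch below assumes higher scores mean "more similar" and that a pair is declared a match when its score reaches the threshold; the score lists are made-up toy data, and `error_rates` is a hypothetical helper name.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (FMR, FNMR) at a given decision threshold.

    genuine_scores:  similarity scores for pairs showing the same person.
    impostor_scores: similarity scores for pairs showing different people.
    """
    false_matches = sum(1 for s in impostor_scores if s >= threshold)
    false_non_matches = sum(1 for s in genuine_scores if s < threshold)
    fmr = false_matches / len(impostor_scores)
    fnmr = false_non_matches / len(genuine_scores)
    return fmr, fnmr

# Toy scores (assumed values for illustration only).
genuine = [0.91, 0.85, 0.78, 0.40]
impostor = [0.10, 0.22, 0.35, 0.65, 0.05]

for threshold in (0.3, 0.5, 0.7):
    fmr, fnmr = error_rates(genuine, impostor, threshold)
    print(f"threshold={threshold:.1f}  FMR={fmr:.2f}  FNMR={fnmr:.2f}")
```

Raising the threshold lowers the FMR but can raise the FNMR, which is why results are usually quoted as an FNMR at a fixed FMR.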

On the other hand, the FRVT 1:N benchmark is a test of facial identification. It uses six datasets (frontal mugshots, profile view mugshots, desktop webcam images, visa-like immigration application photos, immigration lane photos, and registered traveler kiosk photos). Again, the developers do not have access to this data.

Impressive Performance of Algorithms at NIST FRVT

NIST’s FRVT shows that face recognition algorithms have improved tremendously over the past decade.

The best algorithm beat the previous accuracy record in the 1:N mugshot category, which contains 1.6 million identities and 12 million images. In particular, when we allow 3 false positives for every 1,000 identification attempts, the 2020 frontrunner Sensetime had an impressive false negative identification rate (FNIR) of 0.0024, beating the previous record of 0.0031 set by NEC in 2018. In 2022, Sensetime further reduced its FNIR to 0.0017. To put it into context, this means that out of 10,000 searches attempted, Sensetime missed only 17 times.
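The arithmetic behind these figures is simple enough to check in a few lines. This sketch only uses the FNIR values quoted above; `expected_misses` is a hypothetical helper, and the counts are expectations over searches for people who are actually in the gallery, not guarantees for any particular run.

```python
# FNIR values quoted above for the 1:N mugshot category.
fnir = {
    "NEC (2018)": 0.0031,
    "Sensetime (2020)": 0.0024,
    "Sensetime (2022)": 0.0017,
}
searches = 10_000

def expected_misses(rate, attempts):
    """Expected number of missed identifications for a given FNIR."""
    return round(rate * attempts)

for name, rate in fnir.items():
    print(f"{name}: about {expected_misses(rate, searches)} misses per {searches:,} searches")

# Relative reduction in misses from the 2018 record to the 2022 result.
reduction = 1 - fnir["Sensetime (2022)"] / fnir["NEC (2018)"]
print(f"Relative reduction: {reduction:.0%}")
```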

Sensetime is not an isolated example. 28 other developers have also beaten the previous record set by NEC. This represents a broad gain in accuracy across the industry.

It doesn’t end there, though. Algorithms are getting better at addressing issues that have long plagued the facial recognition community, such as differences in expression, pose, and image quality. Notably, progress has been made in identifying not only constrained photos (mugshots and visa photos, which have little pose variation), but also unconstrained photos (webcam photos taken from various angles).

Example of images taken from different poses (Source)

For example, developers like Camvi, Megvii, TongYi and Neurotechnology have improved significantly on webcam images. Yet a gap remains between the performance of algorithms on constrained photos and on unconstrained photos. We stated earlier that Sensetime’s FNIR on the constrained mugshot category was 0.0017. The same algorithm, when tested on unconstrained webcam images, had an FNIR of 0.0080, more than four times its FNIR on mugshots. This highlights how unconstrained photos pose a challenge to even the best-performing algorithms.

Further, NIST reported that a few algorithms demonstrated the ability to match side-view photos to galleries of frontal photos, with search accuracy matching the best algorithms in 2010. The ability to recognize a face across a 90-degree change in viewpoint is a long-sought milestone in facial recognition research. Such a development is certainly a cause for celebration.

In the FRVT 1:1 track (verification), CloudWalk arguably performed the best across multiple categories. Notably, it has a false non-match rate of only 0.0006 when verifying visa photos, with the false match rate fixed at 0.000001. To put it into context, that is 6 false negatives per 10,000 genuine comparisons and 1 false positive per million impostor comparisons.

CNNs as the Future of Facial Recognition

NIST attributed the gains in accuracy to cutting-edge deep convolutional neural networks. They generally outperformed conventional appearance-based methods (like principal component analysis) and feature-based methods.

The advancements in facial recognition networks released over the past decade corroborate NIST’s claim. At CVPR 2014, Facebook released DeepFace, one of the first deep learning networks for facial recognition. The advent of ArcFace in 2018 allowed for the extraction of more discriminative features for face recognition. Since then, variations of ArcFace like AdaFace have dominated facial recognition leaderboards.
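The idea behind ArcFace's "more discriminative features" is an additive angular margin: during training, the angle between an embedding and its true class center is made artificially larger, so the network has to pull same-identity embeddings closer together to compensate. The sketch below is a simplified single-sample version in plain Python. The function name is hypothetical, the scale and margin defaults are commonly cited values rather than anything taken from this article, and real implementations add handling for angles that would exceed 180 degrees.

```python
import math

def arcface_loss(cosines, target, scale=64.0, margin=0.5):
    """Cross-entropy over ArcFace-style logits for a single sample.

    cosines: cosine similarity between the normalized embedding and each
             normalized class-center vector.
    target:  index of the true identity.
    """
    logits = []
    for j, c in enumerate(cosines):
        if j == target:
            theta = math.acos(max(-1.0, min(1.0, c)))
            c = math.cos(theta + margin)  # add the angular margin to the true class only
        logits.append(scale * c)
    # Numerically stable log-sum-exp, then negative log-likelihood of the target.
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[target]

# Toy cosines (assumed values): the sample is closest to class 0, its true class.
cosines = [0.7, 0.5, 0.1]
print(arcface_loss(cosines, target=0, margin=0.0))  # plain normalized softmax
print(arcface_loss(cosines, target=0, margin=0.5))  # same sample with the margin applied
```

With the margin applied, the same sample produces a much larger loss, so a classification that plain softmax would already consider good enough still generates a training signal.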

At the same time, researchers are moving from small-scale benchmarks like Labeled Faces in the Wild to large-scale ones like MegaFace. As benchmarks become harder to beat, researchers resort to training their models with more data. Thankfully, with the release of ever-larger face datasets like DigiFace-1M, the research community has a plethora of data at its disposal.

DigiFace-1M is a synthetic dataset of 1 million faces (Source)

Overall, improvements in model architecture and growth in training dataset size have both contributed to the robustness of facial recognition CNNs.

Other Interesting Developments in Facial Recognition

What we have discussed sidesteps the privacy concerns that plague facial recognition. The NIST reports also highlight the gap between the performance of the algorithms on high-resolution and low-resolution images. Rest assured, research is underway to address these challenges.

- Privacy-preserving facial recognition

Facial recognition technology has faced backlash for violating the privacy of individuals. Privacy-preserving facial recognition might be an answer to that problem. For example, Tencent’s DuetFace does not make inferences on facial images. Instead, it uses the features extracted from the frequency domain of the facial images.
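To give a feel for what "frequency domain" means here, the sketch below applies a 2-D discrete cosine transform (DCT) to a tiny image patch. This is only an illustration of moving pixels into frequency coefficients; using a DCT on small patches is an assumption for the example, and it is not a description of DuetFace's actual pipeline.

```python
import math

def dct_1d(values):
    """Orthonormal DCT-II of a 1-D sequence."""
    n = len(values)
    out = []
    for k in range(n):
        total = sum(v * math.cos(math.pi * (i + 0.5) * k / n) for i, v in enumerate(values))
        factor = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        out.append(factor * total)
    return out

def dct_2d(block):
    """2-D DCT-II: transform every row, then every column."""
    rows = [dct_1d(row) for row in block]
    cols = [dct_1d(list(col)) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]

# A flat 4x4 patch (assumed toy data): it has no detail, so all of its energy
# should land in the top-left "DC" coefficient.
flat = [[10.0] * 4 for _ in range(4)]
coeffs = dct_2d(flat)
for row in coeffs:
    print([round(c, 3) for c in row])
```

A model that only ever sees coefficients like these, rather than the pixels themselves, works with a representation that is much harder for a human to read as a face.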

- Recognizing low resolution faces

Real-world images (like those from surveillance cameras) are often of poor quality.

Researchers have addressed this with an “attention similarity knowledge distillation” approach. Concretely, this approach transfers the attention obtained from a high-resolution (HR) teacher network to a low-resolution (LR) student network.
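One way to picture this kind of distillation is as an extra loss term that penalizes the student whenever its attention maps point somewhere different from the teacher's. The sketch below assumes attention maps are flattened lists of numbers and that cosine distance is the mismatch measure; the function names and the `weight` parameter are hypothetical, and the published method may differ in its details.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity between two flattened attention maps."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm

def distillation_loss(teacher_maps, student_maps):
    """Average attention mismatch across corresponding layers."""
    distances = [cosine_distance(t, s) for t, s in zip(teacher_maps, student_maps)]
    return sum(distances) / len(distances)

def total_loss(recognition_loss, teacher_maps, student_maps, weight=1.0):
    """Student objective: its own recognition loss plus the weighted attention term."""
    return recognition_loss + weight * distillation_loss(teacher_maps, student_maps)

# Toy attention maps for two layers (assumed values).
teacher = [[0.9, 0.1, 0.0, 0.0], [0.2, 0.7, 0.1, 0.0]]
student = [[0.3, 0.3, 0.2, 0.2], [0.1, 0.2, 0.3, 0.4]]

print(distillation_loss(teacher, teacher))  # a student that mimics the teacher perfectly
print(distillation_loss(teacher, student))  # a student looking in the wrong places
```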

Other researchers used a different approach to tackle the curse of low resolution. They designed a new loss function called the “octuplet loss”. This novel loss function leverages the relationship between high-resolution images and their synthetically down-sampled variants jointly with their identity labels.
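As a rough illustration of how HR images, their down-sampled variants, and identity labels can be used jointly, the sketch below sums a standard triplet loss over every pairing of resolutions between the anchor and the positive/negative images. This is an illustrative combination under stated assumptions, not the paper's exact formulation: the distance metric, margin, and function names are all hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def triplet_loss(anchor, positive, negative, margin=0.5):
    """Pull the anchor toward the same identity and away from a different one."""
    return max(0.0, squared_distance(anchor, positive) - squared_distance(anchor, negative) + margin)

def octuplet_style_loss(hr, lr, margin=0.5):
    """Sum triplet losses over the four HR/LR pairings.

    hr, lr: dicts with 'anchor', 'positive', 'negative' embeddings computed
            from the high-resolution images and their down-sampled copies.
    """
    total = 0.0
    for anchor_set in (hr, lr):
        for other_set in (hr, lr):
            total += triplet_loss(anchor_set["anchor"], other_set["positive"],
                                  other_set["negative"], margin)
    return total

# Toy embeddings (assumed values). In lr_good the down-sampled images embed as
# well as the originals; in lr_bad they collapse to the same point.
hr = {"anchor": [1.0, 0.0], "positive": [0.9, 0.1], "negative": [0.0, 1.0]}
lr_good = {"anchor": [1.0, 0.0], "positive": [0.9, 0.1], "negative": [0.0, 1.0]}
lr_bad = {"anchor": [0.5, 0.5], "positive": [0.5, 0.5], "negative": [0.5, 0.5]}

print(octuplet_style_loss(hr, lr_good))
print(octuplet_style_loss(hr, lr_bad))
```

The cross-resolution terms are what push the network to place a blurry image of a person near a sharp image of the same person.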

- Ear recognition

Interestingly, University of Georgia researchers developed an effective ear recognition system, which serves the same purpose as facial recognition but is arguably less intrusive.

In part two, we will evaluate the weaknesses of today’s facial recognition algorithms that we found from the NIST FRVT.