Facial Landmarks: Use Cases, Datasets, and a Quick Tutorial

What Are Facial Landmarks?

Facial landmark detection is a computer vision task in which a model needs to predict key points representing regions or landmarks on a human’s face – eyes, nose, lips, and others. Facial landmark detection is a base task which can be used to perform other computer vision tasks, including head pose estimation, identifying gaze direction, detecting facial gestures, and swapping faces.

This is part of an extensive series of guides about machine learning.

In This Article

Common Use Cases for Facial Landmark Detection

Driver Monitoring

Driver fatigue causes many car accidents, and the emergency stopping capabilities of smart cars are not always effective. Monitoring drivers for signs of fatigue is useful in preventing accidents. For example, a computer vision model could process video feeds from a camera in the vehicle that detects signs of tiredness or distraction in the driver’s face. The model could trigger an alert if the driver doesn’t pay sufficient attention to the road.

Built-in movement tracking systems and wearable ECG tracking devices can perform a similar function, but a computer vision approach is simpler and less intrusive. Neural networks can learn to identify sleepiness in drivers’ faces using facial landmark inputs.

Alternatively, a MobileNetV2 architecture can detect driver fatigue in video streams without landmark detection, although the training time is longer. CNN-based landmark detection can help simplify dataset labels for training purposes. However, the inference speed of the neural network and the mobile device quality may affect driver monitoring systems. Thus, landmark detection algorithms offer a better solution.

Animation and Reenactments

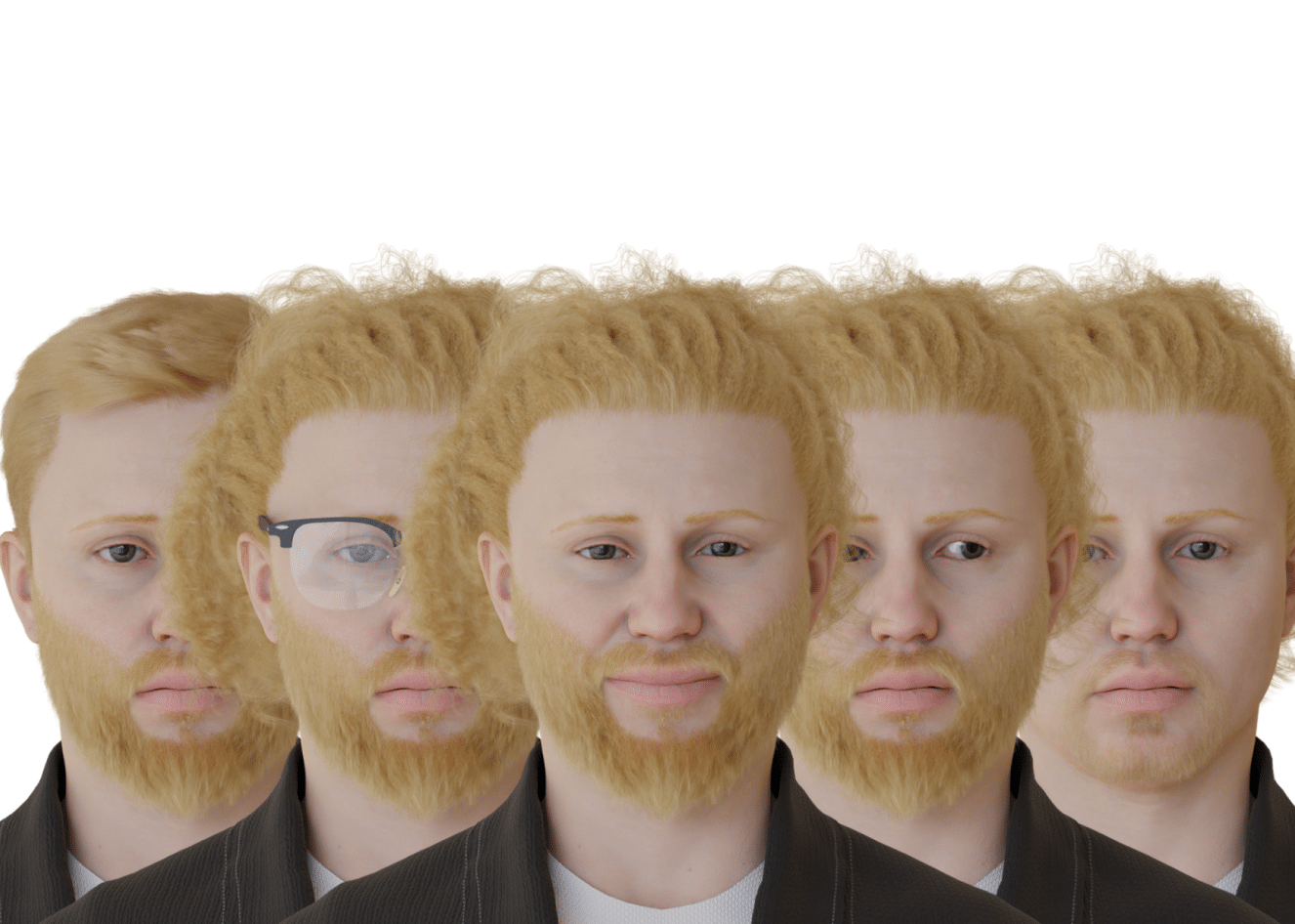

Face animation algorithms use facial landmark detection to generate animated characters based on a limited set of images with annotated facial landmarks. These algorithms can produce follow-up frames for film and gaming applications.

Another application is editing facial movements in dubbed films to match the new audio track. A facial landmark detection algorithm can replace the mouth region with an adapted 3D model.

For example, the Pix2PixHD neural network is useful for lip-syncing tasks, while the DeFA algorithm can construct an entire 3D face mesh. The Dlib library can be used to synthesize face representations using boundaries and facial landmarks. FReeNet can produce reenactments between different people using a Unified Landmark Converter module during training. The PFLD algorithm can extract facial landmarks from source and target images, and a GAN can use these to generate new images with more facial details.

Read our Benchmark on Facial Landmark Detection Using Synthetic Data

Facial Recognition

This use case involves algorithms performing face verification, recognition, and clustering tasks (grouping similar faces). The best algorithms use face preprocessing coupled with face alignment to improve facial recognition. These algorithms often use multi-task cascaded convolutional networks (MTCNN) to detect faces and localize landmarks.

Emotional expressions are detectable through lip, eye, and eyebrow movements. Facial landmark recognition can help identify emotions.

Facial Landmark Keypoint Density

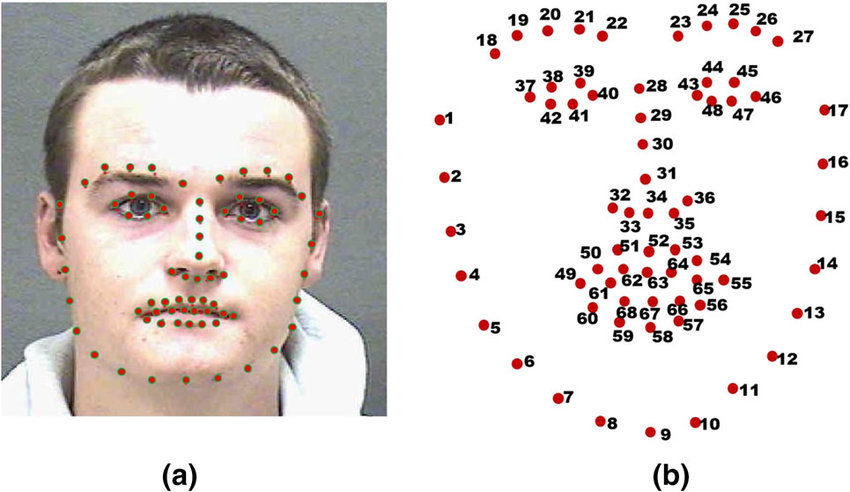

Standard facial datasets provide annotations of 68 x and y coordinates that indicate the most important points on a person’s face. Dlib is a commonly used open source library that can recognize these landmarks in an image.

Source: ResearchGate

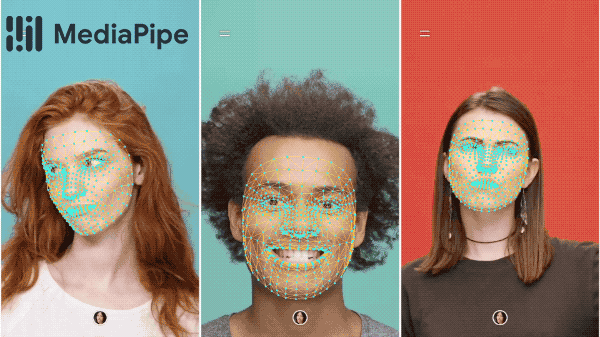

However, this system of 68 facial landmarks is limited for many purposes. Newer datasets and algorithms leverage a dense “face mesh” with over 468 3D face landmarks. This approach uses machine learning to infer the 3D facial surface from a single camera input, without a dedicated depth sensor. Google’s MediaPipe solution uses dense facial landmarks.

Source: Google

Facial Landmark Datasets

Facial landmark algorithms require datasets of pre-annotated facial landmarks they can learn from. Here are a few commonly used facial landmark datasets.

300W

300-W is a face data set consisting of 300 indoor images and 300 outdoor wild images. The images cover a variety of identities, facial expressions, lighting conditions, poses, occlusions, and face sizes.

Compared to other datasets, the 300W database has a relatively high proportion of partially occluded images and covers additional facial expressions. Images were annotated with 68 point markers using a semi-automatic method. Images from the database are carefully selected to represent challenging samples of natural face instances under unrestricted conditions.

300-VW

300 Videos in the Wild (300-VW) is a dataset for evaluating landmark tracking algorithms. It contains 114 videos containing faces, each approximately 1 minute in length at 25-30 fps. All frames are annotated with the same 68 markers used for the 300W dataset.

AFLW

Annotated Facial Landmarks in the Wild (AFLW) is a large collection of annotated facial images sourced from Flickr. The images have a wide variety of pose, facial expressions, ethnicity, age, gender, as well as diverse imaging conditions. A total of approximately 25,000 faces are annotated, with up to 21 landmarks per image.

Detecting Facial Landmarks with dlib, OpenCV, and Python

This is abbreviated from the full tutorial by Adrian Rosebrock. We’ll show how to use the landmark detector that comes with the dlib library to identify the coordinates of 68 landmarks in human faces. Identifying a dense mesh of facial landmarks, like in the Google Pipelines example above, is beyond the scope of this section.

Prerequisites

- Install dlib and its dependencies

- Download the imutils library

Step 1: Detect Faces in Your Images

Create a new Python file with the following code:

# import required libraries

from imutils import face_utils

import numpy as np

import argparse

import imutils

import dlib

import cv2

ap = argparse.ArgumentParser()

# –-image is a path to the input image

ap.add_argument(“-p”, “–shape-predictor”, required=True,

help=”path to facial landmark predictor”)

ap.add_argument(“-i”, “–image”, required=True,

help=”path to input image”)

args = vars(ap.parse_args())

# initialize built-in face detector in dlib

detector = dlib.get_frontal_face_detector()

# initialize face landmark predictor

predictor = dlib.shape_predictor(args[“shape_predictor”])

# load input image, resize it, and convert it to grayscale

image = cv2.imread(args[“image”])

image = imutils.resize(image, width=500)

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# detect faces in the grayscale image

rects = detector(gray, 1)

In this code we pre-process the image into a 500×500 pixel grayscale image (downsampled to allow faster processing), and run it through the built-in face detector in dlib to identify the bounding box of the face.

Step 2: Detect Facial Landmarks

The following code loops over each face detection and performs facial landmark detection:

for (i, rect) in enumerate(rects):

# predict facial landmarks in image and convert to NumPy array

shape = predictor(gray, rect)

shape = face_utils.shape_to_np(shape)

# convert to OpenCV-style bounding box

cv2.rectangle(image, (x, y), (x + w, y + h), (0, 255, 0), 2)

# show the face number and draw facial landmarks on the image

cv2.putText(image, “Face #{}”.format(i + 1), (x – 10, y – 10),

cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)

for (x, y) in shape:

cv2.circle(image, (x, y), 1, (0, 0, 255), -1)

# show the resulting output image

cv2.imshow(“Output”, image)

cv2.waitKey(0)

The dlib face landmark detector returns a shape object containing 68 (x, y)-coordinates of facial landmark regions. We then use the face_utils.shape_to_np utility function to convert the output into a NumPy array, which makes it possible to easily print the discovered face landmarks on the image for visualization.

Generate synthetic data with our free trial. Start now!

See Our Additional Guides on Key Machine Learning Topics

Together with our content partners, we have authored in-depth guides on several other topics that can also be useful as you explore the world of machine learning.

Synthetic Data

Authored by Datagen

- Neural Radiance Field (NeRF): A Gentle Introduction

- Neural Rendering: A Gentle Introduction

- Simulated Data Is Synthetic Data

Computer Vision

Authored by Datagen

- Generative Adversarial Networks: the Basics and 4 Popular GAN Extensions

- ResNet: The Basics and 3 ResNet Extensions

- ResNet-50: The Basics and a Quick Tutorial

Image Datasets

Authored by Datagen