Neural Rendering: A Gentle Introduction

What Is Neural Rendering?

Neural rendering is a method, based on deep neural networks and physics engines, which can create novel images and video footage based on existing scenes. It gives the user control over scene properties such as lighting, camera parameters, poses, geometry, shapes, and semantic structures.

A successful neural rendering model produces a controllable, realistic model of a 3D scene. Neural rendering is a cutting edge technique for generating synthetic data based on existing image and video data.

In This Article

Fundamentals of Neural Rendering

Neural rendering is a classic concept that originated from computer graphics development. A neural rendering pipeline learns to represent scenes from real-world images. Its input is either an unordered set of images representing different views of the same scene, or a structured set of images of videos providing specific, known angles of the scene.

Neural rendering simulates the physics of a camera capturing the scene. An important feature of 3D neural rendering is to separate the 3D scene representation from the camera capture process. This makes it easier to reconstruct new images from the scene with a high level of 3D consistency.

Three-dimensional neural rendering typically involves an image generation model such as point jetting, rasterization, or volume integration to decouple physical processes like projection from 3D representations of a scene. The model uses physics, leveraging conditions such as lighting and the scene’s relationship with the camera. A rendering equation formulates the light transport.

Computer graphics often use different approximations of the rendering equation depending on the type of scene representation (for example, path tracing, rasterization, or volume consolidation). The 3D neural rendering system leverages the preferred rendering method. It is important to distinguish between the rendering method and the scene representation to enable training based on real images.

Rendering a Mesh

Meshes are sets of faces, edges, and vertices contextualizing the shapes of 3D objects. Faces, or polygons, are 2D surfaces surrounded by edges. Edges are straight lines running between vertices (points). Creators usually use software solutions to build 3D meshes. Models intended for complex use cases like animation require special attention because incorrectly laid out polygons may result in strange shapes and deformations.

Some 3D modeling products imitate organic processes like clay modeling, allowing artists to sculpt objects intuitively. However, these approaches may produce less accurate results if they don’t incorporate edge loops to lock vertices together and create closed polygons.

In some cases, it is necessary to manually lay out the polygons, which may be a time-consuming process. For such cases, creators can use software that offers processes for defining polygons independently of formulating the overall shape of an object. More advanced modeling processes include reality capture, which generates an accurate 3D model from an array of coordinates known as a point cloud.

Some 3D modeling frameworks use differentiable rendering with backward-forward propagations. They can render colorized meshes in the forward pass and handle complexity—the model considers various 3D properties, such as camera orientation, lighting, and geometry. The software can convert color or lighting gradients in flat images into vertices to create a 3D mesh.

A spherical harmonic is a special mathematical function on a spherical surface. Spherical harmonics are useful for solving differential equations in physics and other scientific fields. For computer graphics applications, they enable a range of important 3D rendering calculations, such as indirect or ambient lighting and non-rectilinear shape modeling.

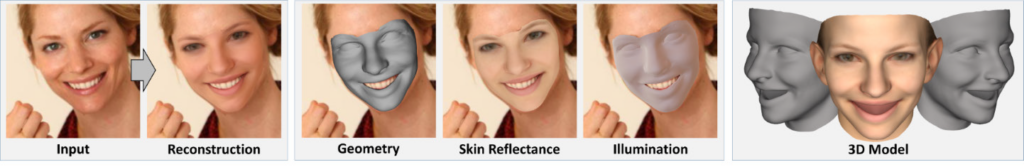

Example: Face Reconstruction (MoFA)

MoFA is a differentiable decoder with convolutional encoders to formulate images based on model analysis. The decoder can produce realistic images of faces to reconstruct semantic meaning. It renders graphics using an image generation model that enforces semantic meaning using parametric face priors. It also parameterizes attributes such as shape, pose, expression, lighting, and skin texture independently.

MoFA uses Eloss, a photometric loss, to compare synthesized images to input images. It regularizes faces based on statistical analysis, enabling fully unsupervised model training. Users can input 2D facial landmarks to enable faster loss and reconstruction—there is no need to supervise semantic parameters.

Once the model is trained, the network encoder can support regression of illumination and dense face models from a simple image without additional inputs like landmarks.

Rendering a Volume

Rendering volumes typically involves a combination of computer graphics and visualization techniques to produce 2D projections from 3D virtual models. The 3D models are data sets, such as a set of slice images from a 3D scanner (e.g., MRI, CT, etc.). For example, a rendering algorithm can arrange a collection of 2D slice images of an object to generate a 3D volume image rendering.

The user typically captures the slice images in a sequence with a regular number of pixels. Usually, the slices occur at regular intervals—for example, every millimeter. This approach generates a regular volumetric grid. The user samples the area surrounding every voxel (volume pixel) to extract the necessary data to create representative values for each volume region.

After capturing the 3D data set, users can render a 2D projection of the volume. However, they must first define the rendered volume relative to the camera’s position in space. Users must then define each voxel’s color and transparency/opacity —usually, this involves an RGBA transfer function.

Implicit representation: NeRF

The neural radiance field (NeRF) optimizes simple scene functions of continuous volumes using sparse input views to produce high-quality renderings of complex scenes. A research team at UC Berkeley queried 5D coordinates to composite the NeRF view. They used standard rendering methods to project the output density and color of the model onto images based on the coordinates and camera rays.

This approach demonstrates the usefulness of neural radiation fields for rendering realistic, novel views of complex scenes. Compared to conventional neural rendering and compositing techniques, it offers enhanced performance and can accurately recreate detailed geometries. NeRF has generated renewed interest in neural volume rendering technology because it lowers the entry barrier for non-experts, allowing them to leverage image manipulation methods easily.

Learn more in our guide to neural radiance fields (NeRF)

Voxel representation: Plenoxels

Plenoptic voxels use a sparse 3D grid instead of the multilayer perceptron (MLP) at the center of the neural radiance field (NeRF). This approach interpolates all query points from their surrounding voxels. It is possible to render 2D views without a neural network, greatly reducing the complexity and computational intensity. Plenoxels produce a visual quality comparable to NeRF but are significantly faster.

Read the paper: Yu et. al, 2022

Hybrid representation: KiloNeRF

KiloNeRF builds on the capabilities of NeRF by improving the rendering speed. NeRF has slow rendering due to the laborious process of repeatedly querying the deep MLP network (millions of times). KiloNeRF distributes the workload across thousands of smaller MLPs rather than a single large MLP. The small MLPs do not require so many queries—each MLP represents a small segment of the overall scene. This approach improves performance threefold, requires less storage, and produces renderings of high visual quality.

Read the paper: Reiser et. al, 2021

Equivariant Neural Rendering

This rendering framework enables the model to learn representations of neural scenes directly from the images without supervision. It allows users to impose 3D structures by letting the learned representation transform organically, imitating 3D scenes in the real world.

It produces a loss if the scene representation is forced to be an isovariate map. Equivariant formulas ensure the model infers and renders the scene in real time, producing comparable results to more time-consuming inference models. This approach also uses challenging datasets for neural rendering scene representation, including complex scenes with challenging background and lighting conditions. The model can produce impressive results when combining these data sets with the standard ShapeNet benchmark.

Applications of Neural Rendering

Semantic Photo Manipulation and Synthesis

Semantic photo manipulation and synthesis allow interactive editing tools to control and modify images based on semantic meaning. The texture synthesis model takes a reference image and semantic layout to create a new patch-based texture. These patch-based techniques allow users to reshuffle, retarget, and in-paint images, but they don’t support high-level functions like adding new objects or synthesizing entire images from scratch.

A data-driven graphics system can generate new images by compositing several regions from images taken from a large set of photos. Users can specify their desired scene layouts with inputs like sketches or semantic label maps. However, these methods tend to be slow because they search through large image databases. Inconsistencies within an image set can result in undesired objects in the rendered image.

Novel View Synthesis

Novel view synthesis involves generating new camera perspectives of scenes based on a limited set of perspectives in the input dataset. It synthesizes images and videos from novel positions. Major challenges for this approach include inferring the 3D characteristics of the scene based on limited input and filling in the hidden areas in the scene.

Classical image-based rendering methods rely on multiple views to reconstruct geometries and convert observations into novel views. However, the number of available observations can limit performance, often failing to render convincingly.

Neural rendering methods can produce higher-quality results by introducing machine learning and scene reconstruction capabilities. They help address view-dependent effects and can generate images from sparse inputs. However, limitations of neural rendering include use-case specificity and training data limitations.

Volumetric Performance Capture

Volumetric performance capture, or free-viewpoint video-based rendering, uses multiple cameras to learn 3D geometries and textures. The main drawback of this approach is its lack of photorealism, given the limited high-frequency details and baked-in texture. Imperfections in geometry estimates often result in blurred texture mapping. It is also challenging to make the 3D model temporally consistent.

One proposed solution combines traditional relighting techniques with high-speed depth-sensing capabilities. It uses multiple RGB cameras and active infrared sensors to map the scene’s geometry accurately. It uses different illumination conditions during capture using spherical gradients, producing photorealistic results.

However, this technique struggles to capture transparent or translucent objects or delicate structures like hair. The multi-view approach does provide a solid foundation for machine learning-based rendering methods that require extensive training data.

Relighting

Relighting refers to the photorealistic rendering of scenes under new lighting conditions. It is essential for various computer graphics use cases like visual effects, augmented reality, and compositing. Image-based relighting techniques typically use input images with varying illumination to learn how a scene might look in new illumination conditions.

The drawback of image-based methods is their expense and slow data acquisition. Proposed solutions to enable faster relighting include training deep neural networks with reflectance field data to generate novel lighting conditions based on limited inputs.

Project: Relighting4D: Neural Relightable Human from Videos

Facial Reenactment

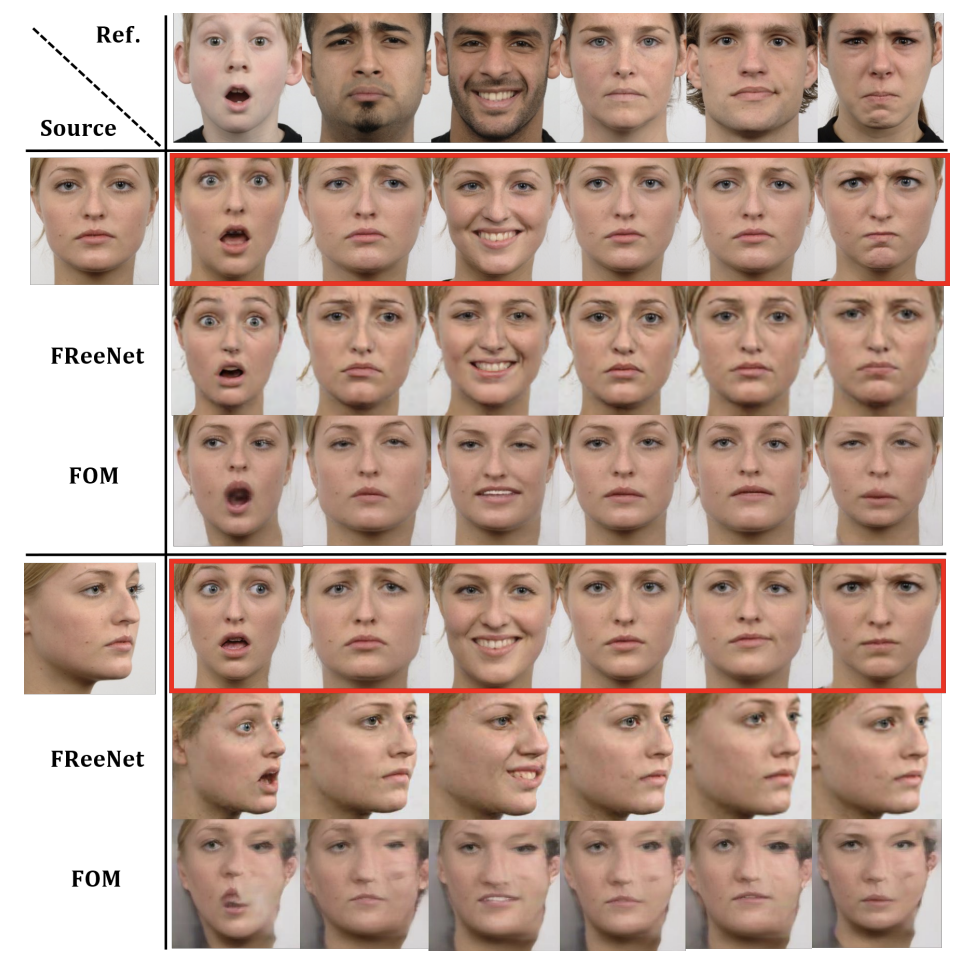

Facial reenactment modifies a scene’s properties, including the position and motion of objects (in this case, heads or facial features). Creators use facial reenactment to generate new facial expressions, speech, and head positions. Typical techniques reconstruct 3D face models from inputs, edit, and render the model to generate the synthesized images.

Neural rendering methods enhance facial reenactment by accurately tracking and reconstructing 3D heads and producing photorealistic results. Some approaches refine outputs with a conditional GAN. Neural rendering also offers more control of head poses and facial expressions than classic techniques.

Source: Dual Generator Face Reenactment

Body Reenactment

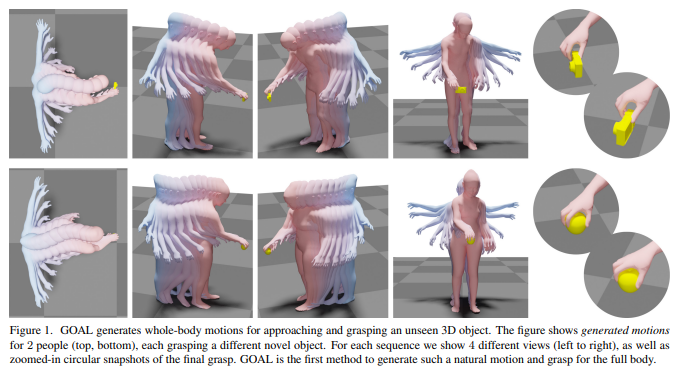

Neural rendering enables pose-guided video and image generation with greater control over the subject’s body pose within the synthesized output. Generating realistic images of a complete human body can be challenging given the large range of non-linear motions.

Body performance cloning involves transferring motion from source videos to target videos. These models are often specific to individuals and require extensive training data to work with a new person. Before receiving the training pairs, a model may reconstruct the target’s motion based on dense or sparse performance inputs. It is a supervised learning approach.

Current performance cloning approaches use image-to-image conversion networks for conditional generative mapping. They might use rendered mesh, skeleton, or joint heatmap inputs.

The textured neural avatar approach doesn’t require explicit geometry during training or testing and can map different 2D images to a shared texture map. It samples static learning textures by predicting rendered skeleton coordinates, then uses them to reconstruct arbitrary views. It can infer new viewpoints based on the subject’s desired pose.

Feeding the system new skeleton inputs helps drive the learned pipeline. It offers more consistent, robust generalization capabilities than classic image-to-image methods. However, because the training is subject-specific, it has limited flexibility, and the model struggles with new scales. Human body performance cloning is a complex task that often results in undesired artifacts.

Source: GOAL: Generating 4D Whole-Body Motion for Hand-Object Grasping