Emotion Recognition: the Basics and 6 Datasets to Get Started

What Is Emotion Recognition Technology?

Emotion recognition is a branch of facial recognition technology that has grown increasingly sophisticated. Individuals and organizations use facial emotion recognition software to let computer programs inspect and process a person’s facial expressions. The software applies advanced image processing to infer emotions from a face, much as a human observer would.

Emotion recognition relies on artificial intelligence (AI) to identify and analyze facial expressions, sometimes with the help of auxiliary information. Some emotion recognition technologies also use voice recognition and analysis to identify the mood and tone of human speech. Together, visual and auditory data carry subtle cues that emotion recognition software can use to pick up less obvious details.

Emotion recognition technology serves several purposes, including analyzing interviews and assisting investigations. It helps authorities detect people’s emotions to uncover important insights.

This is part of a series of articles about face recognition.

Emotion Recognition Use Cases

Emotion detection technology has reached new milestones thanks to growing computing power, highly sophisticated deep learning models, and the availability of datasets drawn from social media platforms. Large amounts of data in video, audio, and image formats now form an enormous repository of material for training emotion detection models.

Here are some examples of emotion detection and recognition applications:

- Self-driving vehicles: futuristic car designs incorporate sensors, such as microphones and cameras, to monitor and analyze conditions inside and outside the vehicle in real time. Smart cars can use facial emotion recognition to warn drivers when they appear drowsy. Emotion detection technology can capture subtle facial expressions that other facial recognition technologies miss.

- Medical diagnostics: doctors can use speech analysis and micro-expression detection to identify symptoms of dementia and depression.

- Personalized education: educational software prototypes can respond to a child’s emotions to improve the learning experience. If the child is frustrated because the task is too difficult or too easy, the program can make the task easier or harder.

- Smart retail: retailers can use computer vision technology and AI-powered emotion detection in their stores to gauge a shopper’s reaction and mood and to collect demographic information.

- Virtual assistants: future versions of popular virtual assistants could use emotion detection to improve their service, for example by modifying language and tone according to the user’s mood. Apple has submitted a patent and plans to integrate this capability into Siri.

Emotion Recognition AI: Methods and Approaches

After years of scientific research, various automatic emotion recognition methods have emerged. Researchers propose and evaluate different approaches, utilizing technologies from multiple domains such as machine learning, signal processing, speech processing, and computer vision.

In the past, emotion recognition relied on algorithms such as Maximum Entropy, Support Vector Machine (SVM), and Naive Bayes. Today, state-of-the-art algorithms are based on deep learning. A few examples:

- Deep convolutional neural network (CNN) that extracts facial landmarks from data, reduces images to 48×48 pixels, and runs them through two convolution-pooling layers (Mollahosseini et al., 2016)

- CNN for detecting facial movements using the concept of Action Units (AU), with two convolutional layers followed by a max pooling layer, and two fully connected layers that predict the number of AUs found in the face image (Mohammadpour et al., 2017)

- Three CNNs with the same architecture, each detecting a separate part of the face (eyebrow, eye, and mouth). Their outputs are combined into an “iconic face”, which is fed into another CNN to detect emotions (Yolcu et al., 2019)
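To make the "convolution-pooling layer" idea in the first example more concrete, here is a minimal sketch in plain Python that passes a 48×48 input through two such blocks. It is not the architecture from any of the cited papers: the single channel, the 3×3 kernels, the random weights, the 2×2 pooling window, and all function names are assumptions chosen only to show how the feature map shrinks at each stage.

```python
import random

def conv2d_valid(image, kernel):
    """Slide a square kernel over a square 2D image without padding."""
    k = len(kernel)
    out_size = len(image) - k + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k)
                for j in range(k)
            )
            for c in range(out_size)
        ]
        for r in range(out_size)
    ]

def relu(feature_map):
    """Replace negative activations with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; a trailing row or column that
    does not fill a whole window is dropped."""
    out_size = len(feature_map) // size
    return [
        [
            max(
                feature_map[r * size + i][c * size + j]
                for i in range(size)
                for j in range(size)
            )
            for c in range(out_size)
        ]
        for r in range(out_size)
    ]

def conv_pool_block(feature_map, kernel):
    """One convolution-pooling block: convolution, ReLU, then max pooling."""
    return max_pool(relu(conv2d_valid(feature_map, kernel)))

random.seed(0)
# Stand-in for a 48x48 grayscale face crop (random pixels, not real data).
face = [[random.random() for _ in range(48)] for _ in range(48)]
# Hypothetical 3x3 kernels; a trained network would learn these weights.
kernel_1 = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
kernel_2 = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(3)]

stage_1 = conv_pool_block(face, kernel_1)     # 48 -> 46 (conv) -> 23 (pool)
stage_2 = conv_pool_block(stage_1, kernel_2)  # 23 -> 21 (conv) -> 10 (pool)
print(len(stage_1), len(stage_2))             # prints "23 10"
```

In a real model each block would hold many learned kernels, and the final feature maps would be flattened and passed to fully connected layers that output emotion scores. A deep learning framework would normally replace these hand-written loops.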

Learn more about these and other techniques in the paper by Wafa et al.

6 Emotion Recognition Datasets

Here are some examples of datasets used for emotion recognition and classification projects.

Belfast Database

This database includes recordings of responses to lab-based emotion induction tasks. It contains data about self-reported emotions, intensity, sex, and whether the task was passive or active. The Belfast database lets researchers compare the expressions of people from different cultures.

SEMAINE

SEMAINE is a video dataset containing audiovisual recordings of interactions between humans and a virtual agent that takes on one of four personalities (happy, gloomy, angry, or pragmatic). The video clips carry annotations that rate epistemic states such as thoughtfulness, interest, certainty, agreement, and concentration.

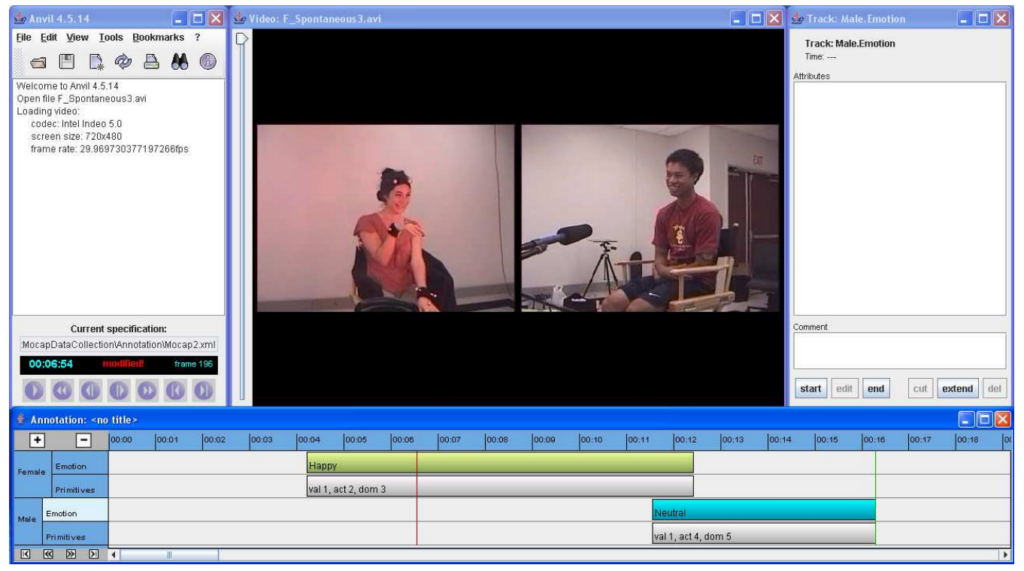

The Interactive Emotional Dyadic Motion Capture Dataset (IEMOCAP)

IEMOCAP contains 151 dialogue videos with two speakers each (302 in total). Annotations identify nine emotions: anger, fear, excitement, sadness, surprise, frustration, happiness, disappointment, and neutral. The dataset also includes dominance and valence ratings.

DEAP

This dataset contains EEG, ECG, and face videos with emotion annotations. It has two parts:

- Ratings from online self-assessments—volunteers rated 120 music video extracts by arousal, dominance, and valence.

- Face videos and physiological recordings—volunteers rated 40 music videos while undergoing EEGs or having their faces recorded.

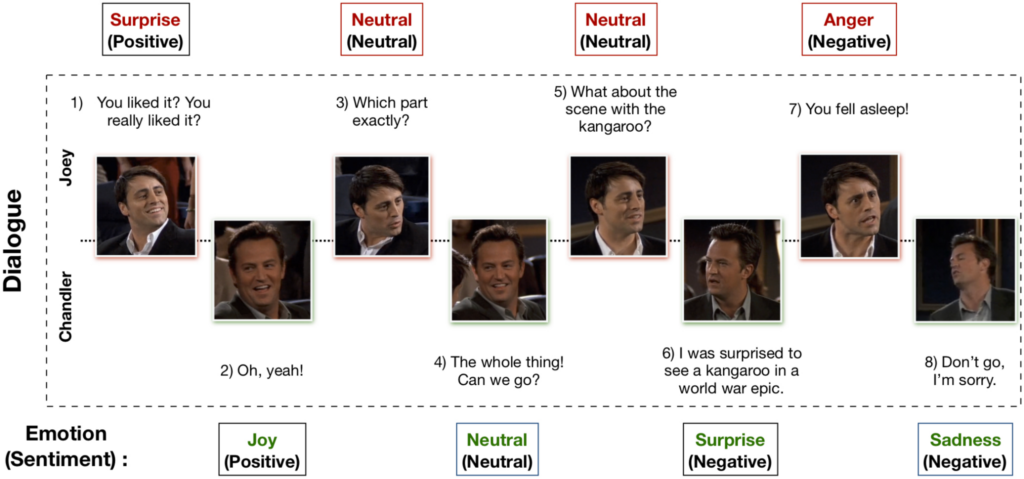
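Ratings along dimensions such as valence, arousal, and dominance are numeric, so projects often convert them into discrete classes before training a classifier. The sketch below shows one simple way to do that. The 1 to 9 rating scale, the midpoint threshold of 5, and the names `Rating`, `level`, and `quadrant` are all assumptions made for illustration; check the dataset's own documentation before relying on any of them.

```python
from dataclasses import dataclass

MIDPOINT = 5.0  # assumed threshold on an assumed 1-9 rating scale

@dataclass(frozen=True)
class Rating:
    """One participant's self-assessment of one video (hypothetical layout)."""
    valence: float
    arousal: float
    dominance: float

def level(score, midpoint=MIDPOINT):
    """Binarize a rating: strictly above the midpoint counts as 'high'."""
    return "high" if score > midpoint else "low"

def quadrant(rating):
    """Place a rating in one of four valence/arousal quadrants."""
    return f"{level(rating.valence)} valence / {level(rating.arousal)} arousal"

# Made-up ratings, not taken from the dataset.
ratings = [
    Rating(valence=7.5, arousal=8.0, dominance=6.0),
    Rating(valence=2.0, arousal=3.5, dominance=4.0),
    Rating(valence=6.5, arousal=2.0, dominance=5.5),
]

for r in ratings:
    print(quadrant(r))
```

Thresholding at a fixed midpoint is only one option. Some projects instead keep the continuous scores and treat the task as regression.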

Multimodal EmotionLines Dataset (MELD)

MELD extends the EmotionLines dataset of dialogue instances by adding visual and auditory modalities. It has over 13,000 utterances from 1,400 dialogues from the TV show Friends. Each utterance has an emotion label (anger, disgust, fear, neutral, joy, sadness, or surprise) as well as a sentiment label (negative, positive, or neutral).

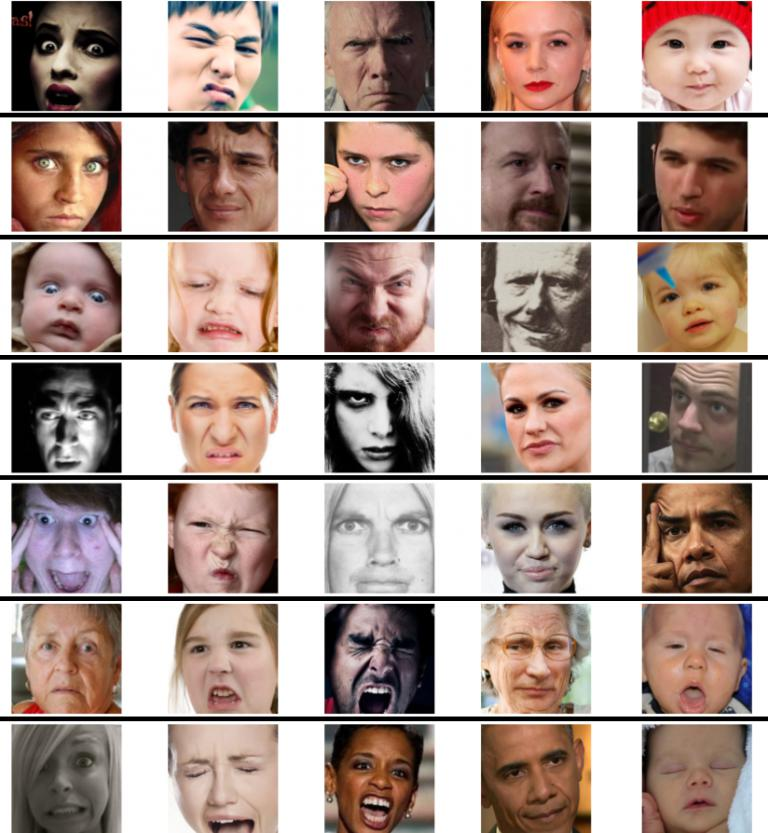
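As a rough illustration of the MELD labeling scheme described above, the following sketch models one labeled utterance and counts how often each emotion appears in a small sample. The `Utterance` class, its field names, and the sample rows are hypothetical and do not reflect the dataset's actual file format; only the two label sets come from the description above.

```python
from collections import Counter
from dataclasses import dataclass

EMOTIONS = {"anger", "disgust", "fear", "neutral", "joy", "sadness", "surprise"}
SENTIMENTS = {"negative", "positive", "neutral"}

@dataclass(frozen=True)
class Utterance:
    """One labeled utterance (hypothetical schema for illustration)."""
    dialogue_id: int
    text: str
    emotion: str
    sentiment: str

    def __post_init__(self):
        # Reject labels outside the two label sets.
        if self.emotion not in EMOTIONS:
            raise ValueError(f"unknown emotion label: {self.emotion!r}")
        if self.sentiment not in SENTIMENTS:
            raise ValueError(f"unknown sentiment label: {self.sentiment!r}")

# Made-up rows, not actual dataset content.
sample = [
    Utterance(0, "That is wonderful news!", "joy", "positive"),
    Utterance(0, "Wait, you did what?", "surprise", "negative"),
    Utterance(1, "I will see you at noon.", "neutral", "neutral"),
    Utterance(1, "I cannot wait for the party.", "joy", "positive"),
]

emotion_counts = Counter(u.emotion for u in sample)
print(emotion_counts.most_common())
```

Counting labels this way is a quick check for class imbalance, which is worth doing before training on any emotion dataset.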

AffectNet

AffectNet is a database of facial expressions that was created to facilitate research on automatic facial expression recognition. It contains more than 1 million annotated images of facial expressions, with labels indicating the presence and intensity of various emotional states, such as happiness, sadness, anger, and fear.