Pose Estimation: Concepts, Techniques, and How to Get Started

What is Pose Estimation?

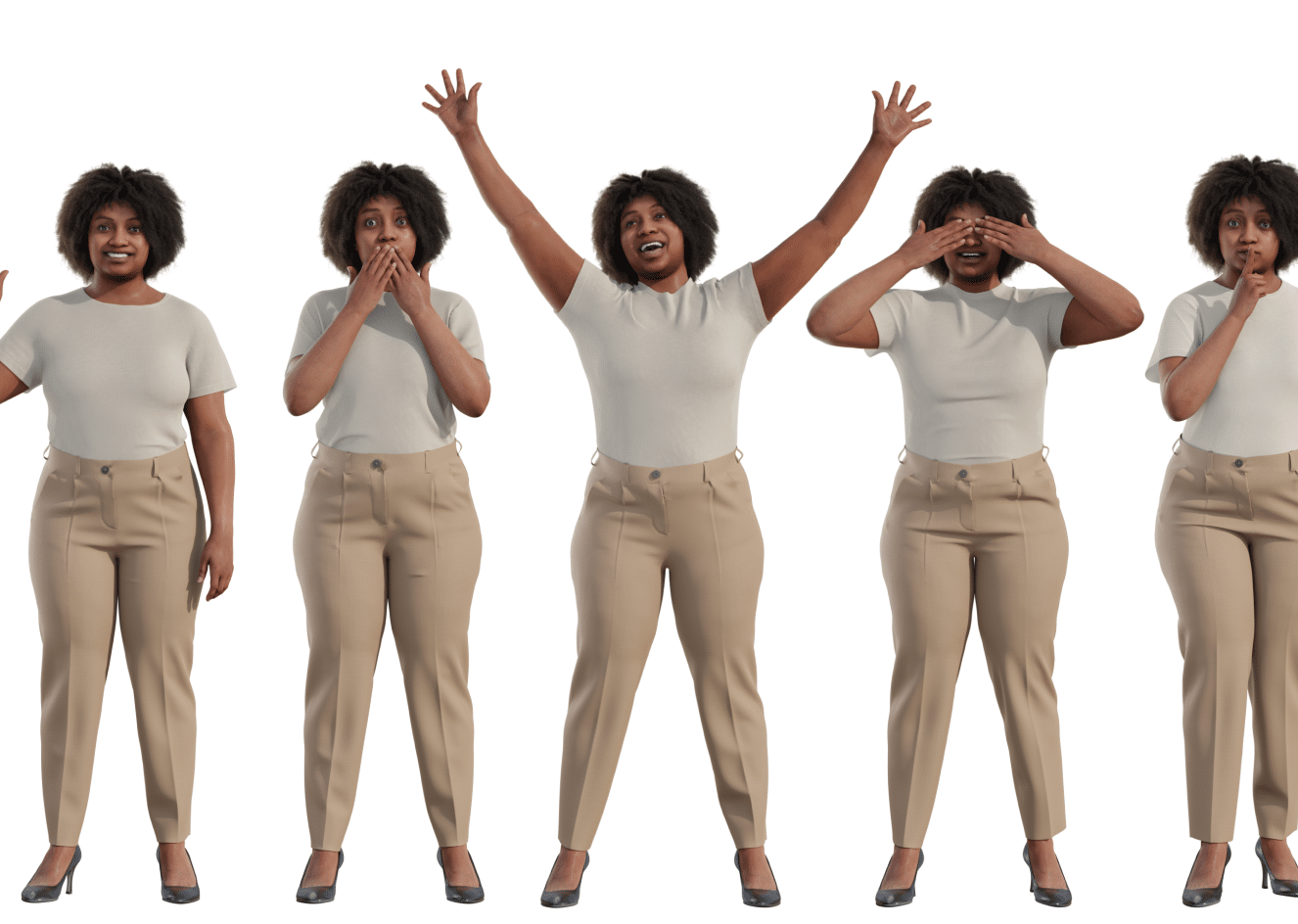

Pose estimation is a computer vision task that enables machines to detect human figures and understand their body pose in videos and images. It helps machines determine, for example, where a person's knee is located in an image. Pose estimation only estimates the location of key body joints; it does not recognize who the person in the video or image is.

Pose estimation models can help track an object or person (including multiple people) in real-world spaces. In some cases, they have an advantage over object detection models, which can locate objects in an image but provide only coarse-grained localization with a bounding box framing the object. Pose estimation models, in comparison, predict the precise location of the key points associated with a given object.

The input of a pose estimation model is typically a processed camera image, and the output is information about key points. Each detected key point is indexed by a part ID and carries a confidence score between 0.0 and 1.0. The confidence score indicates the probability that the key point exists at that specific position.
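To make the output format concrete, here is a minimal sketch of how key points might be represented and filtered by confidence. The `Keypoint` class, the `PART_NAMES` table, and the 0.5 threshold are assumptions made for illustration; real models define their own part IDs and output layout.

```python
from dataclasses import dataclass


@dataclass
class Keypoint:
    part_id: int   # index of the body part (hypothetical mapping below)
    x: float       # horizontal position in pixels
    y: float       # vertical position in pixels
    score: float   # confidence in the range 0.0-1.0


# Hypothetical part-ID table; actual models ship their own.
PART_NAMES = {0: "nose", 1: "left_knee", 2: "right_knee"}


def confident_keypoints(keypoints, threshold=0.5):
    """Return {part name: (x, y)} for key points scoring at least `threshold`."""
    return {
        PART_NAMES[kp.part_id]: (kp.x, kp.y)
        for kp in keypoints
        if kp.score >= threshold
    }


detections = [
    Keypoint(0, 120.0, 40.0, 0.97),
    Keypoint(1, 100.0, 210.0, 0.81),
    Keypoint(2, 140.0, 212.0, 0.22),  # low score, for example an occluded knee
]
visible = confident_keypoints(detections)
```

Downstream code would typically work only with `visible`, since low-confidence key points tend to be unreliable.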

This is part of our series of articles about face recognition.

Pose Estimation: Use Cases and Applications

Human Activity and Movement

Pose estimation models track and measure human movement. They can help power various applications, for example, an AI-based personal trainer. In this scenario, the trainer points a camera at an individual performing a workout, and the pose estimation model indicates whether the individual completed the exercise properly or not.
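One way such an application might judge an exercise is by measuring joint angles from the detected key points. The sketch below computes the angle at a joint from three 2D key points and applies it to a squat check. The function names and the 100-degree threshold are illustrative assumptions, not values from any particular product.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("key points must be distinct")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))


def squat_is_deep_enough(hip, knee, ankle, max_knee_angle=100.0):
    """Hypothetical form check: the knee must bend to max_knee_angle or less."""
    return joint_angle(hip, knee, ankle) <= max_knee_angle
```

A standing leg gives a knee angle near 180 degrees, so the check fails until the person bends the knee far enough.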

A personal trainer application powered by pose estimation makes home workout routines safer and more effective. Pose estimation models can run on mobile devices without Internet access, helping bring workouts (or other applications) to remote locations via mobile devices.

Augmented Reality Experiences

Pose estimation can help create realistic and responsive augmented reality (AR) experiences. Here it relies on key points whose positions relative to each other do not change, which makes it possible to locate and track rigid or flat objects, such as paper sheets and musical instruments.

Rigid pose estimation can determine an object’s primary key points and then track these key points as they move across real-world spaces. This technique enables overlaying a digital AR object on the real object the system is tracking.
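As a simplified illustration of the overlay step, the sketch below assumes a flat object facing the camera, tracked by just two key points. From those two correspondences it recovers a 2D similarity transform (rotation, uniform scale, translation) and uses it to place the corners of a digital overlay. Real AR systems generally estimate a full 3D pose or a homography from more points; the function names here are hypothetical.

```python
def similarity_from_two_points(ref_a, ref_b, cur_a, cur_b):
    """Return complex (a, b) such that cur = a * ref + b for 2D points.

    Points are (x, y) tuples treated as complex numbers x + yj, so that
    multiplying by `a` rotates and scales, and adding `b` translates.
    """
    p0, p1 = complex(*ref_a), complex(*ref_b)
    q0, q1 = complex(*cur_a), complex(*cur_b)
    if p0 == p1:
        raise ValueError("reference key points must be distinct")
    a = (q1 - q0) / (p1 - p0)
    b = q0 - a * p0
    return a, b


def place_overlay(corners, a, b):
    """Map overlay corners from the reference frame into the current frame."""
    placed = []
    for x, y in corners:
        z = a * complex(x, y) + b
        placed.append((z.real, z.imag))
    return placed


# Reference key points at (0, 0) and (1, 0); in the current frame the object
# has moved to (10, 10), rotated by 90 degrees, and doubled in size.
a, b = similarity_from_two_points((0, 0), (1, 0), (10, 10), (10, 12))
overlay = place_overlay([(0, 0), (1, 0), (1, 1)], a, b)
```

Re-running this for every frame keeps the overlay attached to the tracked object as it moves.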

Animation & Gaming

Pose estimation can potentially help streamline and automate character animation. Combining deep learning-based pose estimation with real-time motion capture removes the need for markers or specialized suits when animating characters.

Pose estimation based on deep learning can also help automate capturing animations for immersive video game experiences. Microsoft’s Kinect depth camera popularized this type of experience.

Types of Human Pose Estimation

2D Human Pose Estimation

2D human pose estimation involves using visual input, such as images and videos, to predict the 2D position of human body key points. Traditionally, 2D human pose estimation used hand-crafted feature extraction techniques for individual body parts.

In the past, computer vision obtained global pose structures by describing the human body as a stick figure. Fortunately, modern deep learning approaches significantly improve 2D human pose estimation performance for single-person as well as multi-person pose estimation.

3D Human Pose Estimation

3D human pose estimation predicts the locations of human joints in 3D space. It can work on monocular images or videos, and provides 3D structure information about the human body. It can power various applications, including 3D animation, 3D action prediction, and virtual and augmented reality.

3D pose estimation can also use multiple viewpoints and additional sensors, such as IMU and LiDAR, combined through information fusion techniques. However, 3D human pose estimation faces a major challenge: accurate 3D annotations are time-consuming to obtain, and manual labeling is expensive and impractical. Computational efficiency, model generalization, and robustness to occlusion are also significant challenges.
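To illustrate how multiple viewpoints help, the sketch below triangulates a single joint from two camera rays using the midpoint method: it finds the closest points on the two rays and returns the point halfway between them. It assumes each ray (camera center plus direction through the detected 2D key point) has already been obtained from calibrated cameras, which is the harder part in practice and is not shown. The function names are hypothetical.

```python
def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(o1, d1, o2, d2):
    """Estimate a 3D joint position from two rays (origin o, direction d).

    Returns the midpoint of the shortest segment connecting the two rays.
    """
    w = tuple(p - q for p, q in zip(o1, o2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth cannot be recovered")
    s = (b * e - c * d) / denom  # parameter along ray 1
    t = (a * e - b * d) / denom  # parameter along ray 2
    p1 = tuple(o + s * k for o, k in zip(o1, d1))
    p2 = tuple(o + t * k for o, k in zip(o2, d2))
    return tuple((u + v) / 2 for u, v in zip(p1, p2))


# Two cameras one unit apart, both looking at a joint at (0.5, 0.2, 2.0).
joint = triangulate_midpoint(
    (0.0, 0.0, 0.0), (0.5, 0.2, 2.0),
    (1.0, 0.0, 0.0), (-0.5, 0.2, 2.0),
)
```

With noisy detections the rays no longer intersect exactly, and the midpoint acts as a simple compromise between the two views.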

3D Human Body Modeling

Human pose estimation uses the location of human body parts to build a representation of the human body from visual input data. For example, it can build a body skeleton pose to represent the human body.

Human body modeling represents key points and features extracted from visual input data. It helps infer and describe human body poses and render 3D or 2D poses. It often involves an N-joint rigid kinematic model that represents the human body as an entity with limbs and joints, including body shape information and the kinematic body structure.
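A kinematic model of this kind can be sketched as a tree of joints connected by fixed-length bones. The toy example below uses a hypothetical three-joint leg chain in 2D and computes joint positions from joint angles (forward kinematics). The joint names, bone lengths, and angle convention are assumptions chosen for illustration.

```python
import math

# Hypothetical chain: (joint name, parent joint, length of the bone from parent).
# Parents must be listed before their children.
SKELETON = [
    ("hip", None, 0.0),
    ("knee", "hip", 0.45),
    ("ankle", "knee", 0.40),
]


def forward_kinematics(root_xy, joint_angles):
    """Compute 2D joint positions from angles given in radians.

    The root's angle sets the overall orientation; every other joint's angle
    is the rotation of its incoming bone relative to the parent's bone.
    Missing angles default to 0.0.
    """
    positions = {}
    world_angle = {}
    for name, parent, length in SKELETON:
        if parent is None:
            positions[name] = root_xy
            world_angle[name] = joint_angles.get(name, 0.0)
            continue
        angle = world_angle[parent] + joint_angles.get(name, 0.0)
        px, py = positions[parent]
        positions[name] = (
            px + length * math.cos(angle),
            py + length * math.sin(angle),
        )
        world_angle[name] = angle
    return positions


straight_leg = forward_kinematics((0.0, 0.0), {})
bent_knee = forward_kinematics((0.0, 0.0), {"ankle": math.pi / 2})
```

Because bone lengths are fixed, a pose is fully described by the root position and the joint angles, which is what makes this representation compact.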

What is Multi-Person Pose Estimation?

Multi-person pose estimation poses a significant challenge because it requires analyzing a diverse environment. The complexity arises because the number and location of individuals in an image are unknown. Here are two approaches to help solve this problem:

- The top-down approach: first run a person detector, then estimate the location of body parts for each detected person, and finally compute a pose for each person.

- The bottom-up approach: first detect all body parts of all people in the image, then associate or group the parts that belong to each individual.

The top-down approach is generally easier to implement because implementing a person detector is simpler than implementing association or grouping algorithms. However, it is not easy to judge which approach performs better. Overall performance depends on which component works better: the person detector or the association and grouping algorithm.

Top Down vs. Bottom Up Pose Estimation

Top Down Approach

Top-down pose estimation first runs a human detector to identify candidate people in the image, then analyzes the region inside each detected bounding box to predict that person's joints. Any object detector that outputs bounding boxes for people can serve as the human detector.

There are several drawbacks of the top-down approach:

- Accuracy depends heavily on the human detection results: the pose estimator is typically very sensitive to the bounding boxes detected in the image.

- Running time increases in proportion to the number of people detected, so crowded images are slow to process.
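The structure of a top-down pipeline, and the reason for both drawbacks, can be seen in a short sketch. Here `detect_people` and `estimate_pose` are hypothetical callables standing in for a real detector and a real single-person pose model, and the image is a plain nested list so the example stays self-contained.

```python
def top_down_poses(image, detect_people, estimate_pose):
    """Run a single-person pose estimator once per detected bounding box.

    image:         2D nested list of pixel values (rows of columns)
    detect_people: callable returning boxes as (x0, y0, x1, y1)
    estimate_pose: callable returning (part_id, x, y, score) tuples in
                   crop coordinates
    """
    poses = []
    for x0, y0, x1, y1 in detect_people(image):
        # The estimator only ever sees this crop, so a bad box means a bad pose.
        crop = [row[x0:x1] for row in image[y0:y1]]
        local = estimate_pose(crop)
        # Shift key points from crop coordinates back to image coordinates.
        poses.append([(pid, x + x0, y + y0, s) for pid, x, y, s in local])
    return poses


# Stand-in components, for illustration only.
def fake_detector(image):
    return [(1, 2, 5, 6), (6, 0, 10, 4)]


def fake_estimator(crop):
    # Pretend the only key point (part 0) sits at the center of the crop.
    return [(0, len(crop[0]) / 2, len(crop) / 2, 0.9)]


image = [[0] * 10 for _ in range(8)]
poses = top_down_poses(image, fake_detector, fake_estimator)
```

The loop calls the pose estimator once per box, which is why the cost grows with the number of people in the image.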

Bottom Up Approach

Bottom-up pose estimation first detects all the human joints in an image, and then assembles them into a pose for each individual person. Researchers have proposed several ways to achieve this. For example:

- The DeepCut algorithm by Pishchulin et al. identifies candidate joints, then assigns them to individual persons using integer linear programming (ILP). However, solving this ILP is an NP-hard problem, which makes it time-consuming to process.

- The DeeperCut algorithm by Insafutdinov et al. uses improved joint detectors and image-dependent pairwise scores. This improves performance but still takes a few minutes to run per image.

- Cao et al. proposed a method that predicts heatmaps of human key points and uses part affinity fields (PAFs) to associate them with persons in the image. A PAF is a set of flow fields that encodes unstructured pairwise relationships between body parts. The algorithm then groups candidate joints for each person using a greedy algorithm, which can achieve real-time performance. The downside of this approach is that it can be inaccurate when grouping the parts of a pose that belong to each person in the image.

- Miaopeng Li et al. proposed a method that combines the top-down and bottom-up approaches. They use a feed-forward network for bottom-up joint recognition, and then evaluate the obtained poses using bounding boxes from a top-down algorithm. In testing, the algorithm was able to predict confidence maps, connection relationships, and bounding boxes accurately for all persons, with better performance than both bottom-up and top-down algorithms.
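The greedy grouping idea behind the PAF-based method can be illustrated with a much-simplified sketch. It scores each candidate limb by how well a vector field aligns with the segment between two candidate joints, then accepts the best-scoring pairs first while never reusing a joint. This is not the authors' implementation: the field is a hypothetical callable rather than a network output, and only one limb type is handled.

```python
import math


def paf_score(field, start, end, samples=10):
    """Average alignment of a vector field with the segment start -> end.

    `field(x, y)` returns a 2D vector; `samples` must be at least 2.
    """
    dx, dy = end[0] - start[0], end[1] - start[1]
    length = math.hypot(dx, dy)
    if length == 0:
        return 0.0
    ux, uy = dx / length, dy / length
    total = 0.0
    for i in range(samples):
        t = i / (samples - 1)
        fx, fy = field(start[0] + t * dx, start[1] + t * dy)
        total += fx * ux + fy * uy
    return total / samples


def greedy_limbs(starts, ends, score, min_score=0.0):
    """Pair start joints with end joints, best score first, one use per joint."""
    candidates = sorted(
        ((score(s, e), i, j)
         for i, s in enumerate(starts)
         for j, e in enumerate(ends)),
        reverse=True,
    )
    used_starts, used_ends, limbs = set(), set(), []
    for value, i, j in candidates:
        if value <= min_score or i in used_starts or j in used_ends:
            continue
        limbs.append((i, j))
        used_starts.add(i)
        used_ends.add(j)
    return limbs


# Toy field: points straight down along two vertical "arms" at x = 0 and x = 10.
def toy_field(x, y):
    return (0.0, 1.0) if min(abs(x), abs(x - 10)) <= 1 else (0.0, 0.0)


shoulders = [(0, 0), (10, 0)]
elbows = [(10, 5), (0, 5)]  # listed in the opposite order on purpose
limbs = greedy_limbs(shoulders, elbows, lambda s, e: paf_score(toy_field, s, e))
```

Greedy selection is fast because it avoids solving a global assignment problem, but an early wrong match cannot be undone, which is one way grouping errors can arise.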