Gaze Estimation: Common Applications, ML Models, and Datasets

What Is Gaze Estimation?

Gaze estimation is the process of determining where a person is looking based on their eye movements and facial features. It is used in a variety of applications, including human-computer interaction, virtual and augmented reality, psychology, and neuroscience research.

Gaze estimation can be performed with several approaches, including computer vision algorithms, machine learning techniques, and specialized hardware such as eye-tracking cameras. It typically involves analyzing the position and movement of the eyes and other facial features to estimate the direction of the person’s gaze.

This is part of a series of articles about face recognition.


How Can Eye Gaze Tracking Be Used?

Eye gaze tracking technology can be used in applications including entertainment, market research, medical diagnosis and treatment, and assistive technologies for people with disabilities.

Market Research

Eye gaze tracking technology enables brands to assess customer attention to their marketing messages and evaluate product performance, package design, and the overall customer experience.

For example, implementing eye tracking for in-store testing provides brands with information about in-store navigation, customer search behavior, and purchasing choices. Brands can also integrate eye gaze tracking with retail analytics to improve customer service and business processes.

Automotive Industry

Eye gaze tracking technology helps assess drivers’ visual attention to navigation and dashboard layout. Researchers using eye-tracking glasses can assess various aspects, including where drivers look when facing obstacles on the street, how talking on the phone affects driving behavior, and how speeding compromises visual attention.

For example, recent studies have investigated gaze-controlled interaction with a head-up display (HUD), which aims to reduce eyes-off-road distraction. Eye tracking can also help monitor drivers’ cognitive load to identify potential distractions.

Aviation

Eye tracking supports flight safety by comparing scan paths and fixation durations to assess the progress of pilot trainees. It also helps assess pilots’ skills and analyze the crew’s joint attention and shared situational awareness.

Eye tracking technology can also be used to interact with helmet-mounted display systems (HMDS) and multi-functional displays in military aircraft. Studies have assessed the utility of an eye tracker for head-up target acquisition and head-up target locking in HMDS. Feedback from pilots suggests that while the technology is promising, its software and hardware components are not yet mature enough.

Another study investigated interactions with multi-functional displays in a simulator environment. It found that eye tracking could significantly improve response times and reduce perceived cognitive load compared with existing systems. The study also investigated using fixation and pupillary response measurements to assess pilots’ cognitive load, with the goal of improving the design of next-generation adaptive cockpits. Eye tracking can also help detect pilot fatigue.


Gaze Estimation Models and Algorithms

Feature-Based Gaze Estimation

Feature-based methods use specific features of the human eye, such as corneal reflections (glints) and the pupil contour, to estimate gaze. Compared to other facial features, these are less sensitive to changes in lighting and viewpoint. Still, traditional feature-based methods were unreliable outdoors or under strong ambient light.
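To make the feature-extraction step concrete, here is a very rough sketch. In a dark-pupil infrared setup the pupil often appears as the darkest blob and the corneal reflection (glint) as the brightest, so thresholding and taking centroids gives approximate centers; their difference is the pupil center-corneal reflection (PCCR) vector used by many feature-based trackers. The thresholds and function names are hypothetical, and real systems typically fit an ellipse to the pupil contour rather than taking a raw centroid.

```python
import numpy as np


def centroid(mask):
    """Mean (x, y) position of the True pixels in a boolean mask, or None if empty."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    return np.array([cols.mean(), rows.mean()])


def pupil_and_glint(eye_img, dark_thresh=40, bright_thresh=220):
    """Rough pupil and glint centers from a grayscale IR eye image.

    Assumes a dark-pupil setup: the pupil is the darkest region and the
    glint the brightest. Both thresholds are hypothetical placeholders.
    """
    img = np.asarray(eye_img)
    return centroid(img < dark_thresh), centroid(img > bright_thresh)


def pccr_vector(pupil, glint):
    """Pupil center minus corneal reflection, in image pixels."""
    return np.asarray(pupil, float) - np.asarray(glint, float)
```

In a complete tracker, the PCCR vector would then be mapped to screen coordinates through a per-user calibration.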

One solution is to combine head pose and eye location information. An example is the pupil center-eye corner (PC-EC) vector proposed by Sesma et al. (2012), which measures the pupil center relative to the eye corner instead of relative to a corneal reflection. Another approach, known as behavior-informed validation, uses supervised learning algorithms that learn eye gaze from user interaction cues provided by human testers.
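Using a PC-EC vector for gaze estimation comes down to a regression from that vector to screen coordinates. The sketch below assumes a second-order polynomial mapping fitted by least squares on a handful of calibration targets, a common choice in interpolation-based gaze estimation; it is not necessarily the exact mapping evaluated by Sesma et al., and all function names are hypothetical.

```python
import numpy as np


def pcec_vector(pupil_center, eye_corner):
    """Pupil center minus eye corner, in image pixels (the PC-EC vector)."""
    return np.asarray(pupil_center, float) - np.asarray(eye_corner, float)


def poly_features(v):
    """Second-order polynomial terms of PC-EC vectors with shape (n, 2)."""
    vx, vy = v[:, 0], v[:, 1]
    return np.column_stack([np.ones_like(vx), vx, vy, vx * vy, vx**2, vy**2])


def fit_calibration(vectors, screen_points):
    """Least-squares fit from PC-EC vectors to known on-screen target positions."""
    A = poly_features(np.asarray(vectors, float))
    coeffs, *_ = np.linalg.lstsq(A, np.asarray(screen_points, float), rcond=None)
    return coeffs  # shape (6, 2): one column per screen coordinate


def estimate_gaze(coeffs, vectors):
    """Map one or more PC-EC vectors to estimated screen coordinates."""
    return poly_features(np.atleast_2d(np.asarray(vectors, float))) @ coeffs
```

A typical calibration shows the user a 3 x 3 grid of targets, records one PC-EC vector per target, and calls `fit_calibration`; six coefficients per screen axis need at least six well-spread targets.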

Model-Based Gaze Estimation

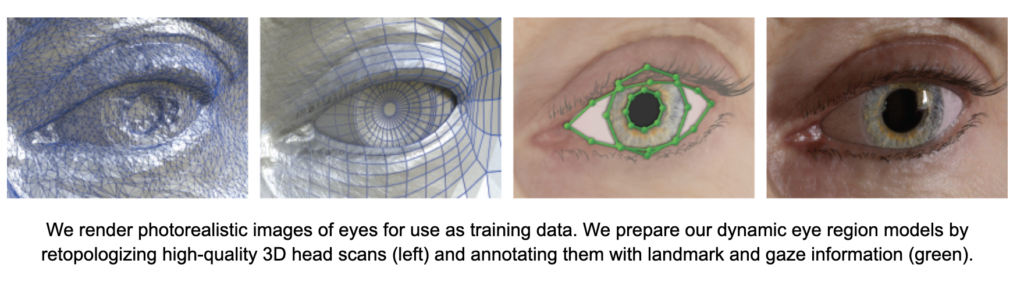

Model-based methods attempt to fit a 2D model of the iris ellipse, or a 3D model of the entire eyeball, to the input eye image. 3D approaches are more accurate but may require high-resolution eye images or per-user calibration.

Most 3D model-based approaches rely on the center and radius of the eyeball and on the angular offset between the eye’s optical axis and visual axis (often called angle kappa). The eyeball center can be estimated from facial landmarks, such as the tip of the nose, or from deformable eye-region models.
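The geometry above can be sketched in a few lines: the optical axis runs from the eyeball center through the pupil center, and a per-user offset rotates it onto the visual axis. This is a simplified sketch, assuming 3D points in camera coordinates, a hypothetical (pitch, yaw) convention with x right, y up, and z toward the scene, and an offset added in angle space rather than applied as a full 3D rotation.

```python
import numpy as np


def unit(v):
    v = np.asarray(v, float)
    return v / np.linalg.norm(v)


def optical_axis(eyeball_center, pupil_center):
    """Direction from the eyeball center through the pupil (or iris) center."""
    return unit(np.asarray(pupil_center, float) - np.asarray(eyeball_center, float))


def to_angles(d):
    """Unit vector -> (pitch, yaw) in radians under the assumed convention."""
    x, y, z = d
    return np.arcsin(y), np.arctan2(x, z)


def from_angles(pitch, yaw):
    """(pitch, yaw) in radians -> unit gaze vector under the same convention."""
    return np.array([np.cos(pitch) * np.sin(yaw), np.sin(pitch), np.cos(pitch) * np.cos(yaw)])


def visual_axis(eyeball_center, pupil_center, kappa_pitch, kappa_yaw):
    """Apply a per-user angular offset (kappa) to the optical axis."""
    pitch, yaw = to_angles(optical_axis(eyeball_center, pupil_center))
    return from_angles(pitch + kappa_pitch, yaw + kappa_yaw)
```

The kappa offsets are usually the per-user quantities that calibration estimates, which is one reason 3D model-based methods may need a calibration step.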

3D models rely on parameters from camera calibration and on a global geometric model of the setup, including the positions of the light sources and the position and orientation of the camera.
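With a calibrated geometric model, a 3D gaze direction can be turned into a point on the screen by intersecting the gaze ray with the screen plane. A minimal sketch, assuming the eye position, gaze direction, and screen plane (a point on it and its normal) are all expressed in the same camera coordinate system; the function name is hypothetical.

```python
import numpy as np


def point_of_gaze(eye_pos, gaze_dir, plane_point, plane_normal):
    """Intersect the gaze ray eye_pos + t * gaze_dir with the screen plane.

    Returns the 3D intersection point, or None if the ray is parallel to
    the plane or the plane lies behind the eye.
    """
    o = np.asarray(eye_pos, float)
    d = np.asarray(gaze_dir, float)
    p0 = np.asarray(plane_point, float)
    n = np.asarray(plane_normal, float)
    denom = d @ n
    if abs(denom) < 1e-9:  # ray parallel to the screen
        return None
    t = ((p0 - o) @ n) / denom
    if t <= 0:  # screen is behind the eye
        return None
    return o + t * d
```

For example, an eye 600 mm in front of a screen lying in the z = 0 plane, looking along (0.1, 0, -1), hits the screen at (60, 0, 0) mm.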

Cross-Ratio-Based Gaze Estimation

Cross-ratio methods use several infrared (IR) or near-infrared light sources and their reflections on the cornea to estimate gaze. For example, IR light-emitting diodes (LEDs) can be placed at the corners of a computer monitor; their corneal reflections form a polygon in the eye image, and the position of the pupil center within that polygon is mapped to a point on the screen. Some variants add learning-based corrections to reduce errors caused by spatial variation and head movement.

Cross-ratio gaze detection makes it possible to estimate the point of gaze without calculating the geometric relations between the eye and the camera, and often without requiring calibration.
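Because a projective transformation preserves cross-ratios, the core of the method can be sketched with a planar homography estimated from the four glints. This is a simplified sketch: it assumes the glints are detected in a known order, ignores the corneal-curvature and head-movement corrections that practical cross-ratio systems add, and uses hypothetical function names.

```python
import numpy as np


def homography(src, dst):
    """3x3 projective transform mapping 4 src points to 4 dst points (DLT, h33 = 1)."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.array(A, float), np.array(b, float))
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, point):
    x, y, w = H @ np.array([point[0], point[1], 1.0])
    return np.array([x / w, y / w])


def gaze_from_glints(glints, pupil_center, screen_w, screen_h):
    """Map the pupil center into screen pixels using the four corner glints.

    glints: image positions of the reflections of LEDs at the screen corners,
    ordered top-left, top-right, bottom-right, bottom-left.
    """
    corners = [(0, 0), (screen_w, 0), (screen_w, screen_h), (0, screen_h)]
    H = homography(glints, corners)
    return apply_homography(H, pupil_center)
```

No eye or camera geometry appears anywhere in the mapping, which is what lets cross-ratio methods skip explicit geometric modeling.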

Appearance-Based Gaze Estimation

Appearance-based methods learn a direct mapping from an eye (or face) image to the gaze direction or point of gaze, without explicitly extracting eye features or fitting a geometric model. They are more effective than other approaches when only low-resolution eye images are available.
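As a deliberately minimal illustration of the appearance-based idea (learning a direct mapping from pixels to gaze), the sketch below substitutes linear ridge regression for a neural network and evaluates it with mean angular error, the metric commonly used to compare gaze estimators. The (pitch, yaw) convention, the regularization strength, and all function names are assumptions for illustration.

```python
import numpy as np


def fit_ridge(images, gaze, alpha=1.0):
    """Linear map from flattened eye images to (pitch, yaw) via ridge regression."""
    X = images.reshape(len(images), -1).astype(float)
    x_mean, g_mean = X.mean(axis=0), gaze.mean(axis=0)
    Xc = X - x_mean
    W = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(Xc.shape[1]), Xc.T @ (gaze - g_mean))
    return x_mean, W, g_mean


def predict(model, images):
    x_mean, W, g_mean = model
    X = images.reshape(len(images), -1).astype(float)
    return (X - x_mean) @ W + g_mean


def angular_error_deg(pred, true):
    """Angle between predicted and true gaze directions, in degrees."""
    def vec(pitch_yaw):
        p, y = pitch_yaw[:, 0], pitch_yaw[:, 1]
        return np.stack([np.cos(p) * np.sin(y), np.sin(p), np.cos(p) * np.cos(y)], axis=1)

    cos = np.sum(vec(pred) * vec(true), axis=1)
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))
```

A CNN replaces the single linear layer with learned convolutional features, which is what makes appearance-based methods robust to real-world variation in lighting, people, and head pose.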

Modern appearance-based gaze estimation relies on convolutional neural networks (CNNs). For example, VGG16, AlexNet, ResNet-18, and ResNet-50 have been used for gaze estimation in the wild, aiming for robustness across different people and head poses. Other approaches use multi-modal training, combining inputs such as head pose, full-face images, and face-grid data. For a full review of deep learning appearance-based methods, see Cheng et al. (2021).
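To illustrate the face-grid input used in multi-modal models, the sketch below converts a face bounding box into a coarse binary grid over the camera frame, telling the network where the face sits relative to the camera. The 25 x 25 grid size follows the iTracker model trained on GazeCapture as we understand it; treat the grid size, the (x, y, w, h) box format, and the function name as assumptions.

```python
import numpy as np


def face_grid(frame_w, frame_h, face_box, grid_size=25):
    """Binary grid marking which cells of the camera frame the face box covers.

    face_box: (x, y, w, h) in pixels with a top-left origin.
    """
    x, y, w, h = face_box
    sx, sy = grid_size / frame_w, grid_size / frame_h
    # Cell range covered by the box, clipped to the grid.
    c0, c1 = max(int(np.floor(x * sx)), 0), min(int(np.ceil((x + w) * sx)), grid_size)
    r0, r1 = max(int(np.floor(y * sy)), 0), min(int(np.ceil((y + h) * sy)), grid_size)
    grid = np.zeros((grid_size, grid_size), dtype=np.uint8)
    grid[r0:r1, c0:c1] = 1
    return grid
```

The grid is usually flattened and fed to the network alongside the eye and face crops.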


Datasets for Gaze Estimation

MPIIGaze

The MPIIGaze dataset contains 213,659 images of 15 participants, collected during everyday laptop use. It is highly variable in terms of illumination and appearance. The accompanying paper introduced a multimodal CNN for appearance-based gaze estimation in real-world conditions.
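Because MPIIGaze covers only 15 people, person-independent results are commonly reported with leave-one-person-out cross-validation: train on 14 participants, test on the remaining one, and repeat for each participant. A minimal sketch of the split, assuming each sample carries a participant ID (the ID format and function name are hypothetical):

```python
import numpy as np


def leave_one_person_out(subject_ids):
    """Yield (held_out_subject, train_idx, test_idx) once per subject."""
    subject_ids = np.asarray(subject_ids)
    for subject in np.unique(subject_ids):
        test = np.flatnonzero(subject_ids == subject)
        train = np.flatnonzero(subject_ids != subject)
        yield subject, train, test
```

scikit-learn's `LeaveOneGroupOut` produces the same splits when the participant IDs are passed as `groups`.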

Source: Max Planck Institute

GazeCapture

GazeCapture is a crowdsourced eye-tracking dataset featuring about 2.5 million images of 1,474 individuals. Participants used an iOS application that recorded images from the front-facing camera of their mobile devices while they looked at targets on the screen. It includes subjects of varying ages, ethnicities, and appearances.

Source: MIT

The Provo Corpus

The Provo Corpus is a collection of eye-tracking data and predictability norms. It provides cloze scores, which estimate how predictable each word is from its preceding context, making it useful for research on predictive reading processes.
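A cloze score is the proportion of participants who, given the text up to a word, supply that word as the next one. A minimal sketch of that computation, assuming the responses for a single context have already been collected and are normalized only for case and surrounding whitespace (the Provo Corpus's own preprocessing may differ; function names are hypothetical):

```python
from collections import Counter


def cloze_probabilities(responses):
    """Share of participants giving each completion for one sentence context."""
    normalized = [r.strip().lower() for r in responses]
    total = len(normalized)
    if total == 0:
        return {}
    return {word: count / total for word, count in Counter(normalized).items()}


def cloze_score(responses, target):
    """Cloze probability of the word that actually appears in the text."""
    return cloze_probabilities(responses).get(target.strip().lower(), 0.0)
```

For example, if three of four participants complete a context with "dog", the cloze score for "dog" is 0.75.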

Gaze-in-the-Wild

The Gaze-in-the-Wild (GW) dataset lets you study the interaction between the ocular and vestibular systems in real-world settings where head movement is unrestricted. This naturalistic, multimodal dataset includes head and eye movements captured by mobile eye trackers. It includes head and eye rotational velocities, infrared scene images, and eye images.
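Rotational velocities like these are often used to segment gaze into events such as fixations and saccades. The sketch below shows velocity-threshold identification (I-VT), assuming gaze directions as 3D vectors with timestamps in seconds. The 100 deg/s threshold is a hypothetical placeholder, and in head-free recordings such as GW, head motion and smooth pursuit mean a single threshold is usually only a first step.

```python
import numpy as np


def angular_velocity_deg_s(directions, timestamps):
    """Angular speed between consecutive gaze vectors, in degrees per second."""
    d = np.asarray(directions, float)
    d = d / np.linalg.norm(d, axis=1, keepdims=True)
    cos = np.clip(np.sum(d[1:] * d[:-1], axis=1), -1.0, 1.0)
    dt = np.diff(np.asarray(timestamps, float))
    return np.degrees(np.arccos(cos)) / dt


def ivt_labels(directions, timestamps, threshold_deg_s=100.0):
    """Label each inter-sample interval as 'saccade' or 'fixation' (I-VT)."""
    v = angular_velocity_deg_s(directions, timestamps)
    return np.where(v > threshold_deg_s, "saccade", "fixation")
```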

SynthesEyes

SynthesEyes is a collection of synthesized eye images for training eye-shape registration and gaze estimation models. It contains 11,382 photo-realistic eye images, organized in ten region-specific directories. The training images are fully labeled.

Source: University of Cambridge

VQA-MHUG

VQA-MHUG is a multimodal human gaze dataset containing eye-tracking data from 49 participants, recorded while they answered visual questions about images. It combines gaze on the images with gaze on the text-based questions, enabling the study of human attention across both modalities. It offers a way to improve VQA (visual question answering) models, in particular by examining how they attend to the question text.