Gesture Recognition: Use Cases, Technologies, and Datasets

What Is Gesture Recognition?

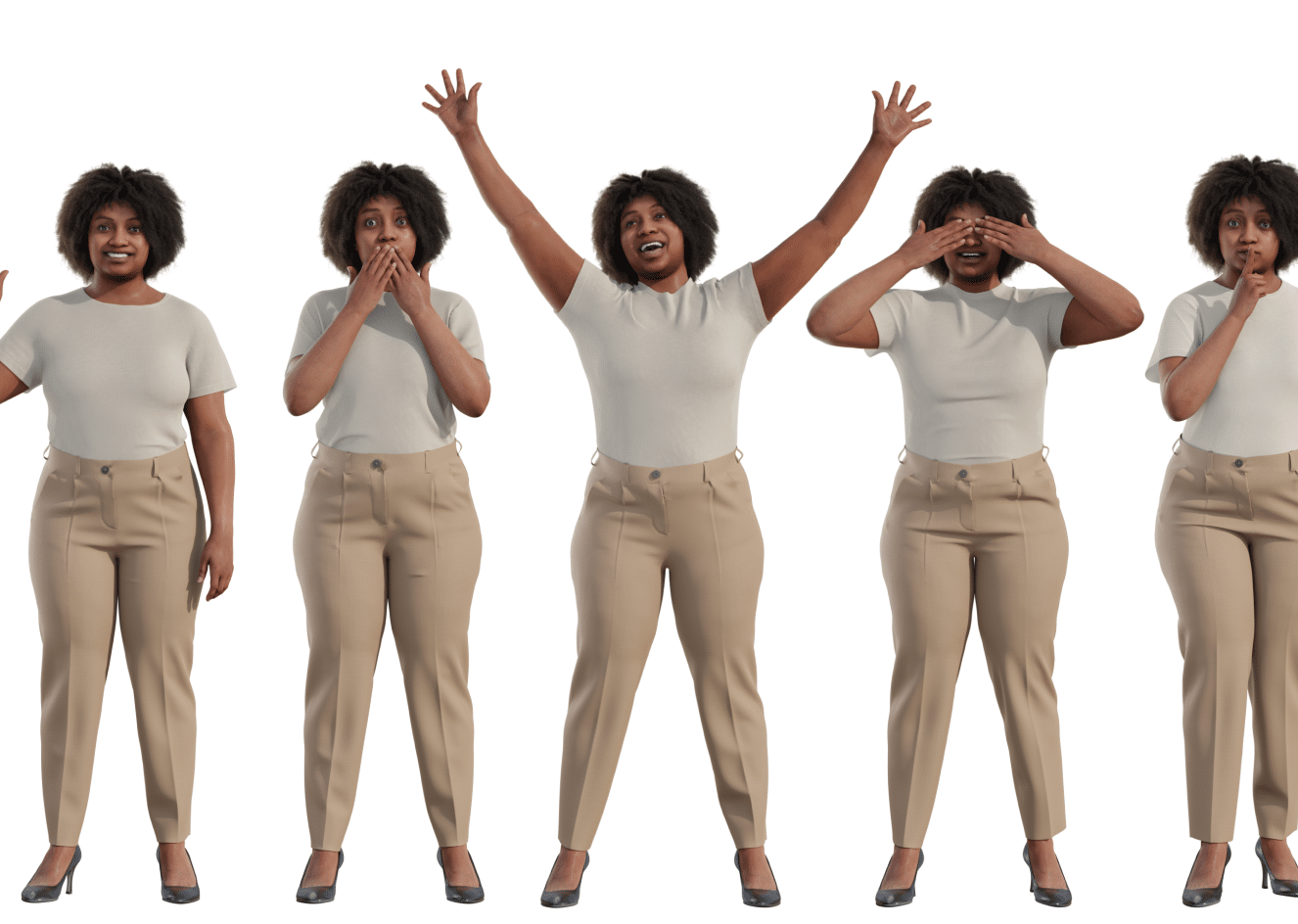

Gesture recognition is the ability of a computer or device to detect and interpret human gestures as input. Such gestures include hand movements and even finger-written symbols. The technology behind gesture recognition involves using cameras or other sensors to capture the gestures, and then using machine learning algorithms to analyze and interpret the captured data.

Gesture recognition has applications in entertainment, such as video games and virtual reality. It also provides novel ways of interacting with interfaces, such as controlling a presentation or playing music by gesturing at a device.

This is part of a series of articles about body segmentation.

What Are the Use Cases of Gesture Recognition?

Gesture recognition can be used to control devices or interfaces, such as a computer or a smartphone, through movements such as hand or body gestures and facial expressions.

Gesture recognition has a variety of uses, including:

- Human-computer interaction: Gesture recognition can be used to control computers, smartphones, and other devices through gestures, such as swiping, tapping, and pinching.

- Gaming: Gesture recognition can be used to control characters and objects in video games, making the gaming experience more immersive and interactive.

- Virtual and augmented reality: Gesture recognition can be used to interact with virtual and augmented reality environments, allowing users to control and manipulate objects in those environments.

- Robotics: Gesture recognition can be used to control robots, allowing them to perform tasks based on the user’s gestures.

- Sign language recognition: Gesture recognition can be used to recognize and translate sign language into spoken or written language, helping people who are deaf or hard of hearing communicate with others.

- Automotive: Gesture recognition can be used in cars to control functions such as the radio, air conditioning, and navigation system.

- Healthcare: Gesture recognition can be used in the rehabilitation of patients with physical disabilities.
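To make the human-computer interaction case concrete, here is a minimal Python sketch of swipe detection. It assumes an upstream hand tracker already reports the hand's center as normalized (x, y) screen coordinates in each frame; the function name and the 0.2 distance threshold are hypothetical choices for illustration, not part of any particular product or library.

```python
from typing import List, Optional, Tuple

# Hypothetical input: normalized (x, y) hand-center positions, y grows downward.
Point = Tuple[float, float]


def classify_swipe(track: List[Point], min_distance: float = 0.2) -> Optional[str]:
    """Return a swipe direction for a hand trajectory, or None if it barely moved."""
    if len(track) < 2:
        return None
    # Net displacement from the first to the last tracked position.
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    # Small movements are treated as jitter rather than a swipe.
    if max(abs(dx), abs(dy)) < min_distance:
        return None
    # The dominant axis decides between a horizontal and a vertical swipe.
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "swipe_down" if dy > 0 else "swipe_up"


print(classify_swipe([(0.1, 0.5), (0.4, 0.52), (0.8, 0.55)]))  # swipe_right
print(classify_swipe([(0.5, 0.5), (0.55, 0.51)]))  # None
```

A real system would likely add timing limits and a learned model, but comparing net horizontal and vertical movement is the core of this simple rule.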

Gesture Recognition and Detection Technologies

Sensor-Based Hand Gesture Recognition

A sensor-based gesture recognition system captures and analyzes human gestures with sensors such as cameras, infrared sensors, and accelerometers. These sensors record the movement and position of a person’s body or limbs, and a recognition algorithm then uses this data to identify specific gestures.
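As an illustration of the accelerometer case, the sketch below summarizes a fixed window of three-axis readings with simple statistics and labels it with a nearest-centroid classifier. This is a deliberately simple stand-in for the machine learning models real systems use; the feature choice, class name, and gesture labels are assumptions made for this example.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one (ax, ay, az) accelerometer reading


def features(window: Sequence[Sample]) -> List[float]:
    """Summarize a window as per-axis mean and population standard deviation."""
    axes = list(zip(*window))  # three tuples: all x, all y, all z values
    return [mean(a) for a in axes] + [pstdev(a) for a in axes]


class NearestCentroid:
    """Assign a window to the gesture whose mean feature vector is closest."""

    def fit(self, windows: List[Sequence[Sample]], labels: List[str]) -> "NearestCentroid":
        grouped: Dict[str, List[List[float]]] = {}
        for window, label in zip(windows, labels):
            grouped.setdefault(label, []).append(features(window))
        # Each centroid is the element-wise mean of that gesture's feature vectors.
        self.centroids = {
            label: [mean(col) for col in zip(*vectors)] for label, vectors in grouped.items()
        }
        return self

    def predict(self, window: Sequence[Sample]) -> str:
        f = features(window)
        return min(self.centroids, key=lambda label: math.dist(f, self.centroids[label]))


# Toy training data (hypothetical): a device at rest vs. shaken left and right.
rest = [(0.0, 0.0, 9.8)] * 10
shake = [(3.0, 0.0, 9.8), (-3.0, 0.0, 9.8)] * 5
clf = NearestCentroid().fit([rest, shake], ["rest", "shake"])
print(clf.predict([(2.5, 0.1, 9.7), (-2.8, 0.0, 9.9)] * 4))  # shake
```

The standard deviation along each axis is what separates the two toy gestures here: both have the same average orientation, but shaking produces much larger spread on the x axis.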

Vision-Based Hand Gesture Recognition

A vision-based gesture recognition system uses cameras or other visual sensors to capture and interpret gestures. The cameras capture images or video of the user’s gestures, and the system applies computer vision and machine learning techniques to analyze this footage and identify the gestures.

A vision-based gesture recognition system typically includes several key components:

- Visual sensor: captures the images or video.

- Pre-processing module: prepares the data for analysis.

- Feature extraction module: extracts relevant information from the data.

- Classification module: uses machine learning algorithms to identify the gestures.

- Post-processing module: takes the output of the classification module and maps it to a specific action or command.
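These components can be chained into a small pipeline. The Python sketch below assumes an upstream hand detector (not shown) already turns each captured frame into seven 2D landmarks; the landmark layout, the template values, and all function and class names are hypothetical. Pre-processing normalizes position and scale, feature extraction measures fingertip distances, classification picks the nearest template, and post-processing smooths labels over recent frames before mapping them to commands.

```python
import math
from collections import Counter, deque
from typing import Deque, Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]
# Hypothetical per-frame input from an upstream hand detector (not shown):
# [wrist, middle-finger knuckle, thumb tip, index tip, middle tip, ring tip, pinky tip]


def preprocess(landmarks: Sequence[Point]) -> List[Point]:
    """Pre-processing: put the wrist at the origin and measure in palm lengths."""
    wx, wy = landmarks[0]
    shifted = [(x - wx, y - wy) for x, y in landmarks]
    palm = math.hypot(*shifted[1]) or 1.0  # wrist-to-knuckle distance sets the scale
    return [(x / palm, y / palm) for x, y in shifted]


def extract_features(points: Sequence[Point]) -> List[float]:
    """Feature extraction: distance of each fingertip from the wrist."""
    return [math.hypot(x, y) for x, y in points[2:]]


# Illustrative templates (thumb to pinky), not values measured from any dataset.
TEMPLATES: Dict[str, List[float]] = {
    "open_palm": [1.6, 1.9, 2.0, 1.9, 1.7],
    "fist": [0.9, 0.9, 0.9, 0.9, 0.9],
    "point": [0.9, 1.9, 0.9, 0.9, 0.9],
}


def classify(features: List[float]) -> str:
    """Classification: the template closest in Euclidean distance wins."""
    return min(TEMPLATES, key=lambda g: math.dist(features, TEMPLATES[g]))


class PostProcessor:
    """Post-processing: majority vote over recent frames, then map label to command."""

    def __init__(self, commands: Dict[str, str], window: int = 5, min_votes: int = 3):
        self.commands = commands
        self.min_votes = min_votes
        self.history: Deque[str] = deque(maxlen=window)

    def update(self, label: str) -> Optional[str]:
        self.history.append(label)
        winner, votes = Counter(self.history).most_common(1)[0]
        return self.commands.get(winner) if votes >= self.min_votes else None


def run_pipeline(frames: Sequence[Sequence[Point]], post: PostProcessor) -> List[Optional[str]]:
    """Run every captured frame through all four stages."""
    return [post.update(classify(extract_features(preprocess(f)))) for f in frames]


fist_frame = [(0.5, 0.9), (0.5, 0.7)] + [(0.5, 0.72)] * 5
palm_frame = [(0.5, 0.9), (0.5, 0.7)] + [(0.5, 0.52)] * 5
post = PostProcessor({"fist": "pause", "open_palm": "play"})
# The single open-palm frame is outvoted, so no "play" command fires.
print(run_pipeline([fist_frame, fist_frame, palm_frame, fist_frame, fist_frame], post))
# [None, None, None, 'pause', 'pause']
```

Because the post-processor only emits a command once a label wins a majority of recent frames, a single misclassified frame does not trigger an action, at the cost of a short delay before the first command.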

7 Gesture Recognition and Detection Datasets

EgoGesture

EgoGesture is a large-scale dataset for vision-based, egocentric hand gesture recognition, which contains over 2,000 videos and almost 3 million frames of hand gestures collected from 50 participants. The data were collected using a head-mounted camera worn by the participants, and the images were annotated with the type of hand gesture being performed. The dataset is designed to train and evaluate various vision-based hand gesture recognition models, including both single-frame and temporal models.
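Temporal models usually expect a fixed number of frames per sample, while gesture videos vary in length. One common way to bridge that gap is uniform sampling, sketched below in Python. The function name and the middle-of-segment rule are assumptions for illustration, not the preprocessing used by the EgoGesture authors.

```python
from typing import List


def sample_clip_indices(num_frames: int, clip_len: int) -> List[int]:
    """Pick `clip_len` frame indices spread evenly over a video of `num_frames` frames."""
    if num_frames <= 0 or clip_len <= 0:
        raise ValueError("num_frames and clip_len must be positive")
    # Split the video into clip_len equal segments and take the middle frame of each;
    # short videos simply repeat frames so every clip has the same length.
    return [min(num_frames - 1, int((i + 0.5) * num_frames / clip_len)) for i in range(clip_len)]


print(sample_clip_indices(10, 5))  # [1, 3, 5, 7, 9]
print(sample_clip_indices(3, 6))   # [0, 0, 1, 1, 2, 2]
```

A single-frame model can skip this step entirely, which is one reason the two model families are trained and evaluated differently on the same videos.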

NVGesture

NVGesture is suited to touchless in-vehicle control projects. It contains over 1,500 dynamic gestures across 25 categories; over 1,000 of these samples are for training and the rest are for testing. The videos were recorded in three modalities: RGB, infrared, and depth.
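With three modalities available, one straightforward option is late fusion: train a separate classifier on each modality and average their per-class scores. The sketch below assumes each model already outputs a probability vector over the same classes; the modality keys, function names, and toy three-class scores are hypothetical (the dataset itself has 25 classes).

```python
from typing import Dict, List, Optional


def late_fusion(
    scores: Dict[str, List[float]], weights: Optional[Dict[str, float]] = None
) -> List[float]:
    """Weighted average of per-class scores from one classifier per modality."""
    if weights is None:
        weights = {modality: 1.0 for modality in scores}
    total = sum(weights[m] for m in scores)
    num_classes = len(next(iter(scores.values())))
    fused = [0.0] * num_classes
    for modality, class_scores in scores.items():
        if len(class_scores) != num_classes:
            raise ValueError(f"{modality}: expected {num_classes} scores")
        for c, s in enumerate(class_scores):
            fused[c] += weights[modality] * s / total
    return fused


def predict_class(scores: Dict[str, List[float]], weights: Optional[Dict[str, float]] = None) -> int:
    """Return the index of the class with the highest fused score."""
    fused = late_fusion(scores, weights)
    return max(range(len(fused)), key=fused.__getitem__)


# Toy three-class scores (hypothetical); RGB alone would pick class 0.
scores = {
    "rgb": [0.5, 0.3, 0.2],
    "ir": [0.2, 0.6, 0.2],
    "depth": [0.3, 0.5, 0.2],
}
print(predict_class(scores))  # 1
```

The optional weights let one modality count for more, for instance if it proves more reliable under a particular lighting condition; equal weights reduce this to a plain average.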

IPN Hand

This dataset, created by Gibran Benitez-Garcia and colleagues, contains over 4,000 hand gesture instances and more than 800,000 frames collected from 50 participants with RGB cameras. Each gesture instance is labeled with its class, and the dataset covers 13 different gestures for touchless screen interaction. The dataset is publicly available.

HaGRID

The HAnd Gesture Recognition Image Dataset (HaGRID) contains over 500,000 full HD images across 18 gesture classes, plus over 120,000 samples labeled as "no gesture". Most of the data (92%) is for training, with the rest for testing.

The images show over 30,000 individuals, aged 18 to 65, in a variety of scenes. Most were captured indoors under varying lighting conditions, including extreme contrasts, such as a subject standing in front of a window.

VIVA

Vision for Intelligent Vehicles and Applications (VIVA) is a multimodal gesture dataset designed to challenge machine learning models. It introduces difficult conditions, such as busy backgrounds, volatile lighting, and occlusion. The goal is to enable autonomous driving systems to identify human positions and gestures in naturalistic settings.

Its more than 800 videos, captured with a Microsoft Kinect, show eight participants performing 19 dynamic gestures inside a car.
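With only eight participants, a model can look accurate simply because the same people appear in both training and test data. One common safeguard, assumed here rather than taken from the VIVA evaluation protocol, is leave-one-subject-out cross-validation: each fold holds out every video from one participant. The function name and subject IDs below are hypothetical.

```python
from typing import Iterator, List, Sequence, Tuple


def leave_one_subject_out(
    subject_ids: Sequence[str],
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


# Hypothetical subject ID for each recorded video, in dataset order.
subjects = ["p1", "p1", "p2", "p3", "p2"]
for subject, train, test in leave_one_subject_out(subjects):
    print(subject, train, test)
# p1 [2, 3, 4] [0, 1]
# p2 [0, 1, 3] [2, 4]
# p3 [0, 1, 2, 4] [3]
```

Averaging accuracy over all folds then estimates how well the model generalizes to a person it has never seen.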

OREBA (Objectively Recognizing Eating Behavior and Associated Intake)

Objectively Recognizing Eating Behavior and Associated Intake (OREBA) is a dataset for automatic intake gesture detection, which is used in automatic diet monitoring. The data was captured using multiple sensors, including video cameras and inertial measurement units (IMUs).

The OREBA dataset provides multi-sensor recordings of group intake events, allowing researchers to build detection models for food intake gestures. It includes two scenarios, one with individual dishes and one with shared dishes, with over 100 participants.
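Detection models for this kind of data commonly produce a per-frame probability that an intake gesture is under way, which then has to be converted into discrete events. The sketch below shows one simple post-processing rule, chosen for illustration rather than taken from the OREBA work: each contiguous run of frames at or above a threshold counts as one gesture, located at its highest-probability frame. The function name, the 0.5 threshold, and the probabilities are hypothetical.

```python
from typing import List, Optional, Sequence


def detect_intake_events(probs: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Return one frame index per detected gesture: the peak of each run above threshold."""
    values = list(probs)
    events: List[int] = []
    run_start: Optional[int] = None
    # A trailing sentinel below any finite threshold closes a run that reaches the last frame.
    for i, p in enumerate(values + [float("-inf")]):
        if p >= threshold and run_start is None:
            run_start = i
        elif p < threshold and run_start is not None:
            events.append(max(range(run_start, i), key=values.__getitem__))
            run_start = None
    return events


# Hypothetical per-frame probabilities from an intake-gesture model.
frame_probs = [0.1, 0.6, 0.9, 0.7, 0.2, 0.3, 0.8, 0.55]
print(detect_intake_events(frame_probs))  # [2, 6]
```

Raising the threshold trades missed gestures for fewer false detections; reporting the peak frame gives each event a single timestamp that can be compared against annotated intake times.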

MlGesture

The MlGesture dataset for gesture recognition contains more than 1,300 videos of hand gestures performed by 24 individuals. It features nine distinct hand gestures, captured in a vehicle with five types of sensors from two viewpoints. One cluster of sensors with five cameras was mounted on the dashboard in front of the driver, while another cluster was mounted on the car’s ceiling, looking down at the driver.