Highlights from ECCV 2022, Part I

The European Conference on Computer Vision (ECCV) 2022 did not disappoint. The 17th rendition of ECCV was jam packed with discoveries in the different facets of computer vision, ranging from generative adversarial networks (GANS) to visual transformers, from object detection to scene reconstruction.

From the treasure trove of papers, we handpicked six exciting papers from ECCV for this blog post. Here are the first three:

1. DepthGAN: 3D-Aware Indoor Scene Synthesis with Depth Priors

Indoor scene modeling is a challenging feat. Due to the high variability of room layout and objects inside the room, existing GAN-based approaches could not adequately generate indoor scenes with high fidelity.

The authors of the paper 3D-Aware Indoor Scene Synthesis with Depth Priors recognized that the problem is due to the lack of intrinsic structure between the scenes. They argued that 2D images are not sufficient to guide the model with 3D geometry, and thus proposed the use of depth as a 3D prior into 2D GANs.

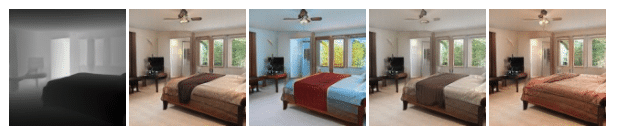

Figure 1: DepthGAN can generate high-fidelity indoor scenes (bottom row) with the corresponding depth (top row) (Source)

To that end, the authors redesigned the objective functions of the generator and the discriminator of a GAN. Specifically, they propose a dual-path generator, where one path is programmed to produce the image, while another generates the depth. Also, the authors propose the discriminator to distinguish between not only real and synthesized samples, but also predict the depth of an input image. In this way, the discriminator learns the spatial layout and guides the generator to learn an appropriate depth condition.

The approach, dubbed DepthGAN, achieves outstanding results on benchmarks. In particular, the performance of DepthGAN was compared with state-of-the-art methods like HoloGAN, GRAF, GIRAFFE, and pi-GAN on the LSUN bedroom and kitchen datasets. Unlike existing methods, DepthGAN could generate photorealistic images with reasonable geometrics. It was also found that the use of the dual-path generator results in the disentanglement of the geometry (Figure 2) and the appearance (Figure 3) of the rooms generated.

Figure 2. Conditioned on the same appearance style, DepthGAN synthesizes rooms of different geometries. (Source)

Figure 3. Conditioned on the same depth image, DepthGAN can synthesize rooms of different appearances. (Source)

2. Make-A-Scene: Scene-Based Text-to-Image Generation with Human Priors

The capability of generating images from text has grown significantly with recent advancements in large-scale models. Unfortunately, such methods often lack controllability and result in images of low quality and resolution. In particular, many existing models only take in texts as the only input, which makes controlling certain aspects of the image (like structure, form, or arrangement) difficult.

Start Generating Synthetic Data with Our Free Trial. Try Today!

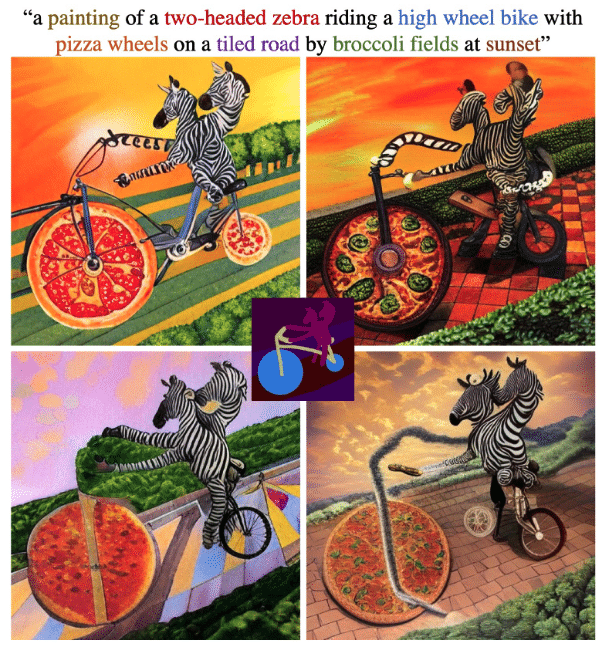

Figure 4. Samples of generated images from Make-A-Scene, given a text prompt (top) and a scene input (middle image)

Thus, the authors of the paper, Make-a-Scene introduced a novel method to address these gaps while attaining state-of-the-art results (Figure 4). In this method, the authors introduce a new type of control, a scene layout, that is complementary to text. By conditioning over the scene layout, the method produces images of higher structural consistency and quality. Moreover, the authors also improved the tokenization process by emphasizing aspects that are more important to humans, like faces. The result is that Make-A-Scene can generate high-fidelity and high-quality images with a resolution of 512×512 pixels.

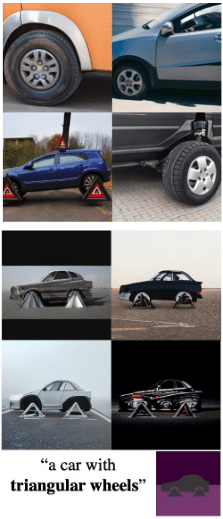

Further, Make-A-Scene is also able to overcome bizarre, out-of-distribution text prompts that previous methods like GLIDE could not. By introducing a simple sketch as an input, the model can generate unusual objects and scenarios (Figure 5).

Figure 5. When provided with a sketch of a car with triangular wheels (bottom right), Make-A-Scene successfully generated the unusual car, unlike GLIDE which failed to do so. (Source)

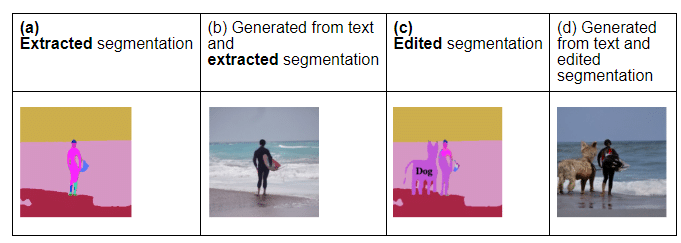

Beyond that, Make-A-Scene is also able to edit scenes (Figure 6). Given an input text and the segmentations from an input image, Make-A-Scene can be used to regenerate the image. For example, given a prompt and the extracted segmentation (Figure 6A), Make-A-Scene could generate the required image (Figure 6B). Given the same prompt and an edited segmentation (Figure 6C), the generated image now contains new content that contains a dog (Figure 6D).

Figure 6. Given the text prompt “a man in a wetsuit with a surfboard standing on a beach”, Make-A-Scene can be used to edit the image to include a dog. (Source)

3. Physically-Based Editing of Indoor Scene Lighting from a Single Image

The task of editing the lighting of an indoor scene from a single image is challenging. To do so, one has to attribute the effects of visible and invisible light sources on various objects. This requires sophisticated modeling of light and the disentanglement of HDR lighting from material and geometry with an LDR image of an indoor scene.

To tackle this problem, researchers from Adobe, UC San Diego, and Carnegie Mellon University proposed Physically-Based Editing of Indoor Scene Lighting from a Single Image. Such a novel approach allows the lighting of an indoor scene from one LDR image to be edited in a globally consistent manner.

The framework first estimates the physically-based light source parameters for lights that are in or out of the image. It then renders the contributions and interreflections of the light sources through a neural rendering framework.

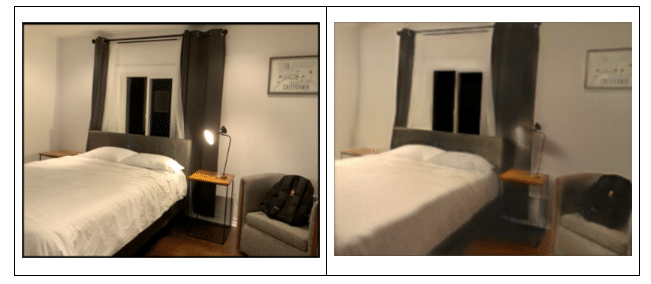

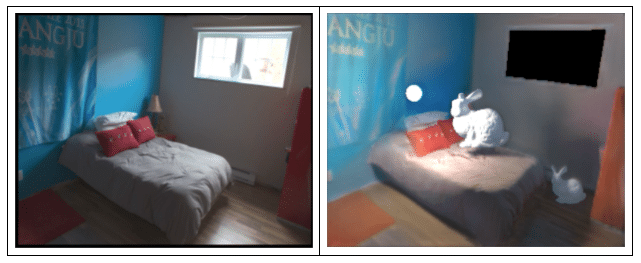

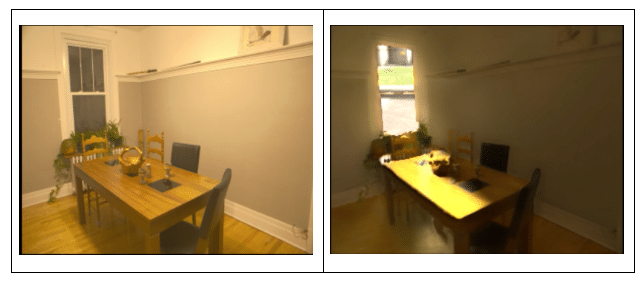

The results are photorealistic images that resemble the ground truth. The framework can switch on or off light sources in an image (Figure 7). Not only that, it can add objects at any location in the room and necessary adjustments to the shadows and highlights in the room (Figure 8). The framework can also add virtual light sources (like a lamp) or even open a window to let light into the room (Figure 9).

Figure 7. When the method is used to switch off a light source present in the original image (left), the image on the right is obtained.

Figure 8. Given the original image (left), the method can switch off a light source and add an object (right).

Figure 9. Given the original image (left), the proposed method can add a virtual window with high fidelity (right).

Stay tuned for part II when we review 3 more papers from ECCV 2022!