Solving Autonomous Driving at Scale

For the fourth episode of his podcast, Unboxing AI, Datagen CTO and co-founder Gil Elbaz sat down with Vijay Badrinarayanan to talk about Wayve’s end-to-end machine learning approach to self-driving, and more.

Vijay Badrinarayanan is VP of AI at Wayve, a company pioneering AI technology that enables autonomous vehicles to drive in complex urban environments. He has been at the forefront of deep learning and artificial intelligence (AI) research and product development since the inception of the current, deep-learning-driven era of AI. His joint research on semantic segmentation at the University of Cambridge with Alex Kendall, CEO of Wayve, is among the most highly cited publications in deep learning. As Director of Deep Learning and Artificial Intelligence at Magic Leap Inc. in California, he led R&D teams that delivered first-of-their-kind, deep-neural-network-driven products for power-constrained mixed reality headsets. As VP of AI, Vijay aims to deepen Wayve’s investment in deep learning to develop the end-to-end learnt brains behind Wayve’s self-driving technology. He is building a vision and learning team in Mountain View, CA, focused on active research topics such as representation learning, simulation intelligence, and combined vision-and-language models. The aim is to make a meaningful product impact and to bring this cutting-edge approach to AVs to market.

This transcript has been edited for clarity and length.

Listen to the full podcast here.


AV 1.0: First-Gen Autonomy

Vijay: AV 1.0 is probably the first generation of autonomous vehicles on the road. Today I’m extremely happy to see many amazing trials happening here and in many other parts of the world. It’s a massive achievement, and massive kudos to the teams behind it.

It’s been a hard slog for over a decade to get to this point. I think AV 1.0 is really predicated on getting perception to a very high degree of accuracy, and then handling the rest of it: prediction and planning. Those are really the three main components of any of these AV stacks.

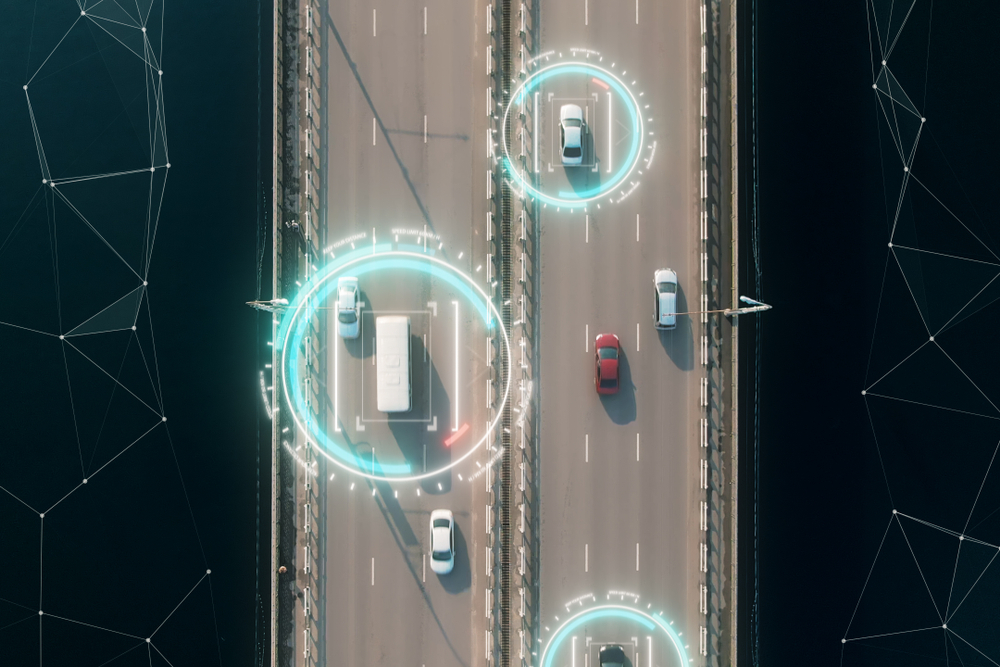

Perception is really a labeling problem. You have a stream of sensor data coming from multiple sensors: radars, LiDARs, or RGB cameras.

How do you identify the key agents and the static structures in a scene? For example, you want to know where the lanes and lane markings are, and where the traffic lights, cars, other vehicles, pedestrians, and trees are. It’s the classic problem of detection and segmentation.

Prediction is really about understanding the trajectories of moving objects. For instance, you want to know where a given agent will be in the next few seconds, and plan your trajectory based on that. By “your trajectory,” I mean the trajectory of the ego vehicle, the autonomous vehicle itself.
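The following is an illustration of the prediction step. It is our own sketch and not Wayve’s code. It implements the simplest possible predictor, a constant-velocity model that extrapolates an agent’s last observed velocity over a short horizon. Production stacks use learned, multi-modal predictors. The function name `predict_constant_velocity`, the 0.5 s time step and the 3 s horizon are hypothetical choices.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def predict_constant_velocity(
    history: List[Point], dt: float = 0.5, horizon_s: float = 3.0
) -> List[Point]:
    """Extrapolate an agent's future (x, y) positions, assuming its most
    recent velocity stays constant over the prediction horizon.

    history: past positions in metres, oldest first, sampled every dt seconds.
    """
    if len(history) < 2:
        raise ValueError("need at least two past positions to estimate velocity")
    (x0, y0), (x1, y1) = history[-2], history[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt  # metres per second
    steps = round(horizon_s / dt)
    return [(x1 + vx * dt * k, y1 + vy * dt * k) for k in range(1, steps + 1)]


# A pedestrian who moved 1 m along the x-axis in the last 0.5 s.
future = predict_constant_velocity([(0.0, 0.0), (1.0, 0.0)])
print(future[:2])  # [(2.0, 0.0), (3.0, 0.0)]
```

Replacing this with a learned model changes the predictor but not the interface. The planner still receives a list of future positions for each agent.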

Planning, of course, takes all of this information from perception and prediction and comes up with the next trajectory, or several candidate trajectories, which a controller then executes.
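Here is a toy version of the planning step, built on our own simplifying assumptions rather than on any production planner. The planner receives several candidate ego trajectories and the predicted positions of other agents. It discards any candidate that comes within a safety margin of an agent at the same time step. From the remaining candidates, it picks the one that makes the most forward progress. The 2 m margin, the progress measure (final x-coordinate, assuming the ego vehicle drives along +x) and all names are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def min_clearance(ego: List[Point], agents: List[List[Point]]) -> float:
    """Smallest distance between the ego vehicle and any agent at matching time steps."""
    best = math.inf
    for agent in agents:
        for (ex, ey), (ax, ay) in zip(ego, agent):
            best = min(best, math.hypot(ex - ax, ey - ay))
    return best


def choose_trajectory(
    candidates: Dict[str, List[Point]],
    predicted_agents: List[List[Point]],
    safety_margin_m: float = 2.0,
) -> Optional[str]:
    """Return the safe candidate with the most forward progress, or None if
    every candidate violates the safety margin."""
    safe = {
        name: traj
        for name, traj in candidates.items()
        if min_clearance(traj, predicted_agents) >= safety_margin_m
    }
    if not safe:
        return None  # a real stack would fall back to, e.g., an emergency stop
    # Progress = x-coordinate reached at the end of the horizon (ego drives along +x).
    return max(safe, key=lambda name: safe[name][-1][0])


candidates = {
    "keep_lane": [(2.0, 0.0), (4.0, 0.0), (6.0, 0.0)],
    "nudge_left": [(2.0, 1.0), (4.0, 2.5), (5.5, 3.5)],
    "slow_down": [(1.0, 0.0), (1.5, 0.0), (2.0, 0.0)],
}
# One agent predicted to cross the ego lane near x = 4 m.
agents = [[(4.0, 3.0), (4.0, 1.0), (4.0, -1.0)]]
print(choose_trajectory(candidates, agents))  # slow_down
```

The chosen trajectory would then go to a controller for execution. That stage is outside this sketch.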

The key characteristics of AV 1.0 are that it’s really hardware-intensive and driven by perception metrics. The end task is really planning and control, but in practice you’re optimizing for a different task, perception. The justification is that you need to see an object quickly enough to have time to react. That creates these modular stacks.

So there are a bunch of engineers building lots of different neural networks for perception. Then there’s a prediction group, and then a whole planning group. Obviously you need to stitch them all together, so there are interfaces. As you change one component, you need to rework those interfaces, right?

So there’s just more engineering effort. The other issue is how you propagate the uncertainty of inference downstream, from perception all the way to planning. It’s not very clear how to do that.
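The uncertainty-propagation issue can be shown with a small illustration of our own. Suppose the perception module passes the planner only the detections above a fixed confidence threshold. A low-confidence detection then simply vanishes at the interface. If the probability is passed downstream instead, the planner can still weigh it. The 0.5 threshold, the toy risk formula and the braking rule are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str
    distance_m: float  # distance ahead of the ego vehicle
    confidence: float  # detector's probability that the object is real


def thresholded_interface(dets: List[Detection], threshold: float = 0.5) -> List[Detection]:
    """Classic modular interface: downstream modules only see 'confirmed' objects."""
    return [d for d in dets if d.confidence >= threshold]


def expected_risk(dets: List[Detection]) -> float:
    """Toy risk score: closer and more probable objects contribute more risk."""
    return sum(d.confidence / max(d.distance_m, 1.0) for d in dets)


def should_brake(dets: List[Detection], risk_limit: float = 0.05) -> bool:
    return expected_risk(dets) > risk_limit


scene = [Detection("pedestrian", distance_m=6.0, confidence=0.4)]

# Hard threshold: the uncertain pedestrian never reaches the planner.
print(should_brake(thresholded_interface(scene)))  # False
# Propagated probability: the planner can still hedge against it.
print(should_brake(scene))  # True
```

A real system would need calibrated probabilities. The sketch only shows what a hard interface throws away.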

In general, you get caught up in a microcosm of techniques to improve perception: lots of loss-function engineering, architecture engineering, and so on. These are all issues that motivate AV 2.0.

Wayve’s AV 2.0 Approach

Vijay: AV 2.0 is a bold approach. Two highlights are a very lean sensor stack, just cameras and radars, and really unlocking the potential of machine learning. You don’t have to rely on crutches like localizing to an HD map, but instead make decisions based on how the scene is actually evolving. And, of course, you train on enormous amounts of data.

Fundamentally, AV 2.0 is being pioneered by Wayve. It is driven by the strong belief that, in the future, it will indeed be possible to train very robust, high-performing, and safe neural networks. Those networks can control the autonomous vehicle without being so reliant on the specific geometry of the vehicle itself. So the approach could be applied to heterogeneous vehicles with different geometries, whether cars, vans, or trucks.

Now, there are two principles we work on within AV 2.0. One is how you plan. We spoke earlier about the modular stack of perception, prediction, and planning in AV 1.0.

In AV 2.0, we’re really thinking about how to do this in an end-to-end way. Images from multiple cameras go into a neural network, and out come control decisions: how fast you should be going and how much you should be turning. These are the kinds of control decisions we make.
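The next example is a minimal sketch of the end-to-end interface, not Wayve’s model: pixels from several cameras go in and two control values come out. It is a toy two-layer network with random initial weights, written in plain Python so it runs without dependencies. The layer sizes, the tanh/sigmoid output squashing, the sign convention for steering and the name `EndToEndPolicy` are all our assumptions. A real driving model would be a large learned network trained on driving data.

```python
import math
import random
from typing import List, Tuple


class EndToEndPolicy:
    """Toy camera-to-control network: concatenated camera pixels ->
    hidden ReLU layer -> (steering in [-1, 1], speed in [0, max_speed])."""

    def __init__(self, n_inputs: int, n_hidden: int = 16, max_speed: float = 15.0, seed: int = 0):
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0.0, 1.0 / math.sqrt(n_inputs)) for _ in range(n_inputs)]
                   for _ in range(n_hidden)]
        self.w2 = [[rng.gauss(0.0, 1.0 / math.sqrt(n_hidden)) for _ in range(n_hidden)]
                   for _ in range(2)]
        self.max_speed = max_speed

    def __call__(self, cameras: List[List[float]]) -> Tuple[float, float]:
        x = [p for cam in cameras for p in cam]  # fuse all cameras by concatenation
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in self.w1]  # ReLU layer
        raw_steer, raw_speed = (sum(w * hi for w, hi in zip(row, h)) for row in self.w2)
        steering = math.tanh(raw_steer)  # assumed convention: -1 full left, +1 full right
        speed = self.max_speed / (1.0 + math.exp(-raw_speed))  # sigmoid scaled to m/s
        return steering, speed


# Three hypothetical cameras, each flattened to 8 grayscale pixel values.
rng = random.Random(1)
frames = [[rng.random() for _ in range(8)] for _ in range(3)]
policy = EndToEndPolicy(n_inputs=24)
steering, speed = policy(frames)
print(f"steering={steering:+.2f}, speed={speed:.1f} m/s")
```

The key contrast with the modular stack is that no hand-designed interface exists between perception and control. Every weight is trained against the driving objective.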

We develop many different techniques within this end-to-end planning approach. That’s where imitation learning, reinforcement learning, and other very new techniques come into play.
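Imitation learning in its simplest form, behavioural cloning, treats driving as supervised learning: fit the policy so that its action matches the human’s action in each recorded state. This sketch is our own. It fits a linear steering policy to synthetic (state, action) pairs using full-batch gradient descent on mean squared error. The two state features (lateral offset from the lane centre and heading error) and the synthetic "expert" are assumptions that stand in for real images and real human driving.

```python
import random
from typing import List, Tuple

State = Tuple[float, float]  # (lateral offset in m, heading error in rad)


def expert_steering(s: State) -> float:
    """Synthetic stand-in for a human driver: steer back toward the lane centre."""
    offset, heading = s
    return -0.5 * offset - 1.0 * heading


def behavioural_cloning(
    demos: List[Tuple[State, float]], lr: float = 0.5, epochs: int = 300
) -> List[float]:
    """Fit steering = w[0]*offset + w[1]*heading by full-batch gradient descent on MSE."""
    w = [0.0, 0.0]
    n = len(demos)
    for _ in range(epochs):
        grad = [0.0, 0.0]
        for (offset, heading), target in demos:
            error = w[0] * offset + w[1] * heading - target
            grad[0] += 2.0 * error * offset / n
            grad[1] += 2.0 * error * heading / n
        w = [w[0] - lr * grad[0], w[1] - lr * grad[1]]
    return w


rng = random.Random(0)
states = [(rng.uniform(-1.0, 1.0), rng.uniform(-0.5, 0.5)) for _ in range(200)]
demos = [(s, expert_steering(s)) for s in states]
weights = behavioural_cloning(demos)
print([round(x, 2) for x in weights])  # approximately [-0.5, -1.0]
```

Behavioural cloning alone is known to suffer from compounding errors. Once the policy drifts into states the expert never visited, its mistakes feed on each other. This is one motivation for also collecting on-policy data.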


Addressing the Long Tail Problem in AV

Vijay: The heart of the autonomous vehicle project is really how you tackle the long tail of scenarios. The long tail is the set of increasingly rare scenarios you could encounter. Examples include a child running out from behind a vehicle into the road, suddenly encountering roadworks with new signs, or completely different weather conditions. The long tail is whatever you can imagine could possibly happen on the road when you’re driving from point A to point B.

As we discussed, with Data-as-Code, or programming by data, you have to create this data. The way we do this is really a multi-step approach. The first step is that we created a hardware platform, meaning the sensors and the compute stack together, which we also supply to our partner fleets. Those fleets are constantly driving all around the UK, so we’re gathering a lot of data of scenes and also of actions: what a human does.

This is purely human-driven data. You can get a ton of it, and it really powers the training of our neural networks.

The other kind of data is what we call on-policy data, where a model is actually driving the vehicle. Human operators sit behind the wheel, watching out for any mistakes. As soon as the model makes one, they take over and correct it. This is also very valuable data, because it tells us what the model does not yet know how to do confidently. So we have expert data and on-policy data; these are the two data sources from which we bootstrap the training of our models.
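This on-policy loop resembles human-gated variants of DAgger from the interactive imitation-learning literature. The learned policy drives, and a safety driver takes over when it goes wrong. Only the takeover states, labelled with the driver’s corrective actions, are added to the training set alongside the expert data. The sketch is our own illustration on a one-dimensional lane-keeping toy, not Wayve’s pipeline. The dynamics, the takeover rule (offset beyond 0.5 m) and all function names are hypothetical.

```python
from typing import Callable, List, Tuple

Sample = Tuple[float, float]  # (lateral offset in m, steering action)


def collect_on_policy(
    policy: Callable[[float], float],
    expert: Callable[[float], float],
    start_offset: float,
    steps: int = 20,
    takeover_threshold: float = 0.5,
) -> List[Sample]:
    """Let the learned policy drive; when the car drifts too far from the lane
    centre, the safety driver takes over and only their corrections are recorded."""
    corrections: List[Sample] = []
    offset = start_offset
    for _ in range(steps):
        if abs(offset) > takeover_threshold:
            action = expert(offset)  # human takes over
            corrections.append((offset, action))  # a state the model handled badly
        else:
            action = policy(offset)  # model is driving
        offset += action + 0.1  # toy dynamics: constant drift of 0.1 m per step
    return corrections


def expert(offset: float) -> float:
    """Hypothetical safety driver who steers firmly back to the lane centre."""
    return -0.8 * offset


def weak_policy(offset: float) -> float:
    """Hypothetical under-trained model that under-steers, so the drift accumulates."""
    return -0.05 * offset


expert_data: List[Sample] = [(x / 10, expert(x / 10)) for x in range(-5, 6)]
on_policy_data = collect_on_policy(weak_policy, expert, start_offset=0.0)
training_set = expert_data + on_policy_data  # aggregate both sources for the next training round
print(len(on_policy_data), "takeover samples collected")
```

The takeover samples concentrate exactly on the states where the current model fails. That is why a small amount of this data can be so informative.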

The Future of AI and Advanced Approaches

Vijay: Resimulation is one of the big things. There are also very interesting connections between our planning approaches and simulation. Our methodology relies on approaches like imitation learning, where you need a lot of data from different viewpoints, what we call states and actions. States are, for example, images from the different positions the ego vehicle could be in. All of this can now actually be rendered using the latest techniques, such as ADOP or NeRFs (neural radiance fields). If you want to know what the car would see if it were placed in a different position on the road, you can actually get that.
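This example shows how a NeRF turns a learned scene into a new view. Each camera ray is sampled at several depths, and a network predicts a density σ and a colour c at each sample. The pixel colour is the alpha-composited sum C = Σ T_i (1 - exp(-σ_i δ_i)) c_i. Here T_i = exp(-Σ_{j<i} σ_j δ_j) is the transmittance accumulated so far, and δ_i is the spacing between samples. The sketch below implements only this compositing step, with hand-set densities and colours standing in for a trained network. It says nothing about ADOP, which takes a different, point-based approach.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def composite_ray(sigmas: Sequence[float], colors: Sequence[RGB], deltas: Sequence[float]) -> RGB:
    """NeRF-style volume rendering quadrature for a single camera ray.

    sigmas: volume density at each sample along the ray (near to far)
    colors: RGB colour at each sample, components in [0, 1]
    deltas: distance between consecutive samples
    """
    transmittance = 1.0  # T_i: probability the ray reaches sample i unoccluded
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # multiply in exp(-sigma * delta)
    return (pixel[0], pixel[1], pixel[2])


# Empty space, then a dense red surface, then a green surface hidden behind it.
sigmas = [0.0, 50.0, 50.0]
colors = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
deltas = [0.5, 0.5, 0.5]
print([round(c, 3) for c in composite_ray(sigmas, colors, deltas)])  # [1.0, 0.0, 0.0]
```

Rendering a ray from a shifted camera position only changes which samples the ray passes through. This is what lets a reconstructed scene supply states from positions the vehicle never actually occupied.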

These techniques are also beginning to power how we plan, that is, how we train our planners. So we are investing more deeply in two directions. One is adding realism, diversity, and dynamics to our simulators. The other is using resimulation for validation, for counterfactual analysis, and for actually training planners.

You want this end-to-end neural network to know many things about the world. It needs to know about the 3D structure of the world. It needs to know that objects are permanent: they don’t just vanish between frames. Motion is smooth, and geometry is consistent across poses. If you look at an object from different viewpoints, there’s a regularity to all of these things.
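Properties like smooth motion can be turned into training signals that need no labels. One hedged example, our choice and not something Wayve has described, is a smoothness prior. It penalises the squared second difference (a discrete acceleration) of an object’s tracked positions. A constant-velocity track costs nothing, while a track that jumps or teleports is penalised heavily. The function name and the 0.1 s sampling interval are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def smoothness_loss(track: List[Point], dt: float = 0.1) -> float:
    """Mean squared discrete acceleration of a 2-D track sampled every dt seconds.

    Zero for constant-velocity motion; large for jittery or teleporting tracks.
    """
    if len(track) < 3:
        return 0.0
    total = 0.0
    for (x0, y0), (x1, y1), (x2, y2) in zip(track, track[1:], track[2:]):
        ax = (x2 - 2.0 * x1 + x0) / dt**2  # second difference in x
        ay = (y2 - 2.0 * y1 + y0) / dt**2  # second difference in y
        total += ax * ax + ay * ay
    return total / (len(track) - 2)


steady = [(0.5 * t, 0.0) for t in range(10)]  # constant velocity along x
jumpy = steady[:5] + [(5.0, 3.0)] + steady[6:]  # one frame teleports off the path
print(smoothness_loss(steady), smoothness_loss(jumpy) > 1000)  # 0.0 True
```

This covers only the smoothness prior. Reconstruction and multi-view consistency objectives would be separate terms.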

So I’m really excited to see all of these different self-supervised reconstruction tasks emerging. It feels to me like we can use them to learn even better representations, on top of which planners can be trained very quickly.
