VOS: Learning What You Don’t Know

Motivation:

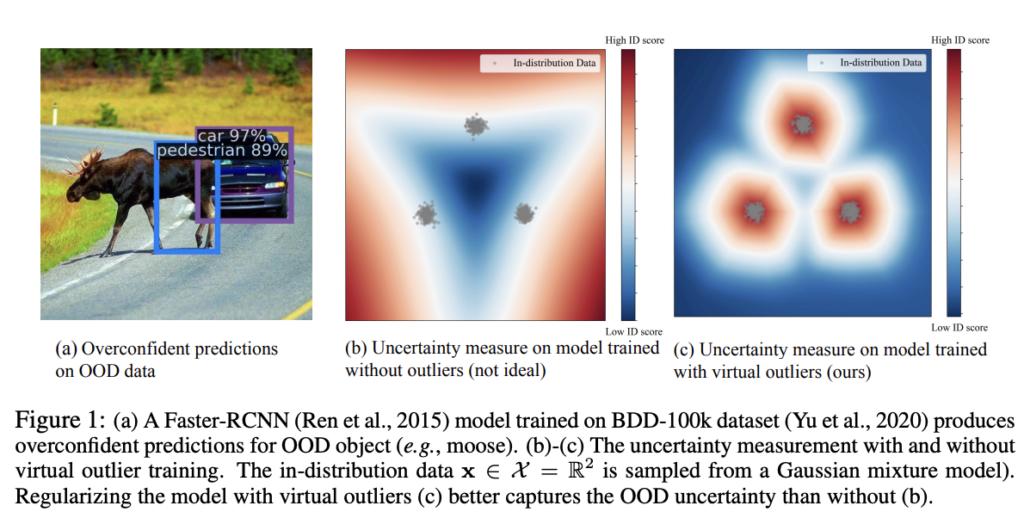

Safely deploying deep learning models in real-life scenarios requires accurate detection of out-of-distribution (OOD) data. Deep neural networks (DNNs) are usually trained under the assumption that the training and real-world data distributions coincide. Real-world tasks, however, often violate this assumption, leading to erroneous yet high-confidence predictions on OOD data. Simply put, the absence of OOD examples during training leaves the deployed model's behavior on OOD inputs unpredictable. This phenomenon is illustrated in Fig. 1, where a moose is mistakenly identified as a pedestrian with high confidence. Methods that can reliably identify OOD inputs are therefore vital for the safe deployment of DNN models in high-stakes applications such as healthcare and autonomous driving.

The paper develops an OOD detection mechanism that is trained during neural network optimization by constructing synthetic OOD samples. The authors propose to use these synthetic OOD samples to train the model to recognize OOD inputs while simultaneously optimizing it on an in-distribution (ID) task (classification and object detection).

Leveraging the developed OOD detection mechanism, we can specify and characterize edge cases and under-represented regions of the data. With this knowledge, we may be able to improve our coverage of these regions. For example, synthetic data can be generated to cover these problematic areas.

Main idea of the solution:

Previous approaches use generative models such as generative adversarial networks (GANs) to generate OOD samples in the image space. Such models are often difficult to train, and selecting an optimal training set can be challenging as well. To cope with these flaws, the authors propose to generate OOD samples in a more tractable low-dimensional feature space rather than in the original high-dimensional image space. Synthetic OOD samples are generated from low-likelihood regions of the feature space for every image category. Simply put, OOD samples are generated in the areas of the feature space located far away from the in-distribution samples of each class.

The network is then trained to distinguish between the generated OOD samples and the in-distribution samples while being simultaneously optimized to perform the ID task (classification and object detection). Note that the OOD detection task is framed as a binary classification problem performed in the feature space. Thus, in addition to a bounding box and a distribution of class scores, the neural net produces a so-called uncertainty score for every detected object in an input image. This uncertainty score takes small values for ID samples and larger values for OOD samples, which allows OOD samples to be detected effectively.

Technical Explanation:

Let’s discuss the procedure for generating synthetic outliers. As mentioned above, these outliers are constructed in the low-dimensional feature (representation) space. To obtain an image representation, an image is first fed into a backbone neural network. The result is then processed by a bounding box proposal generator to obtain a feature (representation) vector for every detected object.

Next, to generate outliers in the feature space, the feature distribution of the training set must be estimated. The feature distribution is assumed to be Gaussian (discussed in the next section) and is estimated separately for each class (category). Finally, synthetic outliers (OOD) are generated for each class in low-probability regions of the estimated class feature distribution. In simple terms, feature vectors located far away from the mean feature vector of a given category are sampled randomly to form synthetic outliers.
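The estimation and sampling steps above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `sample_virtual_outliers`, the parameters `n_candidates` and `n_keep`, the small ridge added to the covariance, and the rule "keep the candidates with the largest Mahalanobis distance" are all choices made for this example, and the features are toy data rather than detector outputs.

```python
import numpy as np

def sample_virtual_outliers(features, labels, n_candidates=10_000, n_keep=5, seed=0):
    """Return {class_id: (n_keep, d) array} of low-likelihood samples per class."""
    rng = np.random.default_rng(seed)
    d = features.shape[1]
    outliers = {}
    for c in np.unique(labels):
        x = features[labels == c]
        mean = x.mean(axis=0)
        # Per-class Gaussian fit; the ridge keeps the covariance invertible
        # (an assumption of this sketch).
        cov = np.cov(x, rowvar=False) + 1e-6 * np.eye(d)
        candidates = rng.multivariate_normal(mean, cov, size=n_candidates)
        # Squared Mahalanobis distance: larger means lower Gaussian likelihood.
        diff = candidates - mean
        maha = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
        # Keep only the least likely candidates as synthetic outliers.
        keep = np.argsort(maha)[-n_keep:]
        outliers[int(c)] = candidates[keep]
    return outliers

# Toy stand-in for object features: two well-separated 2-D classes.
toy_rng = np.random.default_rng(1)
features = np.vstack([toy_rng.normal(0.0, 1.0, (200, 2)),
                      toy_rng.normal(8.0, 1.0, (200, 2))])
labels = np.array([0] * 200 + [1] * 200)
virtual = sample_virtual_outliers(features, labels)
```

Drawing many candidates and keeping the least likely ones is one simple way to land in the low-probability region of each class Gaussian; how many candidates to draw and how many to keep are tuning choices.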

Assuming the clusters formed by the features of samples from all in-distribution categories are sufficiently separated, these generated outliers “correspond” to samples that do not belong to any ID category in the original pixel space. The DNN is then trained to distinguish between the outliers and the ID samples by assigning high uncertainty scores to the outliers and low scores to the ID samples. The uncertainty score of a sample can be viewed as the reciprocal of the sum of the probabilities that the sample belongs to each ID category. If this sum is low, the score is high and the sample is likely an outlier, whereas ID samples are characterized by a higher sum and therefore a lower score.
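One concrete reading of this score is sketched below, assuming the per-class evidence is the exponentiated class logit, so that the score becomes the logarithm of the reciprocal of the sum, i.e. a negative log-sum-exp. The function names, the toy logits, and the plain logistic loss applied directly to the score are assumptions of this sketch rather than the exact training objective.

```python
import math

def uncertainty_score(logits):
    """Negative log-sum-exp of class logits: log of 1 / sum_k exp(logit_k)."""
    m = max(logits)  # subtract the max for numerical stability
    return -(m + math.log(sum(math.exp(z - m) for z in logits)))

def softplus(t):
    # log(1 + exp(t)), computed without overflow
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def uncertainty_loss(id_scores, ood_scores):
    """Binary logistic loss that treats the score as the logit of 'is OOD'."""
    id_term = sum(softplus(s) for s in id_scores) / len(id_scores)
    ood_term = sum(softplus(-s) for s in ood_scores) / len(ood_scores)
    return id_term + ood_term

id_logits = [9.0, 0.5, -1.0]     # one class clearly dominates
ood_logits = [-3.0, -2.5, -3.5]  # no class responds strongly
s_id = uncertainty_score(id_logits)
s_ood = uncertainty_score(ood_logits)
loss = uncertainty_loss([s_id], [s_ood])
```

With these toy logits the ID sample receives the lower score and the outlier the higher one, so the loss is small; swapping the two groups makes it large, which is the signal that pushes the network to separate them.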

Possible Shortcomings/Insights of VOS:

The main flaw of the proposed method is the assumption that the feature vectors of every category follow a Gaussian distribution. Using this method when the assumption does not hold (e.g., when the distribution is multimodal) may lead to the generation of “false” synthetic outliers, that is, outliers that actually fall in regions occupied by ID features. Using “false” outliers to train the OOD detector can cause ID samples to be incorrectly identified as OOD, thereby degrading the performance of both the ID task and the OOD detector. A possible way to overcome this issue is to encourage the per-category feature distributions to be Gaussian. This can be achieved by adding to the loss function a distance between the feature distribution and a Gaussian distribution, such as the Kullback-Leibler divergence or the Earth Mover's distance.

An important aspect of the proposed method is the dimension of the feature space. If the dimension is too low, the feature space may not be representative enough, and the generated outliers will cover the OOD region inadequately. On the other hand, if the dimension is too high, a large number of synthetic outliers will be required to cover the entire OOD region. If too few outliers are generated, the boundary learned by the classifier won’t be good enough to distinguish ID samples from OOD samples.

Summary:

The proposed method is an efficient tool for detecting under-represented regions in the test data by learning rejection areas with respect to each category. Furthermore, the synthetic data generation process can be optimized by focusing on the problematic under-represented regions of the data distribution.

Final Note: Employing the VOS approach for a trained network without retraining?

If you already have a trained network, synthetic outliers can be generated with the proposed approach. Then another model can be trained to differentiate between ID samples and these generated outliers.
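A minimal sketch of this post-hoc variant is shown below, assuming features have already been extracted from the frozen network. Everything here is illustrative: the ID features and the outliers are toy stand-ins (a Gaussian blob and a noisy ring around it), the helper names `expand` and `prob_ood` are hypothetical, and the "other model" is a plain logistic regression on quadratic features trained with gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in for frozen-backbone features of ID samples (label 0).
id_feats = rng.normal(0.0, 1.0, size=(500, 2))
# Stand-in for synthetic outliers (label 1): a noisy ring far from the ID mean.
angles = rng.uniform(0.0, 2.0 * np.pi, size=500)
ood_feats = 5.0 * np.column_stack([np.cos(angles), np.sin(angles)])
ood_feats = ood_feats + rng.normal(0.0, 0.3, size=ood_feats.shape)

raw = np.vstack([id_feats, ood_feats])
y = np.concatenate([np.zeros(500), np.ones(500)])

def expand(x):
    # Add squared terms so a linear model can learn a closed boundary.
    return np.hstack([x, x ** 2])

# Standardize the expanded features to keep gradient descent well conditioned.
mu, sigma = expand(raw).mean(axis=0), expand(raw).std(axis=0)

def prob_ood(feats, w, b):
    z = (expand(feats) - mu) / sigma
    return 1.0 / (1.0 + np.exp(-(z @ w + b)))

w, b = np.zeros(4), 0.0
z_train = (expand(raw) - mu) / sigma
for _ in range(500):  # batch gradient descent on the logistic loss
    p = prob_ood(raw, w, b)
    w -= 0.5 * z_train.T @ (p - y) / len(y)
    b -= 0.5 * np.mean(p - y)

accuracy = np.mean((prob_ood(raw, w, b) > 0.5) == (y == 1))
```

Because the original network is never updated, its ID performance is unchanged; the trade-off is that the features were not shaped with OOD separation in mind, so the separate detector may need more capacity than this toy model.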