Why is my AI model not working?

This is the first in a three part series about what can causes deficiencies in AI models.

In this blog post, we will explore one of the common causes of model deficiency: annotation or label errors. Training an AI model requires large amounts of data and, in many cases, this data is collected and annotated by different people. This can lead to inconsistent labels and systematic errors, which can negatively impact the performance of the model. We’ll discuss examples of inconsistent labels, such as ambiguous cases and inconsistent training data, and systematic label errors, such as human biases in collecting or labeling data.

Let’s get started!

Let’s say we have a model trained on real data (it can also be an off-the-shelf pre-trained model) reaching a certain level of performance on our test set and we would like to further improve the model by adding data during the training phase. Where can we look to find how to improve the model?

Let’s review one of the common causes for model deficiency:

Annotations or Label Errors

Inconsistent labels

Labeling data accurately and consistently is very challenging. We usually need huge amounts of data and therefore, we split annotation tasks into subtasks sent to different annotators, leading to significant inconsistency.

In many annotation tasks, there are ambiguous cases which need to be carefully defined (e.g. what are the exact object boundaries, whether and how to annotate small objects, annotating partially occluded objects etc.). As we would expect, this inconsistent training data, fed into the neural network during training, will actually confuse the network and interfere with the learning process. If the network “sees” the same type of samples multiple times but each time with different labels, it will make it difficult to converge and generalize. Imagine a student is shown the same object twice but it’s defined as “cat” the first time and as “dog” the second time. It will be impossible for the student to generalize and understand what is the truth. See some real examples here.

Read Part 1 of the Big Book of Synthetic Data: Why is my model not working?

Systematic label errors

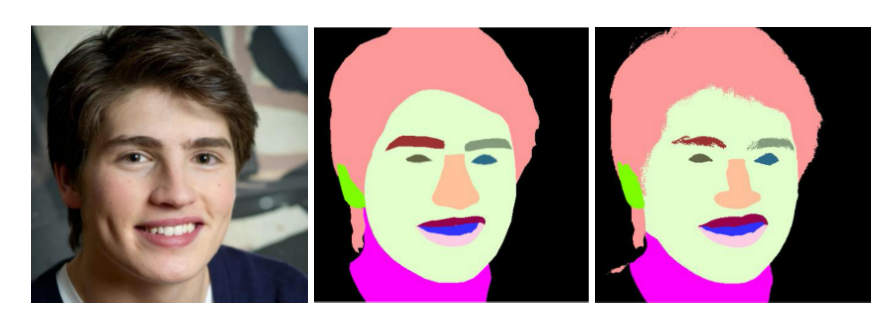

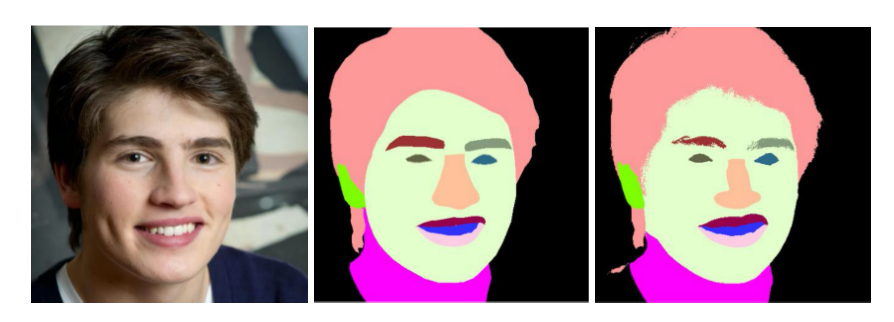

Sometimes there are systematic errors across the training set, meaning that consistent human biases are inserted when collecting or labeling data. These types of errors will be inherited by the model. This is very common in any fine-grained classification task. For example, if the annotator systematically identifies twins as the same person, the network will not be aware of having very similar people who are actually two different people. Another common example is related to labeling segments of complex objects like human hair. Annotating pixel-perfect hair segmentation is a prohibitively expensive task and therefore human annotators will provide a rough segmentation which best fits hair boundaries. When we use these annotations in training, naturally we’ll not be able to learn pixel accurate hair segmentation. In the example below, taken from the CelebA dataset, we see two different labeling methods. In the first one, the hair and eyebrow segments are roughly marked while in the second one we see the very fine details of the hair and eyebrows. Naturally, the pixel-accurate segmentation for hairy segments can only be achieved with pixel-accurate annotation.

A nice blog describing additional errors found on widely used large datasets can be found here.

To reduce this inconsistency, in some cases, the same data is sent to multiple annotators and a majority voting process is applied to decide the final annotation.

Read Part 1 of the Big Book of Synthetic Data: Why is my model not working?

Orly Zvitia is Datagen’s Director of Artificial Intelligence. She has over 15 years of experience in computer vision and machine learning both as a manager and a researcher. Orly has a track record of leading challenging computer vision projects starting from initial ideation to full productization for world leading corporates and startup companies. She works to bring Datagen’s synthetic data revolution to the world-wide computer vision community.