As Computer Vision Explodes, Data Collection Needs To Change, Too.

Nowadays, there’s a lot of talk about computer vision and its potential impact on a wide range of fields and applications. And it’s not just talk: spending on computer vision R&D is growing rapidly, at an estimated CAGR of 31.6%. The ability of cameras and computers not just to see but to understand the world around them can transform fields from IoT, smart cars, and smart cities to manufacturing.

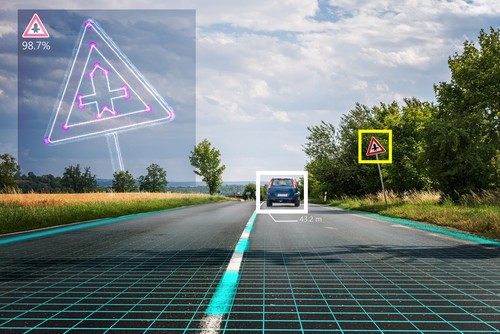

Imagine smart stores that understand what you’re grabbing from the shelf and calculate your purchases in the background. VR and AR technologies that respond intuitively to your body, without clumsy hand-held controllers. Systems that understand emotions and facial expressions, safety mechanisms that stop a car if someone is crossing the street, smart security systems that can tell when something is amiss. The potential applications are almost unlimited and will change the way we live.

Yet despite enormous advances in computer vision research and GPU power, transformative technology seems to be arriving slowly and facing plenty of serious challenges.

When we started Datagen, we began by asking: “Why? What is holding back progress in this field?” After conversations with engineers and researchers working on human-centered computer vision applications, a theme emerged. State-of-the-art algorithms and GPUs are just two of the three ingredients needed to bring this technology to market.

Without the third ingredient – data – these developments are destined to stall. Again and again, we heard that a lack of access to data is slowing the pace of development in computer vision. Teams simply don’t have enough high-quality data to train their growing neural networks.

Computer vision teams are spending too much time, money, and energy obtaining annotated datasets that, despite the effort required, are still deeply flawed. We call these datasets Manual Data: data points are captured from the real world and then, for the most part, annotated by hand. Here are some of Manual Data’s main problems:

Read our Survey on Synthetic Data: The Key to Production-Ready AI in 2022

Manual Data is slow

When you rely on how often events occur in the real world, or have to collect existing data from disparate sources with complicated privacy or access considerations, it can take a long time and a lot of resources to build a sufficiently large and representative dataset. Collecting the data alone can take months, and annotating it correctly takes even longer. For highly complex use cases and very specific training needs, the process can be so slow as to be infeasible. The alternative, staging scenarios with actors, adds a new level of logistical complexity that many computer vision teams are ill-equipped to handle. And as needs change and networks must be retrained, this slow process has to be repeated.

Manual Data is biased

High-level bias (over- or under-representation of key situations, items, or demographic groups) can limit the effectiveness of systems and is extremely hard to control when you are subject to resource and access limitations. Often you don’t discover these biases until you’ve collected your data or trained your system. Yet computer vision systems that lose accuracy for key population segments, environmental conditions, or situations cannot be put into production.

Manual Data cannot cover all existing variants and edge cases

To train algorithms correctly, we need to capture rare and potentially dangerous occurrences. For instance, training autonomous vehicles requires data containing near-accidents and driving in dangerous conditions. But these incidents don’t happen often enough to supply sufficient data, nor are they situations we can pay actors to recreate.

Manual Data is limited

Because annotations have to be added after data collection, usually by hand and at scale, the result is often inconsistent, slow to produce, and short of 100% ground truth. This is especially true for motion and video data, where annotations must be added frame by frame. Some data layers, such as detailed depth maps, are impossible to add by hand at all.

Furthermore, occlusions, complex poses, and blocked camera views can make accurate annotation impossible. The need for complex computer vision training data has grown sharply, but human annotators are not developing superpowers in tandem. As object detection has given way to semantic, instance, and panoptic segmentation, each successive level of annotation is more complex and time-consuming. New technologies are needed to keep up.

Datagen was founded to provide an alternative to the status quo. Let us explain.

Datagen creates high-quality, human-focused Simulated Data to fuel smart computer vision learning algorithms and unblock data bottlenecks. Our data is photorealistic, scalable, high-variance, unbiased, and has all kinds of superpowers. In other words, it’s a better, smarter alternative to Manual Data. When done well, Simulated Data can provide that third, missing ingredient to the computer vision puzzle and unleash the potential of this fast-growing field.

The promise of synthetic data has been clear since its inception, and it directly answers many of Manual Data’s flaws:

- Speed: Simulated Data can be generated on demand with a full range of customization capabilities, eliminating the dependence on real-world event frequency. This allows faster iteration.

- Bias: Simulated Data can provide full transparency into the contents of training data, meeting the exact distribution needs required to train an accurate network.

- Edge cases: Whether simulating dangerous situations or hardware that’s not yet in production, Simulated Data can supplement and strengthen datasets by providing more variance.

- Data richness: The most advanced computer vision applications need pixel-accurate annotations, which humans cannot produce. For visual recognition, manual annotations such as class labels and bounding boxes can suffice, but for dense tasks such as depth estimation or optical flow, manual annotation isn’t good enough. With synthetic data, we have perfect knowledge and control of the data we create, and therefore perfect ground-truth annotations alongside the images. And since the annotations are built into the simulation from the beginning, they’re consistent throughout.
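To make the data-richness point concrete, here is a minimal sketch in Python, assuming NumPy. The `render_scene` function and its toy "scene" of flat colored rectangles are hypothetical illustrations, not Datagen's renderer. Because the code places every object itself, it can write an instance mask, a per-pixel depth map, and bounding boxes in the same pass that draws the image: exactly the layers that are slow or impossible to annotate by hand.

```python
import numpy as np


def render_scene(num_objects, height=64, width=64, seed=0):
    """Draw a toy scene of flat rectangles and return the image together with
    pixel-accurate ground truth produced in the same pass (hypothetical example)."""
    rng = np.random.default_rng(seed)
    image = np.zeros((height, width, 3), dtype=np.uint8)
    instance_mask = np.zeros((height, width), dtype=np.int32)  # 0 = background
    depth = np.full((height, width), np.inf, dtype=np.float32)  # inf = nothing hit

    for obj_id in range(1, num_objects + 1):
        # Random size, position, distance from the virtual camera, and color.
        h = int(rng.integers(8, height // 2))
        w = int(rng.integers(8, width // 2))
        top = int(rng.integers(0, height - h))
        left = int(rng.integers(0, width - w))
        z = float(rng.uniform(1.0, 10.0))
        color = rng.integers(0, 256, size=3)

        region = (slice(top, top + h), slice(left, left + w))
        # Z-buffer test: only overwrite pixels where this object is nearer.
        closer = z < depth[region]
        image[region][closer] = color
        instance_mask[region][closer] = obj_id
        depth[region][closer] = z

    # Bounding boxes of the *visible* pixels, derived from the mask, so they
    # stay exact even when objects occlude one another.
    boxes = {}
    for obj_id in np.unique(instance_mask):
        if obj_id == 0:
            continue
        rows, cols = np.nonzero(instance_mask == obj_id)
        boxes[int(obj_id)] = (int(cols.min()), int(rows.min()),
                              int(cols.max()) + 1, int(rows.max()) + 1)
    return image, instance_mask, depth, boxes


image, mask, depth, boxes = render_scene(num_objects=5, seed=42)
print(image.shape, mask.shape, depth.shape)
print(boxes)  # {object_id: (x_min, y_min, x_max, y_max)}, max coordinates exclusive
```

A production renderer is vastly more complex, but the principle is the same: ground truth is a by-product of generation, so it never drifts from the image the way hand-drawn labels can.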

One more note: privacy is an increasing concern for computer vision data. In a post-GDPR world, companies need to be cautious about the data they collect and use. A fully simulated dataset mimics real-world statistical patterns rather than recording real individuals, so synthetic data enables training without compromising privacy.


Outside computer vision, where networks rely on non-visual data, synthetic data has already shown promise across a range of industries, from medical research, where patient privacy is paramount, to fraud detection, where synthetic datasets can be used to test and increase the robustness of security solutions.

Its use in computer vision is just beginning to catch up. Recently, researchers have begun demonstrating the efficacy of synthetic data. In one paper, researchers at Google trained an object detection model on synthetic images of supermarket items that outperformed a model trained on real data. Experiments with the SYNTHIA dataset for autonomous driving showed that combining manual and synthetic data performed better than manual data alone. Engineers at Unity have made the case for synthetic data and demonstrated its capabilities and advantages. Leading companies in different fields have also recognized its promise. Waymo drives 20 million miles a day in its Carcraft simulation platform, comparable to 100 years of real-world driving. Nvidia has trained robots to pick up objects using synthetic data. DermGAN, a generator of synthetic skin images with pathologies, has helped diagnose skin diseases.

We, at Datagen, are contributing to this research and validation as well.

Skepticism of synthetic visual data is still prevalent. The question is whether these visual datasets can capture and reflect the physical world with enough accuracy and variance to achieve comparable results. Can simulated data express statistical minutiae, especially with few physical reference points? As computer vision applications become more common and more sophisticated, these questions are ever more pressing.

If synthetic data can present an alternative to manual data by addressing these concerns, and provide datasets that are at least equal (if not superior), it has the potential to dramatically change how algorithms are developed. Faster, more efficient development can help deliver the promise of computer vision to our world even sooner.

That’s why, at Datagen, we are building Simulated Data solutions for computer vision network training.

We’ve explained the advantages and promise of synthetic data and the drawbacks of manual data. As you might have noticed, we refer to our work as Simulated Data. So, let’s conclude with an explanation of Simulated Data and how we distinguish it from synthetic data.

Synthetic data is usually defined by negation: data that is not manually gathered. There are numerous methods to create it, from advanced Generative Adversarial Networks (GANs) to simpler methods such as pasting an image onto different templates to generate variety. These methods are not equal, and some are very limited. The visual datasets they generate are huge and cumbersome to work with. Generally, they are designed for a specific task or a single scene. They don’t allow our models to learn like we do, responsively and rapidly. Fundamentally, they are static and must constantly be updated and regenerated to reflect new characteristics, targets, and domains. As neural networks keep getting better, our data needs to keep pace.

Simulated data is synthetic data brought to life. Rapid developments in CGI enable more efficient creation of photorealistic imagery. Our simulations leverage the most sophisticated rendering techniques throughout the entire 3D production pipeline. Virtual cameras let us “photograph” physics-based, photorealistic simulations along with their ground-truth annotations. We integrate layers of algorithmic work and custom models that allow us to create at scale without sacrificing realism.

Simulated data isn’t detached from reality. On the contrary, it is based on real-world physics and 3D modeling. To this end, Datagen is compiling and creating one of the largest libraries of 3D scans, with a special focus on humans. This helps ensure that, as we add variance and breadth of examples, we don’t lose realism. Simulations let us swap features such as lighting, backdrop, and time of day. We can simulate edge cases that cannot be captured manually. We can change the ethnicity and age of our models. Our images can be rendered as infrared or with depth maps, from different angles or through different camera lenses. At the same time, these tools avoid the pitfalls of manual data: the cost of data collection, the privacy issues that arise with human-focused data, and the inherent bias of manual datasets. Advances in computer graphics let us build a customizable, realistic 3D environment for our simulated data, with a dynamic mix of people, objects, and space, all based on high-quality photogrammetric 3D data.
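The "Bias" advantage listed earlier and the feature swaps described here both come down to choosing scene parameters deliberately instead of accepting whatever the real world supplies. The Python sketch below is a hypothetical illustration: the `SceneSpec` fields, the attribute values, and the `quota` and `plan_dataset` functions are invented for this example and are not part of any Datagen product. It converts target proportions for each attribute into exact integer counts with the largest-remainder method, then shuffles those counts into per-scene specifications that a renderer could consume.

```python
import random
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneSpec:
    """Hypothetical parameters a simulator might take for one rendered scene."""
    lighting: str
    backdrop: str
    time_of_day: str
    lens: str


def quota(total, proportions):
    """Convert target proportions into integer counts that sum exactly to
    `total`, using the largest-remainder method."""
    if abs(sum(proportions.values()) - 1.0) > 1e-9:
        raise ValueError("proportions must sum to 1")
    raw = {value: total * p for value, p in proportions.items()}
    counts = {value: int(r) for value, r in raw.items()}  # round down first
    leftover = total - sum(counts.values())
    # Hand the remaining slots to the values with the largest fractional parts.
    by_remainder = sorted(raw, key=lambda v: raw[v] - counts[v], reverse=True)
    for value in by_remainder[:leftover]:
        counts[value] += 1
    return counts


def plan_dataset(total, targets, seed=0):
    """Build `total` scene specs whose per-attribute counts match `targets`
    exactly. `targets` must have one entry per SceneSpec field."""
    rng = random.Random(seed)
    columns = {}
    for attr, proportions in targets.items():
        column = [v for v, n in quota(total, proportions).items() for _ in range(n)]
        rng.shuffle(column)  # pair attribute values at random across scenes
        columns[attr] = column
    return [SceneSpec(**{attr: columns[attr][i] for attr in targets})
            for i in range(total)]


targets = {
    "lighting": {"natural": 0.5, "artificial": 0.3, "mixed": 0.2},
    "backdrop": {"kitchen": 0.25, "office": 0.25, "street": 0.5},
    "time_of_day": {"morning": 0.4, "midday": 0.3, "evening": 0.3},
    "lens": {"wide": 0.5, "telephoto": 0.5},
}
specs = plan_dataset(1000, targets)
print(Counter(s.lighting for s in specs))
```

One design choice worth noting: this fixes each attribute's distribution exactly but pairs values at random, so specific combinations (say, artificial lighting on a street) are only balanced on average. Balancing combinations as well would mean stratifying over the product of attributes instead.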

At Datagen, we see synthetic data as an initial stage toward the next generation of computer vision training datasets. Simulated Data is the future. We intend to continue our work and truly simulate the real world with photorealistic recreations of the environment around us. For us, synthetic data isn’t enough. We are excited by the potential of Simulated Data and look forward to sharing it.
