Five Takeaways from GTC 2021

Nvidia gave us a glimpse into the future of AI at its GPU Technology Conference (GTC) 2021. Here, we summarize five key takeaways from the conference.

1. Omniverses are interconnected virtual worlds

Talk of the metaverse has dominated headlines recently, and for good reason. In the future, we’ll be able to teleport across virtual worlds as easily as we hop from one webpage to the next today. At GTC 2021, Nvidia showed the world how its Omniverse is more than a game engine or social network and how it can revolutionize industries like entertainment, architecture, and even engineering at scale.

The Omniverse is a scalable, multi-GPU, real-time reference development platform for 3D simulation and design collaboration. It leverages Pixar’s open-source Universal Scene Description (USD) as its scene representation framework. Originally developed to meet the demands of large-scale 3D visual effects production, USD has composition features for combining individual assets into larger entities and acts as an interchange format between digital content tools, allowing assets to move across applications.
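To make the idea of composition concrete, here is a minimal sketch in plain Python. It is a toy model, not the USD API: the layer names (`robot_asset`, `factory_layout`, `shot_overrides`) and the `compose` function are hypothetical, and the only behavior it illustrates is that separate layers contribute opinions about the same scene and the strongest layer wins.

```python
# Toy model of layered scene composition; this is NOT the USD (pxr) API.
# Each "layer" maps a prim path to a dict of attribute opinions.
robot_asset = {
    "/Robot": {"color": "gray", "payload_kg": 5},
    "/Robot/Arm": {"joints": 6},
}
factory_layout = {
    "/Robot": {"position": (2.0, 0.0, 1.5)},
}
shot_overrides = {
    "/Robot": {"color": "orange"},
}


def compose(layers):
    """Merge layers listed strongest first; the strongest opinion wins."""
    stage = {}
    for layer in reversed(layers):  # apply the weakest layer first
        for path, attrs in layer.items():
            stage.setdefault(path, {}).update(attrs)
    return stage


stage = compose([shot_overrides, factory_layout, robot_asset])
print(stage["/Robot"])
```

The original asset is never modified: the override lives in its own layer, which is what lets several tools or teams work on the same scene without stepping on each other.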

The Omniverse has five key components that power its capabilities:

- Nucleus (its database and collaboration platform),

- Connector (for connecting asset libraries to the Omniverse),

- Kit (for developers to develop their plugins and microservices for the ecosystem),

- Simulation (for simulating physics in the Omniverse), and

- Renderer (for generating the images).

The Omniverse is built with a modular development framework, making it customizable and extensible. This means that developers can build or use existing extensions, apps, connectors, and microservices on the Omniverse. Here are just a few examples:

Replica Studios is an Omniverse extension for developers to create realistic voiceovers for synthetic characters (Source)

NVIDIA Drive Sim is an Omniverse app for end-to-end simulation of autonomous vehicles (Source)

Examples of Connectors available on the Omniverse (Source)


At GTC 2021, Nvidia showed off digital twins built in the Omniverse, including virtual factories: spaces where robots are designed, trained, and continuously monitored. Most notably, Nvidia developed a digital twin platform with Siemens Energy. Built to predict corrosion in steam turbines, this digital twin simulates steam physics with the Modulus Physics-ML framework. According to Nvidia, such predictive capabilities could cut downtime by 70% and save the industry $1.7 billion per year.

Simulating the flow of steam using Modulus in the Omniverse (Source)

2. Machine learning will allow for better physics simulation, accelerating scientific discovery

The arrival of ever-faster chips and computing systems over the past decade has enabled highly parallel deep learning, turbocharging scientific progress. In particular, applying machine learning to physics simulation (Physics-ML) helps to solve previously intractable problems in the sciences, such as drug discovery and protein folding.

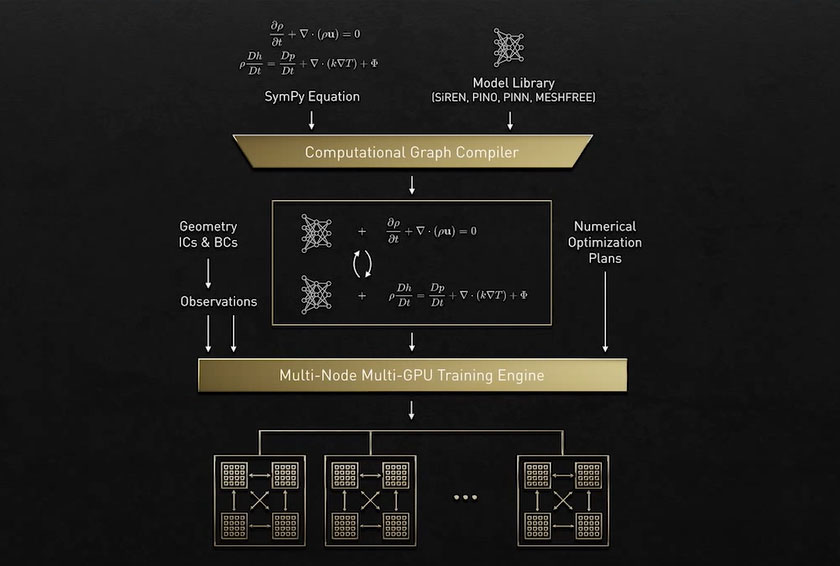

That is why the announcement of Modulus at GTC 2021 is significant. Modulus is a framework for training neural networks using the fundamental laws of physics, allowing the behavior of complex systems to be modeled accurately. Compared to traditional simulations, the resulting models from Modulus are 1,000 to 100,000 times faster.
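The core idea, fitting a model so that it obeys a physical law rather than matching labeled data, can be sketched in a few lines. The example below is our own toy and does not use Modulus: the "physics" is the decay law du/dt = -K·u with u(0) = 1, the model is a small polynomial rather than a neural network, and we minimize the squared residual of the law at sampled points with a least-squares solve instead of gradient-based training. All names (`K`, `DEGREE`, `fit`, `u`) are hypothetical.

```python
import math

K = 1.0      # decay rate in the toy law du/dt = -K * u, with u(0) = 1
DEGREE = 4   # surrogate model: u(t) = 1 + c1*t + c2*t^2 + ... (u(0) = 1 is built in)


def features(t):
    """Derivative of the residual u'(t) + K*u(t) with respect to each coefficient."""
    return [j * t ** (j - 1) + K * t ** j for j in range(1, DEGREE + 1)]


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit(points):
    """Choose coefficients that minimize the squared physics residual at the points."""
    gram = [[0.0] * DEGREE for _ in range(DEGREE)]
    rhs = [0.0] * DEGREE
    for t in points:
        phi = features(t)
        for i in range(DEGREE):
            rhs[i] -= K * phi[i]  # residual = phi . c + K, so the constant moves right
            for j in range(DEGREE):
                gram[i][j] += phi[i] * phi[j]
    return solve(gram, rhs)


def u(coeffs, t):
    """Evaluate the fitted surrogate at time t."""
    return 1.0 + sum(c * t ** (j + 1) for j, c in enumerate(coeffs))


points = [i / 50 for i in range(51)]  # sampled (collocation) points in [0, 1]
coeffs = fit(points)
print("surrogate u(1):", u(coeffs, 1.0), "exact:", math.exp(-K))
```

No measurements of u are used anywhere: the only supervision is the equation itself, evaluated at sampled points. Once fitted, the surrogate is cheap to evaluate, which is where the speedup over a traditional solver comes from.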

Modulus is powerful thanks to the following elements:

- A sampling planner that lets users customize the sampling approach.

- Python-based APIs for building physics-based neural networks.

- Network architectures with a track record of solving physics problems.

- A physics-ML engine that trains the model using PyTorch and TensorFlow on GPUs.

The Modulus framework in a nutshell (Source)

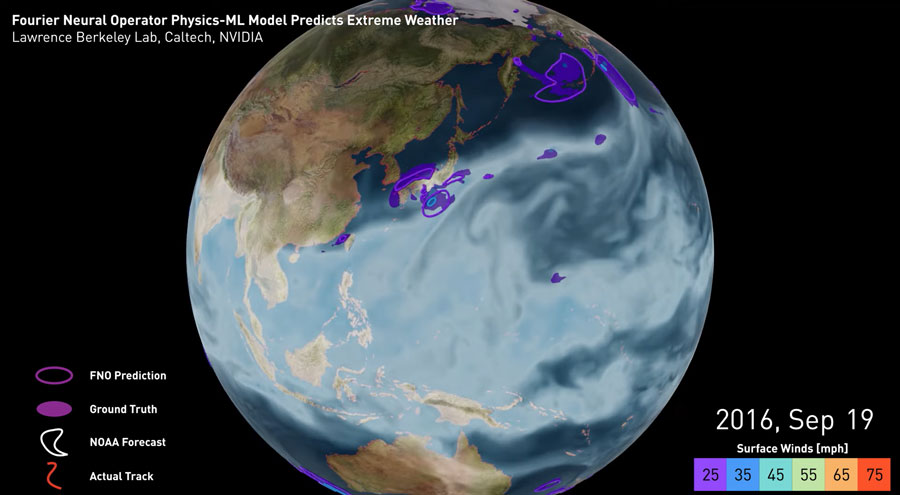

Simulating climate change accurately over long horizons is challenging because of the multitude of variables involved. Recently, Modulus enabled a Physics-ML model that can provide a 7-day forecast of hurricane path and severity in a quarter of a second.

Modulus allows for accurate and quick hurricane severity predictions (Source)

Modulus is also a stepping stone towards Nvidia’s ambition of performing large-scale, high-resolution climate change simulations. At GTC 2021, Nvidia revealed its plan to build a supercomputer called Earth-2. Dedicated to creating the earth’s digital twin, Earth-2 will enable accurate wind energy forecasting, extreme weather prediction, and disaster mitigation. “All the technologies we’ve invented up to this moment are needed to make Earth-2 possible,” Huang said. “I can’t imagine a greater or more important use.”

Modulus is a stepping stone towards building the earth digital twin in the Omniverse (Source)

3. Hardware and software developments are accelerating NLP and IoT

At GTC 2021, Nvidia also showcased advances in its existing NLP and edge device platforms.

Natural Language Processing (NLP)

The NLP space has seen tremendous progress in the past decade thanks to the rapid development of novel architectures such as seq2seq and transformer models. Today, such architectures form the backbone of large language models like Megatron 530B, which are trained on massive text corpora and have hundreds of billions of parameters.

Given the growing demand for ever-larger language models, the unveiling of the NeMo Megatron framework is timely. Built on advancements from Megatron, NeMo Megatron promises to speed up the development of large language models (LLMs) with efficient data processing libraries and highly distributed training.

Practitioners lament the long inference times of LLMs, which limit their usefulness in real-time settings. The Triton Inference Server addresses this gap with its new multi-GPU, multi-node feature. LLM inference workloads can now be distributed across multiple GPUs, making it feasible to deploy LLMs in real-time applications where quick inference is a top priority.
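One common way to spread a single model across devices is to split each weight matrix into shards, let every device compute its slice of the output, and gather the slices. The sketch below illustrates that idea in plain Python with lists standing in for GPUs. It is an assumption-laden toy: it does not use Triton, the function names are hypothetical, and how Triton actually partitions work is not covered here.

```python
def matmul(x, w):
    """Multiply a row vector x (length n) by a matrix w with n rows."""
    cols = len(w[0])
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(cols)]


def split_columns(w, parts):
    """Split w column-wise into shards, one per simulated device."""
    cols = len(w[0])
    step = (cols + parts - 1) // parts
    return [[row[s:s + step] for row in w] for s in range(0, cols, step)]


def sharded_matmul(x, w, devices):
    """Compute x @ w shard by shard and gather the results in column order."""
    outputs = []
    for shard in split_columns(w, devices):  # each shard could live on its own GPU
        outputs.extend(matmul(x, shard))
    return outputs


x = [1.0, 2.0, 3.0]
w = [
    [1.0, 0.0, 2.0, 1.0],
    [0.0, 1.0, 1.0, 3.0],
    [2.0, 1.0, 0.0, 1.0],
]
# The sharded result matches the single-device result.
assert sharded_matmul(x, w, devices=2) == matmul(x, w)
```

Each device holds only part of the weights, so a model too large for one GPU's memory can still be served, and the shards can be computed in parallel.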


Edge Devices

Today, edge devices help e-commerce giants manage inventories in warehouses, governments direct traffic in smart cities, and manufacturing companies perform optical inspection in factories. Using AI to analyze the deluge of data from the cameras and sensors on these devices in real time is no easy task.

Metropolis simplifies that process. It is a platform to develop, deploy, and scale AI-enabled video analytics on the edge. More concretely, Metropolis leverages streaming video to infer 3D poses and reconstruct 3D scenes. Nvidia demonstrated how sensors in a space integrated with Metropolis can be used to control a robot with gestures, and even to reconstruct a 3D scene almost instantly with a neural radiance field. The growing ubiquity of 5G networks will further fuel the growth of edge devices, enabling more extensive video analytics on the edge.

Metropolis enables instant 3D Scene Reconstruction (Source)

4. Our digital personal assistants are becoming smarter

Some people cannot imagine their lives without the likes of Siri and Alexa. Soon, personal assistants will be more than just a voice that lives on our devices. Instead, they will be full-fledged avatars who can understand user intent, converse on a vast array of topics, and provide spot-on recommendations.

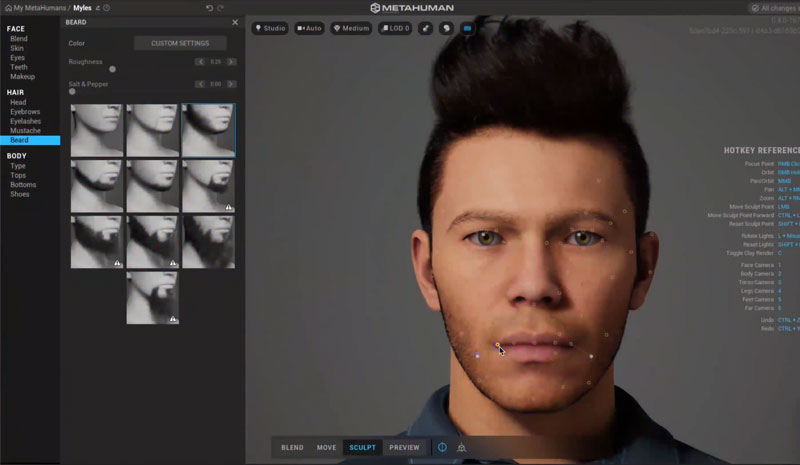

Omniverse Avatar is one such futuristic personal assistant. It brings together speech AI, computer vision, natural language understanding, recommendation engines, and simulation technologies.

An avatar version of CEO Jensen Huang, dubbed Toy Jensen, stole the spotlight at the GTC 2021 keynote speech. When asked questions on complex topics in biology and astronomy, Toy Jensen gestured and answered naturally, in a voice that was practically indistinguishable from Huang’s.

Toy Jensen stealing the spotlight at GTC (Source)

Those looking to build lifelike Omniverse Avatars will not be disappointed with Project Maxine, a software development kit (SDK) that allows realistic avatars to be built from the image of a person. Soon, Maxine will be able to reconstruct digital faces based only on audio inputs.

Project Maxine generates photorealistic avatars (Source)

Nvidia further explained that Omniverse Avatar’s speech capabilities are powered by Riva, its speech AI software. Riva is a jack-of-all-trades in understanding natural language: it can close-caption, translate, summarize, answer questions, and understand user intent in seven languages today. Impressively, Riva can be tuned to a specific voice with as little as 30 minutes of a person’s speech. At GTC 2021, Nvidia announced that Riva would be released publicly in 2022, to the delight of its audience.

5. Better hardware and simulated data are the fuel of the robotic revolution

Robots are becoming increasingly indispensable. Today, e-commerce giants like Amazon deploy robots in their giant warehouses to help fulfill the voracious demand for online shopping. Cleaning robots, last-mile delivery robots, and even restaurant and retail robots are also growing in popularity.

Robots are complex. They need to perceive the environment, reason about their location, and develop a plan to fulfill their goals in real-time. As such, robots need powerful hardware to sense their environment efficiently and make decisions quickly.

At GTC 2021, Nvidia announced its new robotics chip, Orin, which can run the entire robotics pipeline (processing sensor data, simulating physics, and performing AI predictions) on a single chip. Equipped with 12 Arm CPU cores, 5.2 TFLOPS of FP32 compute, and 250 TOPS for AI, Orin is a compact, energy-efficient AI supercomputer for robotics. The chip is also the building block of the Holoscan platform, an AI computing platform that brings real-time sensing to medical devices.

Training robots with simulated data

Robots learn to make inferences and decisions by training on large labeled datasets. However, such datasets can be prohibitively expensive to label or impossible to obtain.

As such, Nvidia leverages its Omniverse to create an environment simulator called Isaac Sim, which generates data for robot training and testing. Isaac Sim is a scalable robotics simulation application and synthetic data generation tool for AI-based robots. It faithfully simulates the robot’s sensors, supports domain randomization (variations in texture, color, and lighting), and automatically labels the generated data.
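Domain randomization and automatic labeling are easy to picture with a small sketch. The code below is a hypothetical illustration in plain Python, not the Isaac Sim API: `random_scene`, the texture list, and the parameter ranges are all made up. The point is that the generator chooses both the appearance and the object placement, so the label comes for free.

```python
import random

TEXTURES = ["metal", "wood", "plastic"]  # hypothetical texture library


def random_scene(rng):
    """Sample one randomized scene plus the label the simulator already knows."""
    x, y = rng.uniform(0.0, 1.0), rng.uniform(0.0, 1.0)
    size = rng.uniform(0.05, 0.2)
    scene = {
        "texture": rng.choice(TEXTURES),
        "color_rgb": tuple(rng.randint(0, 255) for _ in range(3)),
        "light_intensity": rng.uniform(0.2, 1.0),
    }
    # The object was placed by the generator, so its bounding box is exact.
    label = {"class": "box", "bbox": (x, y, x + size, y + size)}
    return scene, label


rng = random.Random(0)  # fixed seed so the dataset is reproducible
dataset = [random_scene(rng) for _ in range(100)]
print(dataset[0])
```

Varying texture, color, and lighting across samples discourages a model from latching onto any single rendering style, which is the motivation behind domain randomization.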

Isaac Sim leverages Nvidia’s PhysX 5 for physics simulation, RTX for realistic real-time ray and path tracing, and the Material Definition Language (MDL) for creating and simulating materials. As a result, the virtual environments it creates are physically accurate enough to closely resemble their physical counterparts. In other words, Isaac Sim strives to minimize the domain gap between simulated and actual environments.

A virtual environment created for a robot by Isaac Sim (Source)

Generating data for autonomous vehicles (AV)

Training autonomous vehicles to navigate roads requires a massive dataset of on-road images labeled with bounding boxes. Hand-labeling real-world images can be laborious, error-prone, and expensive. Moreover, real-world datasets might not be diverse enough to teach AVs to react appropriately to rare and dangerous events.

Drive Sim Replicator is Nvidia’s answer to AVs’ insatiable appetite for large labeled datasets. Built on the Omniverse, Replicator generates hyperrealistic synthetic data that augments real-world data. It leverages Omniverse’s ray-tracing renderer to generate accurate sensor data for cameras, radars, lidars, and ultrasonic sensors at real-time rates, helping to minimize the gap between synthetic and real-world data.

The labels for such synthetic data come at no additional cost. Better yet, labels are available even in cases that humans find difficult or impossible to label, such as high-speed objects, occluded objects, or adverse weather conditions.
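Why are occluded objects easy to label in simulation? Because the generator knows where every object is, whether or not the camera can see it. The toy below makes that concrete with axis-aligned boxes on a pixel grid. It is our own sketch, not Drive Sim Replicator code, and the `Box` class, field names, and scene values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box:
    name: str
    x0: int
    y0: int
    x1: int
    y1: int
    depth: float  # distance from the camera; smaller means closer


def visible_fraction(target, others):
    """Fraction of the target's pixels not hidden by boxes closer to the camera."""
    total = visible = 0
    for x in range(target.x0, target.x1):
        for y in range(target.y0, target.y1):
            total += 1
            hidden = any(
                o.depth < target.depth and o.x0 <= x < o.x1 and o.y0 <= y < o.y1
                for o in others
            )
            visible += not hidden
    return visible / total


def label_scene(boxes):
    """Emit a full bounding box for every object, including occluded ones."""
    labels = []
    for b in boxes:
        others = [o for o in boxes if o is not b]
        labels.append({
            "name": b.name,
            "bbox": (b.x0, b.y0, b.x1, b.y1),
            "visible": visible_fraction(b, others),
        })
    return labels


scene = [
    Box("pedestrian", 10, 0, 20, 30, depth=12.0),
    Box("parked_car", 0, 0, 18, 20, depth=8.0),
]
labels = label_scene(scene)
for entry in labels:
    print(entry)
```

The pedestrian's label covers its full extent even though the car hides part of it, and the visibility score records how much was hidden. A human annotator looking at the rendered image would have to guess both.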

Drive Sim Replicator is capable of labeling occluded pedestrians (Source)

The future of AI is Synthetic

“A constant theme you’ll see,” Jensen Huang said in his keynote, “is how the Omniverse is used to simulate digital twins of warehouses, plants, and factories, of physical and biological systems, the 5G edge, robots, self-driving cars, and even avatars.” Nvidia’s vision of the future is of simulated environments that resemble our world today. Having seen the breakneck speed at which cutting-edge hardware and powerful tools are being built, we dare say that a future of living in vast simulated worlds is on the horizon.

At Datagen, we believe the future of AI is synthetic.
