Highlights from ECCV 2022, Part II

In our last blog, we published 3 of our 6 highlighted papers from ECCV 2022 in part I. We reviewed 3D-Aware Indoor Scene Synthesis with Depth Priors, Make-A-Scene: Scene-Based Text-to-Image Generation with Human Priors and Physically-Based Editing of Indoor Scene Lighting from a Single Image here.

Keep reading to see the last three:

1. Text2LIVE: Text-Driven Layered Image and Video Editing

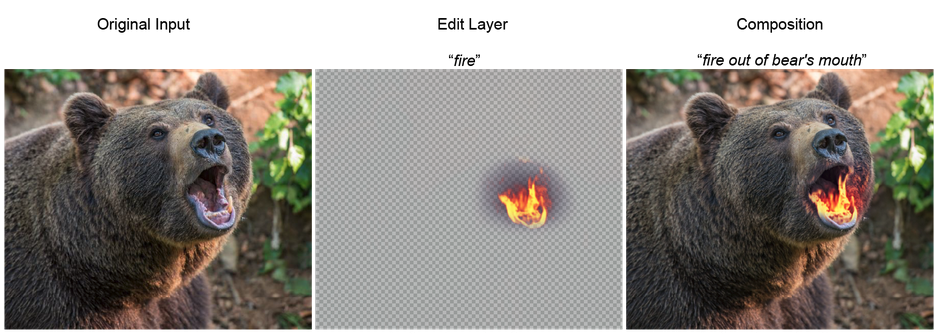

Text-to-image generators like DALL-E demonstrated their ability to generate images from natural language. However, instead of generating an image, can natural language be used as a prompt to edit an image instead? The authors of Text2LIVE presented a solution to that. Given a text prompt, accompanied by an image or video, Text2LIVE can change the appearance of an object (Figure 1) or add visual effects like smoke or fire to the scene (Figure 2).

Figure 1. Text2LIVE can change the appearance of a cake with a text prompt (Source)

Figure 2. Text2LIVE demonstrates its ability to add visual effects to pictures with text prompts (Source)

The authors achieved this by training a generator using an internal dataset that is extracted from a single input. Simultaneously, the authors leverage an external, pre-trained CLIP model to establish their losses. The authors recognized that controlling the localization of the edits and the preservation of the original content are two key elements for a high-quality edit. To achieve that, Text2LIVE uses layered editing, which allows the authors to control the content of the edit via dedicated losses applied to the edit layer. Text2LIVE also devise a new type of loss to preserve the original content of the image and ensure that the edits are localized.

2. Grasp’D: Differentiable Contact-rich Grasp Synthesis for Multi-fingered Hands

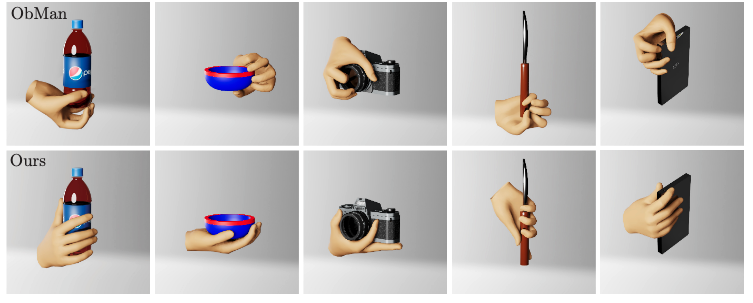

Figure 3. Grasp synthesis by Grasp’D

The task of grasp synthesis involves producing an image that simulates the way a hand comes into contact with an object. It is usually done by optimizing a grasping metric, which can either be analytic or simulation-based metrics. Analytic metrics are handcrafted measures; they are often computationally fast but translate poorly to the real world. on the other hand, a simulation-based metric measures the strength of a grasp by simulating whether the object will drop upon shaking. Yet, this is computationally intensive. Since neither of the analytic and simulation-based metrics is differentiable, optimization is usually a black-box process. When the dimensionality of the search space becomes infeasibly high, it becomes inevitable to make simplifying assumptions to make the black-box process tractable.

The authors thus proposed Grasp’D, a grasp synthesis pipeline based on differentiable simulation. Grasp’D takes in an object and a hand model as input and generates a physically plausible grasp by iteratively optimizing the metric computed by differentiable simulation. As a result, it produces grasps that are contact-rich without any simplifying assumptions. Grasp’D is also able to find grasps that are more stable and have a higher contact area as compared to grasps generated with analytic grasp baselines (Figure 4). Grasps synthesized by optimizing analytical metrics, such as in ObMan in the top row, are unnatural and have sparse contact at the fingertips. On the other hand, Grasp’D generates contact-rich grasps that resemble real human grasps.

Generate Synthetic Data with Our Free Trial. Start Now!

Figure 4. Grasp’D (bottom row) produces grasps that are richer in contact than ObMan (top row), an existing state-of-the-art grasp synthesis method

3. Neural Radiance Transfer Fields for Relightable Novel-View Synthesis with Global Illumination

The task of regenerating a scene from a novel perspective or lighting condition is no easy feat. In the realm of Computer Vision, this inverse task is often accomplished by making simplifying assumptions about the 3D scenes. However, such simplifying assumptions limit existing methods from recovering high-fidelity properties of the scene. Thus, they are unable to re-rendering scenes with global illumination effects.

In the world of Computer Graphics, photo-realistic image synthesis methods explored the use of path tracing to model indirect illumination. In particular, precomputed radiance transfer (PRT) is introduced to efficiently approximate global illumination.

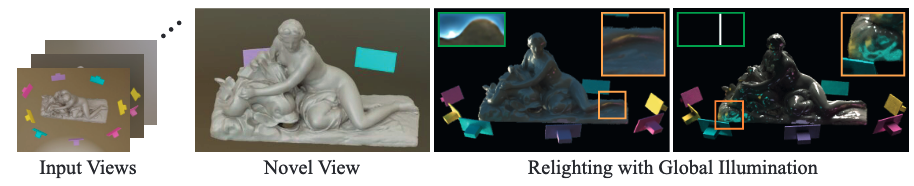

Figure 5. A proposed method synthesizing novel views and relighting with global illumination with multiple views of a scene

The authors of the paper Neural Radiance Transfer Fields for Relightable Novel-view Synthesis with Global Illumination (Figure 5) proposed to combine the learnings from both Computer Vision and Computer Graphics. In particular, this method relights scenes under novel views by learning the neural precomputed radiance transfer function (which determines the global illumination effects of an image).

The results from the paper show superior results to existing state-of-the-art methods. Specifically, the proposed method bested current methods like Mutsuba2, PhySG, and Neural-PIL in the task of novel view synthesis. Not only does the proposed method produce the sharpest and most photo-realistic renders, but it is also the only one that accurately recovered the effects of indirect illumination.

ECCV 2024 and beyond

This year’s ECCV presented a multitude of findings that advance the state-of-the-art of synthetic data. These breakthroughs will form the foundation of tomorrow’s synthetic data research. We look forward to more ground-breaking discoveries in computer vision at future ECCV conferences.