Robotics in the Age of Corona: More Interest, Less Data

We are only halfway through 2020 and, already, COVID-19 has reshaped how societies around the world navigate their dependence on face-to-face, close-proximity human interactions for everything from providing medical care to operating restaurants to offering social interaction to isolated populations. In an effort to minimize the risks to front-line workers while providing these critical services, companies and governments are increasingly looking to robots for help. From disinfecting hospitals to restocking grocery stores and more, AI-powered robotics can take over a wide range of critical tasks while maintaining social distancing. There has long been interest in the automation of work by robots – a result of both long-term technological development and acute historical events – but COVID-19’s global impact and severity have accelerated interest in devising new ways that robots can help.

In this post, we explore key areas in which COVID-19, while increasing demand for robot solutions, is simultaneously making these improvements harder to achieve. We’ll also look at some of the ways that teams are overcoming these challenges to meet the needs of the moment.

Computer Vision and Training Data

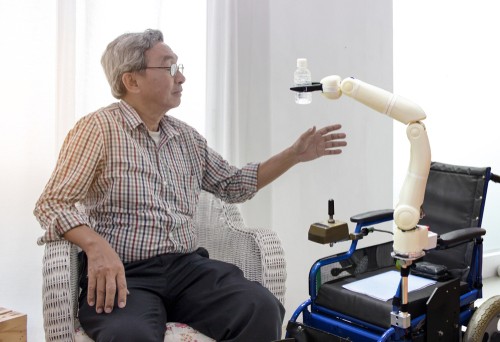

The ability of robots to supplement or replace human workers in high-risk environments will largely depend on their level of sophistication. Some may serve as interfaces for humans operating them at a distance – for instance, robots that can wheel up to a patient’s bedside to perform simple tasks or allow a physician to speak to the patient through video chat without risking exposure. These robots are often remote-controlled and don’t operate autonomously. Others integrate some form of AI and computer vision technology – for instance, robots that detect human heads for infrared temperature screening. But the most sophisticated robots use computer vision to navigate complex environments and interact with both the objects and the humans in them.

Corona aside, computer vision systems that can handle real-world situations and dynamic settings are difficult to build, in part because of the challenges associated with collecting training data. The environments where robotics can be most impactful – hospitals, airports, grocery stores, factories – are highly complex. They contain a wide range of objects, a lot of variation in layouts and conditions, and large numbers of humans simultaneously interacting with the environment. First, this makes data messy: occlusion is highly likely, and the spaces are often large and difficult to capture cleanly with camera arrays. In turn, the messiness of the data and the complexity of the environments can make the data difficult to annotate manually. Plus, the dynamism of these environments means that sophisticated development likely requires motion (video) data, which is harder to collect and manually annotate.

Another challenge is that data collection in hospitals, schools, and other non-commercial spaces is complicated by privacy concerns and the intrusiveness of data collection activities, leading some researchers to search for privacy-friendly ways to collect data.

Read our Survey on Synthetic Data: The Key to Production-Ready AI in 2022

Collecting sufficient amounts of data to capture these environments has always been a difficult and expensive task. It is also a task that must be done well; computer vision system errors and biases in hospitals can be matters of life and death. But the opportunities for transformative innovation in these contexts are so great that teams continue to invest millions of dollars in building datasets big enough, diverse enough, unbiased enough, and annotated enough to effectively train their algorithms. All of this is to say that, even before Corona, the process of building computer vision systems designed to navigate these environments was a challenging, expensive, and crucial undertaking.

Corona Complications

Corona is creating a new sense of urgency in the development of smart robot “workers” at the same time as it is making this data collection even harder. Due to restrictions on movement and global lockdowns, teams may have a harder time accessing the locations where data can be collected. Even if teams are able to access these environments, the environments are likely to be operating abnormally, creating a risk of biased data. Robots trained on such data learn from scenes that do not reflect what these environments look like when social-distancing rules are not in effect. For instance, if lower occupancy limits at a supermarket lead to empty aisles and allow stores to maintain well-organized shelves, the data collected may be unable to train algorithms to operate in crowded or messier environments. Teams that are building wearables or other technologies that demand capture of human-object interaction may be out of luck completely. There is simply no way to reach and scan, photograph, or record enough people at a time when face-to-face interactions are prohibited.

This is pushing teams to find creative solutions to meet demand. Here are three ways that teams are forging ahead with data gathering, even during the COVID-19 pandemic:

Automated Data Augmentation

In this research, teams at Google and Waymo present ways to augment point cloud data automatically using evolutionary-based search algorithms. They point to prior research showing the efficacy of manually designed augmentation methods, but suggest that these are not efficient enough to be practical, especially for point cloud object detection from data sources such as LiDAR.

Many companies and teams possess data gathered before the onset of COVID-19. Their ability to augment this data efficiently and effectively can allow them to proceed with training at times when manual collection of new data is impossible. However, there are a number of challenges. First, if key scenarios or edge cases don’t exist at all in a dataset, they cannot be created through augmentation. For instance, if you have thousands of hours of driving data but none of your cameras ever captured a car crash or an active construction site, no level of augmentation will be able to introduce these important events into the data. Second, adopting this tactic depends on the ability of teams to adapt these algorithmic methods to their specific use cases, a process that can divert critical R&D resources.
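To make the idea of point cloud augmentation concrete, here is a minimal sketch in plain Python. It is not the method from the research mentioned above; the function names, the `policy` dictionary, and its three strength parameters are all assumptions made for illustration. It applies a random rotation about the vertical axis, a uniform scale, and a little Gaussian jitter to a list of (x, y, z) points, and shows the kind of mutation step an evolutionary-style search might use to propose new augmentation strengths.

```python
import math
import random

def augment_point_cloud(points, policy, rng):
    """Apply one randomly sampled rotation, scale, and jitter to (x, y, z) points.

    `policy` is a hypothetical dict of augmentation strengths; an automated
    search would tune these values instead of a person choosing them by hand.
    """
    angle = rng.uniform(-policy["max_rotation_rad"], policy["max_rotation_rad"])
    scale = rng.uniform(1.0 - policy["max_scale_delta"], 1.0 + policy["max_scale_delta"])
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    augmented = []
    for x, y, z in points:
        # Rotate about the vertical (z) axis, then scale uniformly.
        rx = (x * cos_a - y * sin_a) * scale
        ry = (x * sin_a + y * cos_a) * scale
        rz = z * scale
        # Add small Gaussian noise to imitate sensor jitter.
        augmented.append((
            rx + rng.gauss(0.0, policy["jitter_std"]),
            ry + rng.gauss(0.0, policy["jitter_std"]),
            rz + rng.gauss(0.0, policy["jitter_std"]),
        ))
    return augmented

def mutate_policy(policy, rng, step=0.2):
    """Return a copy of the policy with each strength perturbed by up to +/- step (relative)."""
    return {name: value * (1.0 + rng.uniform(-step, step)) for name, value in policy.items()}

# Illustrative values only; none of these numbers come from the research above.
rng = random.Random(0)
policy = {"max_rotation_rad": math.pi / 4, "max_scale_delta": 0.05, "jitter_std": 0.01}
cloud = [(1.0, 0.0, 0.5), (0.0, 2.0, 1.0), (-1.5, 0.5, 0.0)]
augmented_cloud = augment_point_cloud(cloud, policy, rng)
candidate_policy = mutate_policy(policy, rng)
```

In a real search, each candidate policy would be scored by training a detector with it and measuring validation accuracy, which is the expensive part. Note that every output point here is a transformed copy of an input point, which is why augmentation alone cannot introduce a scenario that was never recorded.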

Re-visit, Re-label, Re-use

Unable to collect new data, some companies are trying to extract even more value out of existing data. As this article reveals, this often requires a massive investment of time, either in-house or outsourced, in order to add new annotations or manually uncover new edge cases.

Again, this approach seems most common in the automotive industry, where companies are likely to have enormous amounts of data collected from cameras mounted on vehicles. With no new data, these efforts are necessarily limited. Occurrences that were never recorded or situations that can’t be labeled effectively are simply not accounted for.

Simulated Data

Simulated data generation empowers companies to create wholly new sets of data containing all of the necessary parameters and metadata without relying on new data collection efforts. Simulations are already in widespread use in the automotive sector. But as the above-referenced articles imply, other sectors are lagging behind in their ability to obtain the training data they need during the COVID-19 pandemic.

In robotics, for instance, teams are less likely to have huge datasets of complex indoor environments (especially with humans in them) that they can augment or re-visit. Additionally, it is likely that automotive companies will be able to get back on the road with cameras well before robotics-focused companies are able to collect new data in homes, factories, hospitals, or retail environments. Fortunately, simulated data relies on just a tiny sample of basis references to generate entire datasets with hundreds of asset classes and full inclusion of edge cases. This makes it a feasible option for many teams, not just those already sitting on huge mountains of data.
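As a rough illustration of why simulation sidesteps the collection problem, the sketch below samples scene descriptions for a simulated supermarket aisle. Everything in it is hypothetical: the parameter lists, the `sample_scene` and `generate_dataset` names, and the occupancy ranges are assumptions, and a real pipeline would pass each description to a renderer, which is not shown. The point is that the share of crowded scenes is a setting the team chooses rather than something dictated by current occupancy limits, and that the labels are known by construction.

```python
import random

# Hypothetical scene parameters for a simulated supermarket aisle.
LIGHTING = ["bright", "dim", "flickering"]
SHELF_STATES = ["tidy", "messy", "empty"]

def sample_scene(rng, crowded_fraction=0.3):
    """Sample one scene description; its labels are known because we chose them."""
    crowded = rng.random() < crowded_fraction
    # Crowded scenes get many shoppers; the rest get few or none.
    num_people = rng.randint(8, 20) if crowded else rng.randint(0, 3)
    return {
        "lighting": rng.choice(LIGHTING),
        "shelf_state": rng.choice(SHELF_STATES),
        "num_people": num_people,
        "is_crowded": crowded,
    }

def generate_dataset(num_scenes, seed=0, crowded_fraction=0.3):
    """Generate a reproducible list of scene descriptions."""
    rng = random.Random(seed)
    return [sample_scene(rng, crowded_fraction) for _ in range(num_scenes)]

scenes = generate_dataset(1000)
crowded_share = sum(s["is_crowded"] for s in scenes) / len(scenes)
```

Raising `crowded_fraction` is all it takes to over-represent busy aisles, a condition that real cameras in a half-empty store could not capture at all.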

COVID-19 did not create the interest in robotic solutions across this range of contexts, but it has certainly accelerated it. Future events are likely to apply additional pressure in this direction. With this sense of urgency, especially as Corona continues to disrupt data-collection efforts, we expect simulated data to provide an increasingly attractive solution to teams rushing to usher in the next generation of computer-vision-powered automated robots.
