The Biggest News in Computer Vision in 2022

2022 has been an incredibly eventful year for the world of computer vision and synthetic data. Here is a roundup of the most exciting developments from this year.

Generative AI models steal the spotlight

Text-to-image generative models impressed the world with their ability to produce high-resolution, high-fidelity images from text prompts. 2022 saw the release of several powerful text-to-image models that outperformed their predecessors.

In mid-2022, OpenAI released DALL-E 2. It can make realistic edits to existing images, replicate the style of other images, and even extend a single image beyond its original borders. Midjourney, another text-to-image model, launched its open beta in 2022, and Google unveiled its own text-to-image model, Imagen, the same year.

Stability AI released Stable Diffusion in August 2022 and followed up with Stable Diffusion 2.0 in November. Built on latent diffusion, which runs the diffusion process in a compressed latent space instead of on raw pixels, Stable Diffusion performs competitively with the state of the art on a range of image generation tasks with a much smaller computational footprint. Its developers also made the code and model weights public, a marked departure from competitors DALL-E 2 and Midjourney.
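
The compute savings of latent diffusion come from denoising a small latent tensor rather than the full image. The sketch below illustrates this under a stated assumption: it uses the commonly cited Stable Diffusion v1 setup, where a 512x512 RGB image is encoded into a 64x64 latent with 4 channels. It also shows the standard DDPM-style forward noising step that a latent diffusion model applies to that latent. The helper names are illustrative, and the autoencoder, noise schedule, and denoising network are all omitted.

```python
import math
import random

# Assumption for illustration: Stable Diffusion v1 encodes a 512x512 RGB image
# into a 4-channel latent that is 8x smaller along each spatial dimension.
IMAGE_SHAPE = (512, 512, 3)
LATENT_SHAPE = (512 // 8, 512 // 8, 4)


def num_elements(shape):
    """Number of values a denoising step must process for a tensor of this shape."""
    return math.prod(shape)


def forward_noise(x0, alpha_bar_t, rng):
    """DDPM-style forward process: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps, eps ~ N(0, 1).

    A latent diffusion model applies this to the latent z0 instead of to pixels,
    then trains a network to predict eps from the noisy latent.
    """
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar_t) * v + math.sqrt(1.0 - alpha_bar_t) * e
          for v, e in zip(x0, eps)]
    return xt, eps


ratio = num_elements(IMAGE_SHAPE) / num_elements(LATENT_SHAPE)
print(f"pixel values: {num_elements(IMAGE_SHAPE)}, "
      f"latent values: {num_elements(LATENT_SHAPE)}, ratio: {ratio:.0f}x")

rng = random.Random(0)
z0 = [0.5, -1.0, 2.0]  # a toy three-value "latent"
zt, eps = forward_noise(z0, alpha_bar_t=0.9, rng=rng)
```

Under these assumptions, each denoising step works on 16,384 values instead of 786,432, a 48x reduction.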

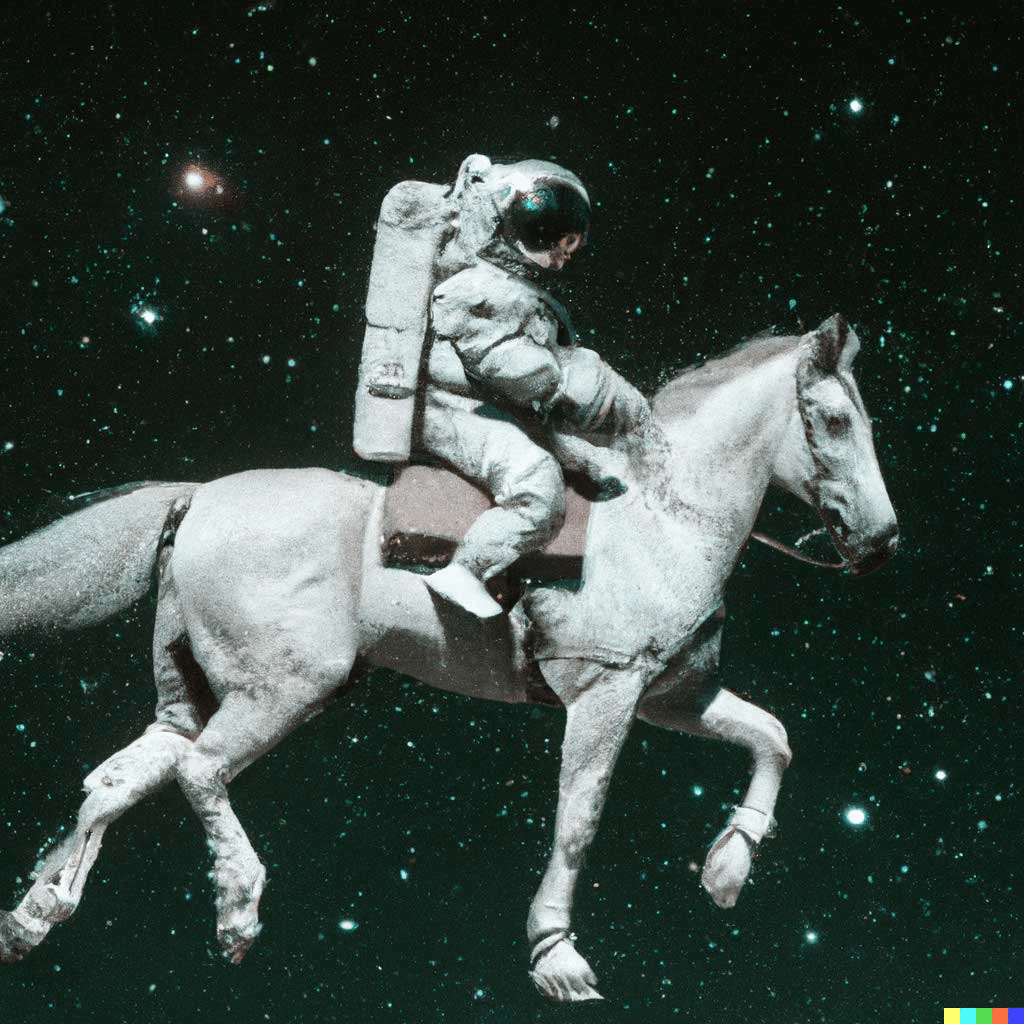

“A photograph of an astronaut riding a horse” by DALL-E 2

“Théâtre D’opéra Spatial”, which was generated with Midjourney, won first place in the digital arts category of the Colorado State Fair's fine arts competition.

Video-generating AI makes a scene

Generating video from text was unthinkable just a few years ago. That changed in 2022, when text-to-video models achieved impressive feats.

Meta made its latest venture into generative AI with Make-A-Video, announced in September 2022. About a week later, Google unveiled Imagen Video, which can generate videos at 24 frames per second. From just a few words or lines of text, it can produce unique videos filled with vivid scenes and characters. Citing concerns about misuse, Meta and Google have yet to release a public beta of their systems.

These synthetic videos are miles away from Hollywood-budget films, but they represent a groundbreaking development and pave the way for future generative video models.

A teddy bear washing dishes, generated by Imagen Video (Source)


NeRFs grow in maturity and versatility

Neural Radiance Fields (NeRF), introduced in 2020, opened the floodgates for synthesizing novel views of complex scenes. In 2022, researchers pushed NeRF forward on several fronts.
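
At the core of NeRF is volume rendering: a network predicts a density and a color at sample points along each camera ray, and these samples are composited into one pixel color. The sketch below implements that compositing step (the standard NeRF quadrature) for a single ray in plain Python. The network that would produce `sigmas` and `colors` is omitted, and the function name `render_ray` is illustrative.

```python
import math


def render_ray(sigmas, colors, deltas):
    """Composite samples along one ray with NeRF's volume-rendering quadrature.

    sigmas: density sigma_i at each sample (>= 0)
    colors: RGB tuple c_i at each sample
    deltas: distance delta_i from each sample to the next
    Returns C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i, where the
    transmittance T_i = exp(-sum_{j<i} sigma_j * delta_j).
    """
    rgb = [0.0, 0.0, 0.0]
    accumulated = 0.0  # running sum of sigma_j * delta_j over earlier samples
    for sigma, color, delta in zip(sigmas, colors, deltas):
        transmittance = math.exp(-accumulated)  # light surviving to this sample
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * color[k]
        accumulated += sigma * delta
    return tuple(rgb)
```

For example, a ray that first meets a dense red sample and then a dense green one renders as (almost exactly) red, because the red sample absorbs nearly all the light before it reaches the green one.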

Many papers revisited the fundamentals of view synthesis with NeRF, closing gaps in how the original method handled difficult cases. One such paper is NeRF in the Dark, which trains on noisy raw images to produce realistic scenes. Separately, Google's Mip-NeRF 360 handled unbounded scenes, where content extends in every direction and to any distance, a setting that plagued vanilla NeRF.

NeRF in the Dark (Source)

Results from Mip-NeRF 360 (Source)
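
One ingredient Mip-NeRF 360 uses to handle unbounded scenes is a scene contraction: space near the center is left unchanged, while distant space is squashed so that all of it, out to infinity, fits inside a ball of radius 2. The simplified sketch below applies the contraction to individual 3D points. The paper applies it to Gaussian regions rather than points, so treat this as an illustration of the mapping only.

```python
import math


def contract(point):
    """Mip-NeRF 360-style scene contraction, applied to a single 3D point.

    Points with norm <= 1 are returned unchanged. A point x with norm > 1 maps to
    (2 - 1/||x||) * (x / ||x||), so all of space lands inside a radius-2 ball.
    """
    norm = math.sqrt(sum(c * c for c in point))
    if norm <= 1.0:
        return tuple(point)
    scale = (2.0 - 1.0 / norm) / norm  # shrink factor applied to each coordinate
    return tuple(scale * c for c in point)
```

A point at distance 2 from the center moves to distance 1.5, and a point a million units away lands just inside the radius-2 boundary, so the model can allocate its capacity to a bounded region.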

Some other notable mentions include:

- Light Field Neural Rendering combined NeRF with a transformer architecture to generate high-quality renderings.

- Plenoxels drastically shortened training times by replacing NeRF's MLP with a sparse 3D voxel grid.

- BANMo creates 3D models of deformable objects from casual videos using a volumetric NeRF.
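
Plenoxels replaces the neural network with values stored at the vertices of a voxel grid and trilinearly interpolates them at each sample point. The simplified sketch below interpolates a single scalar (for example, a density) on a small dense grid stored as nested lists. Plenoxels itself uses a sparse grid and also interpolates spherical harmonic coefficients for view-dependent color, which this sketch omits; the function name `trilinear` is illustrative.

```python
import math


def trilinear(grid, x, y, z):
    """Trilinearly interpolate a value stored at the vertices of a dense 3D grid.

    grid[i][j][k] holds the value at integer vertex (i, j, k), and each axis must
    have at least 2 vertices. (x, y, z) is a continuous position in grid
    coordinates, assumed to lie inside the grid.
    """
    i0, j0, k0 = int(math.floor(x)), int(math.floor(y)), int(math.floor(z))
    # Clamp so the upper corner of the cell stays inside the grid at the far edge.
    i0 = min(i0, len(grid) - 2)
    j0 = min(j0, len(grid[0]) - 2)
    k0 = min(k0, len(grid[0][0]) - 2)
    fx, fy, fz = x - i0, y - j0, z - k0  # fractional position inside the cell
    value = 0.0
    for di in (0, 1):
        for dj in (0, 1):
            for dk in (0, 1):
                # Weight of each of the 8 cell corners.
                w = ((fx if di else 1 - fx) *
                     (fy if dj else 1 - fy) *
                     (fz if dk else 1 - fz))
                value += w * grid[i0 + di][j0 + dj][k0 + dk]
    return value
```

Because each lookup is just a weighted sum of eight stored values, gradients flow directly to the grid entries during training, which is a large part of why this approach optimizes so much faster than querying an MLP.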

New synthetic datasets complement real-world datasets

Real-world datasets are rife with privacy and safety concerns. Dubbed the silver bullet for these troubles, synthetic datasets are gaining steam. Today, it is common practice to combine real-world and synthetic datasets to train deep learning models.
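
A simple way to combine the two sources is to draw each training sample from the synthetic pool with a chosen probability and from the real pool otherwise. The sketch below shows this idea with plain lists. The function name `mix_datasets` and the `synthetic_fraction` parameter are hypothetical, and in practice the right ratio is something to tune per task.

```python
import random


def mix_datasets(real, synthetic, synthetic_fraction, size, seed=0):
    """Build a training list in which about `synthetic_fraction` of samples are synthetic.

    Each draw picks the synthetic pool with probability `synthetic_fraction`,
    otherwise the real pool, then samples uniformly (with replacement) from it.
    """
    if not 0.0 <= synthetic_fraction <= 1.0:
        raise ValueError("synthetic_fraction must be in [0, 1]")
    rng = random.Random(seed)  # seeded so the mix is reproducible
    mixed = []
    for _ in range(size):
        pool = synthetic if rng.random() < synthetic_fraction else real
        mixed.append(rng.choice(pool))
    return mixed
```

For example, `mix_datasets(real_images, synthetic_images, 0.3, 10_000)` would yield roughly 3,000 synthetic and 7,000 real samples.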

In 2022, we saw the release of several major synthetic image datasets:

- The Real-World 3D Object Understanding dataset features an impressive catalog of 150,000 3D models of indoor household objects.

- SHIFT is a synthetic dataset aimed at training autonomous vehicles under different weather and lighting conditions.

- DigiFace-1M has 1.2 million face images, making it the largest-scale synthetic dataset for face recognition to date.

- ArtiBoost boosts hand-object pose estimation with synthetic training data featuring diverse poses, object types, and camera viewpoints.

DigiFace-1M (Source)

Transformers gain prominence in computer vision modeling

The transformer architecture found tremendous success in natural language processing (NLP) tasks. Researchers first adapted it to images with the vision transformer (ViT) in 2020, and in 2022 transformers gained further ground across vision tasks.
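
To make the idea concrete, here is a minimal sketch of how a ViT turns an image into a sequence of tokens: the image is cut into fixed-size, non-overlapping patches, and each patch is flattened. The function name `patchify` is illustrative. The learned linear projection and position embeddings that follow in a real ViT are omitted, and the image is a plain nested list rather than a tensor.

```python
def patchify(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened, non-overlapping patches.

    Each returned patch holds patch_size * patch_size * C values. In a ViT, each
    flattened patch becomes one input token after a learned linear projection.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):  # patches in row-major order
        for left in range(0, width, patch_size):
            patch = []
            for r in range(top, top + patch_size):
                for c in range(left, left + patch_size):
                    patch.extend(image[r][c])  # append this pixel's C channel values
            patches.append(patch)
    return patches
```

With the common ViT-Base/16 settings (a 224x224 RGB input and 16x16 patches), this produces 14 x 14 = 196 tokens of 16 x 16 x 3 = 768 values each, so self-attention operates over 196 tokens rather than 50,176 pixels.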

Here are some highlights:

- Microsoft released EsViT (Efficient Self-supervised Vision Transformers), which beat existing cutting-edge self-supervised methods in top-1 ImageNet accuracy.

- By testing vision transformers against common corruptions, distribution shifts, and adversarial examples, researchers concluded that vision transformers are robust learners.

- Fine-grained visual classification, the task of distinguishing subcategories of objects (such as bird species or car models), got a breakthrough with TransFG, a transformer-based architecture.

Synthetic technology gathers steam

Synthetic data generation advanced on many fronts in 2022. Here are some notable examples:

- Task2Sim generates synthetic pre-training data that is optimized for specific downstream tasks.

- Apple released GAUDI, a generative model that captures the distribution of complex, realistic 3D scenes.

- StyleGAN-V promises to generate continuous videos at the training cost, image quality, and perks of StyleGAN2.

These advances lay the groundwork for much more to come. There's no doubt that 2023 will bring even more exciting technology!