300W Dataset: Scaling a Face Dataset from 600 to 366K Images

What Is the 300W Dataset?

300-W is an image dataset that focuses on face images. It contains 300 indoor images and 300 outdoor images with human faces, captured in the wild. It covers a variety of identities, facial expressions, lighting conditions, poses, occlusions, and face sizes.

Images were downloaded from google.com using search terms like “party”, “meeting”, “protest”, and “celebrity”. The goal of 300W creators was to create a dataset that tests an algorithm’s availability to analyze faces in unconstrained, real world situations.

Compared to similar datasets, 300W has a higher proportion of partially occluded images, and more unusual expressions such as ‘surprise’ and ‘scream’ (as opposed to the more common ‘neutral’ or ‘smile’). Images were annotated with 68 point markers using a semi-automatic method. The images are between 48 KB and 2 MB in size.

In a significant development, two extended versions of the original 300W dataset were introduced, called 300W-LP and 300W-LPA. What is special about these datasets is that they started from the same 300W images, but used a generative technique to create multiple variations of each face images, with automatically generated annotations.

This made it possible to scale up the dataset from only 600 images in the original dataset to 61K images in 300-LP and 366K images in 300-LPA.

In This Article

The 300-W Challenge

The 300-W Challenge was held in the years 2013 and 2014. It was the first time an event was organized specifically to benchmark the automated facial landmark recognition efforts. It focused on identifying facial landmarks in real-world face image datasets.

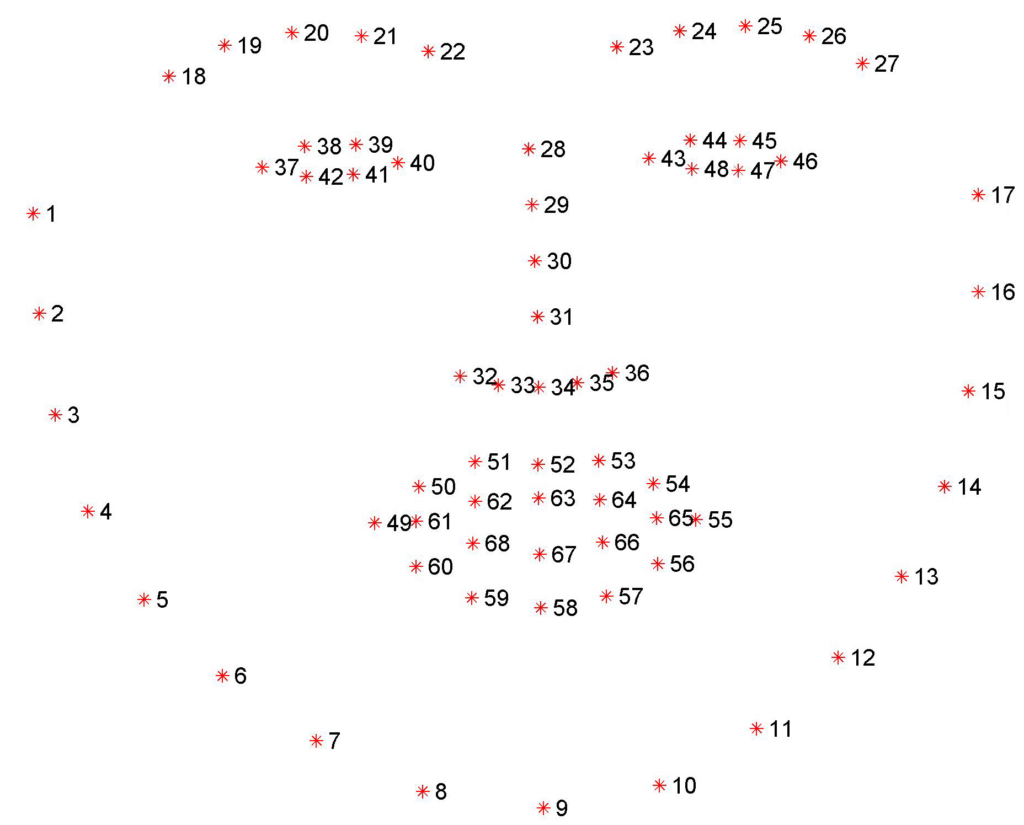

The LFPW, AFW, HELEN, and XM2VTS datasets were re-annotated with the markup shown in the following image. There were also annotations for an additional 135 images of faces with different expressions and poses (the iBUG training set).

Figure 1, Image Source: Imperial College of London

Participants in the 300-W challenge tested their algorithms on the 300-W test dataset. Submissions were evaluated using standard bounding-box initialization when running each trained algorithm on the 300-W test dataset. Performance was evaluated based on the 68-point markup in the above image, and 51 points corresponding to the borderless points.

Winners of the latest 300W competition were:

- J. Deng, et. al, “Multi-view, multi-scale and multi-component cascade shape regression.”

- H. Fan, et. al, “Approaching human level facial landmark localization by deep learning.”

300W Extended Versions

300VW Dataset

Although there are comprehensive benchmarks for locating facial landmarks in still images, efforts to benchmark facial landmarks in video are very limited. 300 Videos in the Wild (300-VW) is a dataset for evaluating landmark tracking algorithms on videos containing faces. It contains 114 videos.

Authors of the dataset collected videos of faces recorded in the wild. Each video is approximately 1 minute (25-30 fps) in length. All frames are annotated with the same 68 facial landmarks used in the 300W dataset.

300W-LP Dataset (61K Images)

The 300W-LP (Large Pose) dataset is based on the original 300W images, creating multiple variations with different poses, using several algorithmic techniques.

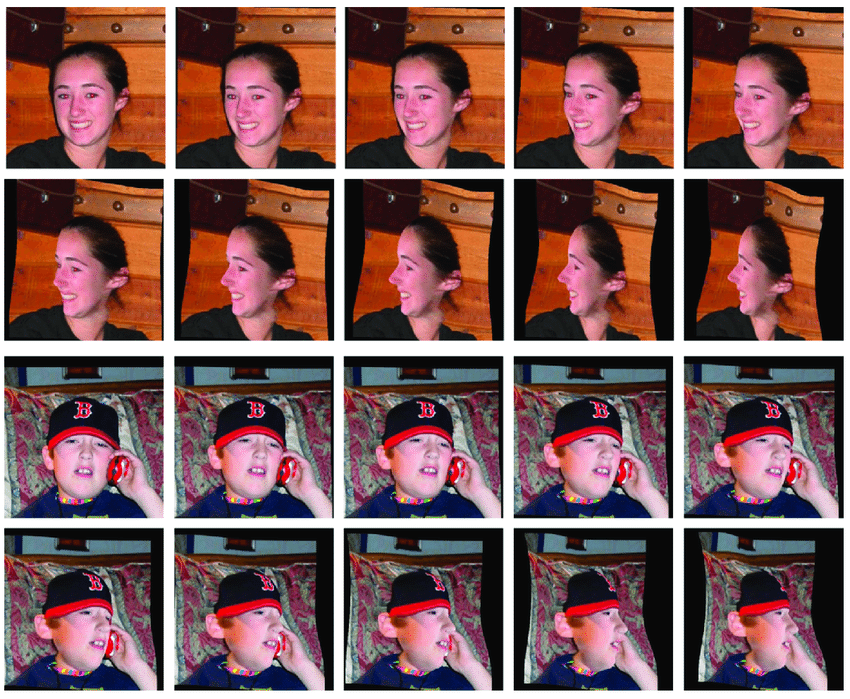

Source: Research Gate

300W-LP extends 300W to multiple databases based on alignment techniques including AFW, LFPW, HELEN, IBUG, and XM2VTS. This results in 61,225 samples derived from the original 300W images. All the images are marked with the same 68 landmarks.

This dataset can be used as a training set for computer vision tasks such as recognizing facial attributes and locating landmarks (or parts of a face).

300W-LPA Dataset (366K Images)

The 300W-LPA (Large Pose Augmented) dataset builds on 300W-LP, augmenting it with more data by rotating each image in pitch, 5 degrees at a time, up to 25 degrees in both directions. The dataset contains 366,564 photos of 59,439 individuals.

The following image illustrates the difference between the original 300W dataset and 300W-LP/LPA.

The Technology Behind 300W-LP/LPA Datasets: Multiplying Face Datasets

A unique feature of the 300W-LP/LPA datasets is that they use automation to create multiple versions of an original, annotated face image. This addresses major challenges of face datasets:

- Small number of annotated data samples

- Low accuracy of human annotations

- Cost and difficulty of scaling the dataset by adding more annotated images

In a paper by Sagonas, et. al (2013), the authors suggest using generative models to create new instances of existing face images. These models can take an image and create a different view—for example, take an image of a face looking sideways and generate a frontal image. This can create multiple versions of the same face image, rotated or tilted in various directions. It is then possible to automatically generate accurate image annotations for the new images.

One approach to perform this type of transformation is active appearance models (AAM). However, these models are difficult to fit and do not generalize well to unseen images. The authors showed that active orientation models (AOMs) can provide much better performance. They proposed a tool based on AOM which performs semi-automatic annotation for variations of existing face images. This tool was the basis for the extended LP/LPA datasets.