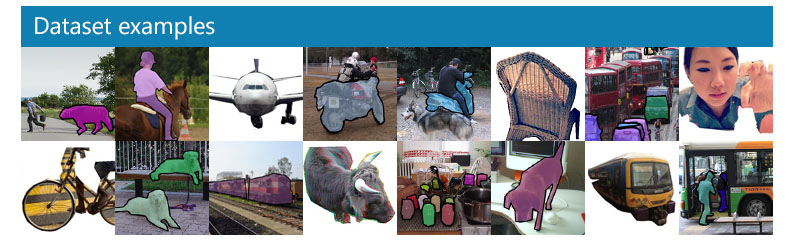

MS COCO Dataset: Using it in Your Computer Vision Projects

What is MS COCO?

MS COCO (Microsoft Common Objects in Context) is a large-scale image dataset containing 328,000 images of everyday objects and humans. The dataset contains annotations you can use to train machine learning models to recognize, label, and describe objects.

MC COCO provides the following types of annotations:

- Object detection—coordinates of bounding boxes and full segmentation masks for 80 categories of objects

- Captioning—natural language descriptions of each image.

- Keypoints—the dataset has more than 200,000 images containing over 250,000 humans, labeled with keypoints such as right eye, nose, left hip.

- “Stuff image” segmentation—pixel maps of 91 categories of “stuff”—amorphous background regions like walls, sky, or grass.

- Panoptic—full scene segmentation, indicating objects in the image according to 80 categories of “things” (cat, pen, fridge, etc.) and 91 “stuff” categories (road, sky, water, etc.).

- Dense pose—the dataset has more than 39,000 images containing over 56,000 humans, with every labeled person annotated with an instance id and a mapping between pixels representing that person’s body and a template 3D model.

In This Article

How to Use the COCO Dataset

The MS COCO dataset is maintained by a team of contributors, and sponsored by Microsoft, Facebook, and other organizations. It is provided under a Creative Commons Attribution 4.0 License. According to this license you are allowed to:

- Distribute the dataset

- Tweak or modify images

- Remix images with each other or external images

- Use it in any type of work, including commercial projects, crediting the original creators

MS COCO is composed of several datasets created in different years—each one focuses on a different computer vision task, such as:

- Object detection

- Keypoint tracking

- Image captioning

- “Stuff” detection

You can download these datasets from the official project website. The project team recommends:

- Downloading the full dataset using the gsutil rsync utility, provided free by Google Cloud.

- Using the COCO API, provided in Lua, MATLAB, and Python, to set up the dataset.

- Using FiftyOne, an open source tool, to work with the dataset for computer vision projects.

MS COCO Dataset Use Cases

There are two primary use cases for the MS COCO dataset:

- Training computer vision models—it is not sufficient to train computer vision algorithms on professionally shot images, because they do not represent real world conditions. The MS COCO dataset provides a wide range of realistic images, showing disorganized scenes with various backgrounds, overlapping objects, etc. This makes it possible to train models on objects and people in realistic settings.

- Comparing AI models—in order to accurately compare the performance of multiple computer vision datasets, these models must be trained on a large-scale, standardized dataset. COCO is exactly that dataset, providing standardized, accurately annotated images, which create a level playing field for algorithms.

COCO Dataset Format

The COCO dataset uses a JSON format that provides information about each dataset and all the images within it—including image licenses, categories used to classify objects in the images, raw image data such as pixel size, and the all-important annotations of objects in the images.

Dataset Info

The info section provides general information about each dataset. There are several datasets provided as part of the COCO project. Below is an example of dataset information.

“info”: {

“year”: 2022,

“version”: 1.3,

“description:” “Vehicles dataset”,

“contributor”: “John Smith”,

“url”: “http://vehicles-dataset.org”,

“date_created”: “2022/02/01”

}

Licenses

The licenses section provides details about licenses of images included in the dataset, so you can understand how you are allowed to use them in your work. Below is an example of license info.

“licenses”: [{

“id”: 1,

“name”: “Free license”,

“url:” “http://vehicles-dataset.org”

}]

Categories

The categories section shows main categories (called supercategories) and sub-categories (called categories) into which images were classified. This can allow you to select a subset of the data that is relevant for your computer vision project. Below is an example of category data.

“categories”: [

{“id”: 1,

“name”: ”car”,

“supercategory”: “road vehicles”,

“isthing”: 1,

“color”: [1,0,0]},

{“id”: 2,

“name”: ”boat”,

“supercategory”: “sea vehicles”,

“isthing”: 1,

“color”: [2,0,0]}

]

Images

The images section provides raw image information—such as the filename and image size—not including image annotations. Below is an example of image data.

“image”: [{

“id”: 1342334,

“width”: 640,

“height”: 640,

“file_name: “ford-t.jpg”,

“license”: 1,

“date_captured”: “2022-02-01 15:13”

}]

Generate Synthetic Data with Our New Free Trial. Start now!

Annotations

The annotations section provides a list of all object annotations for every image in the dataset. The iscrowd field indicates if there are several objects of the same type included in the annotation. The bbox field provides the coordinates of the bounding box. The category_id field indicates to which category this object was classified.

Below are two examples of annotation data. To learn more see the official documentation for the COCO data format.

“annotations”: [{

”segmentation”:

{

“counts”: [34, 55, 10, 71]

“size”: [240, 480]

},

“area”: 600.4,

“iscrowd”: 1,

“Image_id:” 122214,

“bbox”: [473.05, 395.45, 38.65, 28.92],

“category_id”: 15,

“id”: 934

}]

“annotations”: [{

”segmentation”: [[34, 55, 10, 71, 76, 23, 98, 43, 11, 8]],

“area”: 600.4,

“iscrowd”: 1,

“Image_id:” 122214,

“bbox”: [473.05, 395.45, 38.65, 28.92],

“category_id”: 15,

“id”: 934

}]

Bias in the COCO Dataset

Multiple studies have been conducted on biases in image datasets, including the MS COCO dataset which is very widely used in computer vision models. In particular, research by [A, B, and C] showed that the MS COCO dataset has several significant biases:

- More light-skinned individuals—the dataset has 7.5x more images of light-skinned people compared to dark-skinned people, 2x more males than females, and even less representation of dark-skinned females.

- Racial terms in image captions—many of the image captions contain racial terms or even racial slurs. This could cause computer vision models to describe individuals in a socially inappropriate way.

- Model performance favors light-skinned individuals—several studies cited by the researchers have shown that image captioning systems built based on the COCO dataset have higher accuracy for light-skinned individuals in tasks like facial recognition and pedestrian detection.

- Racial bias of image context—lighter skinned people tend to appear indoor with furniture showing in the image, while dark-skinned people tend to appear outdoors and have vehicles showing in the image.

The researchers explain that these biases make their way into datasets like MS COCO through the initial selection of images, manual captions written by humans (who have their own biases), and automated captions, which are inspired by the manual captions.

Biases in MS COCO and similar datasets could have a serious impact on society, because of the prevalence of computer vision applications:

- Automated image tagging and image search applications can have racial and gender bias.

- Computer vision models can have different accuracy rates for people of different races or genders.

While some of these biases stem from the way datasets like MS COCO were assembled, some of them are a result of real-life disparities. For example, if a certain combination of race and gender is a small part of the population, naturally, only a few images of that type of person will appear in the dataset, making it difficult for computer vision algorithms to recognize them.