13 Computer Vision Algorithms You Should Know About

What Is a Computer Vision Algorithm?

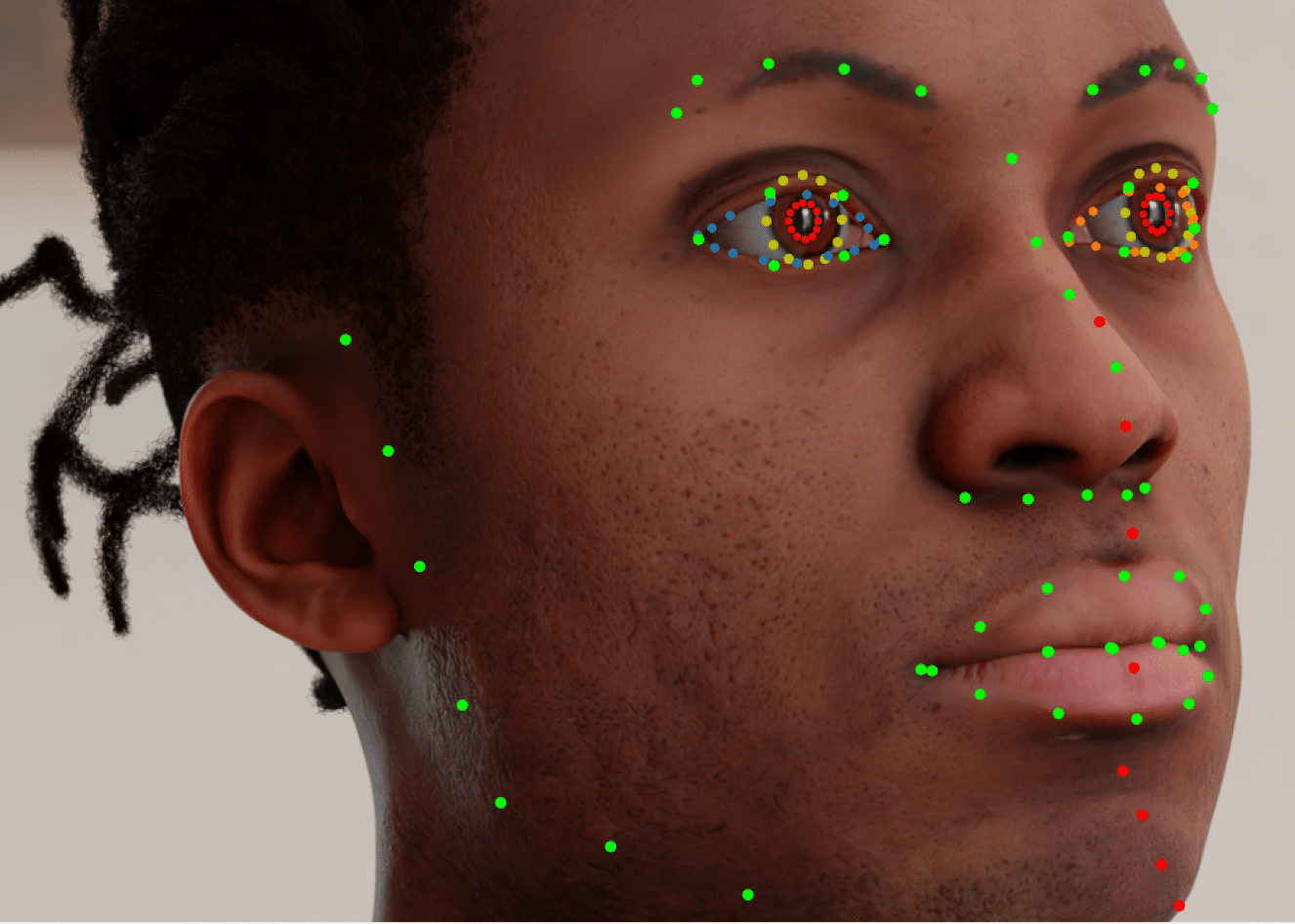

A computer vision algorithm is a set of instructions that a computer uses to interpret and understand visual data from the world around it. These algorithms are used to analyze and process images and videos, and can be used for a wide range of applications, such as object recognition, image classification, facial recognition, and video tracking.

The Evolution of Computer Vision Algorithms

The field of computer vision has evolved significantly over the years, with new algorithms and techniques being developed to improve the accuracy and efficiency of image and video analysis. Some key milestones in the evolution of computer vision algorithms include:

- Early computer vision: In the 1960s and 1970s, the first computer vision algorithms were developed, primarily focusing on image processing and pattern recognition techniques. These early algorithms were limited in their ability to recognize and understand complex images and scenes.

- Machine learning: In the 1980s and 1990s, machine learning techniques began to be applied to computer vision, allowing algorithms to learn from examples and improve their performance over time. This led to the development of algorithms for tasks such as object recognition and image classification.

- Deep learning: In the 2010s, deep learning techniques came to dominate computer vision. They use multi-layer neural networks to learn patterns in images and videos directly from data. These algorithms have significantly improved the accuracy of computer vision tasks such as object detection and facial recognition.

- Real-time systems: In recent years, advances in hardware and software have allowed computer vision algorithms to run in real time, making them suitable for applications such as self-driving cars, drones, and robotics.

With the advancements in computer vision, we are seeing more and more real-world applications, such as medical imaging, retail, security, and entertainment. Computer vision algorithms are becoming better at understanding the context of an image and performing more complex tasks such as scene understanding, semantic segmentation and action recognition.

Object Detection and Image Segmentation Algorithms

These algorithms locate, describe, or classify objects and regions within an image. They support applications such as face recognition, where the computer vision model must identify a specific object.

1. SIFT

The Scale-Invariant Feature Transform (SIFT) algorithm is a computer vision algorithm used for identifying and matching local features, such as corners or blobs, in images. It was first described in a paper by David Lowe in 1999. The SIFT algorithm is invariant to image scale and rotation.

SIFT is widely used in image matching, object recognition, and image registration applications. Its commercial use was long restricted by a patent held by the University of British Columbia, but that patent expired in 2020.

2. SURF

The Speeded Up Robust Features (SURF) algorithm is a feature detection and description method for images. It is a robust and fast algorithm that is often used in computer vision applications, such as object recognition and image registration.

SURF is considered to be a “speeded up” version of the Scale-Invariant Feature Transform (SIFT) algorithm. Its computational efficiency makes it more useful than SIFT for real-time applications.

3. Viola-Jones

Viola-Jones is a computer vision algorithm for object detection, specifically for detecting faces in images. It was developed by Paul Viola and Michael Jones in 2001. The algorithm uses a technique called “integral image” that allows for fast computation of Haar features, which are used to match features of typical human faces.

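The algorithm also uses a “cascade of classifiers”: a sequence of stages, each built from a group of Haar-like features, that decides whether a face is present in an image region. Regions that fail an early stage are discarded immediately, so most of the computation is spent on the few regions that look promising. This makes the Viola-Jones algorithm particularly efficient, and it is widely used in applications such as security systems and photo tagging, where computational power is limited.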
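The integral image idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the original implementation: the function names and the tiny 4×4 test image are assumptions made for the example.

```python
import numpy as np

def integral_image(img):
    """Summed-area table, padded with a zero row and column at the top/left."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using only four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom, right] - ii[top, right] - ii[bottom, left] + ii[top, left]

def two_rect_haar(ii, top, left, height, width):
    """Two-rectangle Haar-like feature: left half minus right half."""
    half = width // 2
    left_sum = rect_sum(ii, top, left, height, half)
    right_sum = rect_sum(ii, top, left + half, height, half)
    return left_sum - right_sum

# Hypothetical 4x4 "image" with pixel values 0..15
img = np.arange(16, dtype=np.int64).reshape(4, 4)
ii = integral_image(img)
feature = two_rect_haar(ii, top=0, left=0, height=4, width=4)
```

Once the table is built, the cost of a rectangle sum does not depend on the rectangle's size, which is what makes evaluating many Haar-like features per window affordable.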

4. Eigenfaces

Eigenfaces is a computer vision algorithm that was developed in the early 1990s by researchers at MIT to recognize faces in images. The algorithm is based on the concept of eigenvectors.

The algorithm first performs Principal Component Analysis (PCA) on a large set of face images. The resulting principal components are then used as a set of “eigenfaces”. The basic idea is that any face can be approximated as a linear combination of these eigenfaces, and the coefficients of the linear combination can be used as a compact feature vector for the face.
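As a rough sketch of this idea, the snippet below computes eigenfaces with an SVD-based PCA in NumPy. The random 8×8 "faces", the choice of `k = 5`, and the helper names `project` and `reconstruct` are all assumptions for illustration; a real system would use aligned face photographs.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical data: 20 "face images", each an 8x8 image flattened to 64 values
faces = rng.random((20, 64))

# PCA: center the data, then take the top right-singular vectors
mean_face = faces.mean(axis=0)
centered = faces - mean_face
_, _, vt = np.linalg.svd(centered, full_matrices=False)

k = 5
eigenfaces = vt[:k]  # each row is one eigenface (a principal direction)

def project(face):
    """Coefficients of a face in the eigenface basis (its feature vector)."""
    return eigenfaces @ (face - mean_face)

def reconstruct(weights):
    """Approximate a face as the mean face plus a linear combination."""
    return mean_face + weights @ eigenfaces

weights = project(faces[0])
approximation = reconstruct(weights)
```

Two faces can then be compared by the distance between their weight vectors rather than between raw pixels.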

5. Histogram of Oriented Gradients (HOG)

The histogram of oriented gradients (HOG) is a feature descriptor used in computer vision for object detection. It is used to represent the shape of an object by encoding the distribution of intensity gradients or edge directions within an image.

The basic idea behind HOG is to divide an image into small connected regions called cells, typically 8×8 pixels, and then compute a histogram of gradient orientations for each cell. The histograms for all the cells in the image are then concatenated to create a feature vector for the entire image. This feature vector captures information about the object’s shape and texture, which can then be used as input to a machine learning algorithm for object detection.
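The per-cell histogram step might look like the following simplified sketch. It assumes unsigned gradients split into 9 orientation bins and omits the bin interpolation and block normalization that full HOG implementations typically add; `hog_cells` is a hypothetical name.

```python
import numpy as np

def hog_cells(img, cell=8, bins=9):
    """Concatenated per-cell histograms of gradient orientation."""
    img = img.astype(np.float64)
    gy, gx = np.gradient(img)                  # derivatives along rows, columns
    magnitude = np.hypot(gx, gy)
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0   # unsigned orientation
    bin_idx = np.minimum((angle / (180.0 / bins)).astype(int), bins - 1)

    rows, cols = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((rows, cols, bins))
    for r in range(rows):
        for c in range(cols):
            window = (slice(r * cell, (r + 1) * cell),
                      slice(c * cell, (c + 1) * cell))
            # Each pixel votes for its orientation bin, weighted by magnitude
            hist[r, c] = np.bincount(bin_idx[window].ravel(),
                                     weights=magnitude[window].ravel(),
                                     minlength=bins)
    return hist.ravel()

# Hypothetical 16x16 image whose brightness increases from left to right
ramp = np.tile(np.arange(16), (16, 1))
features = hog_cells(ramp)
```

For this ramp image every gradient points horizontally, so all of the votes in each cell land in the first orientation bin.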

6. YOLO

YOLO (You Only Look Once) is a computer vision algorithm used for object detection in images and videos. It processes an image and predicts the objects within it in a single pass, rather than requiring multiple passes through the image, as is the case with some other object detection algorithms.

YOLO uses a convolutional neural network (CNN) to analyze the image and make predictions about the objects within it. It divides the image into a grid of cells. If the center of an object falls into a grid cell, then that grid cell is responsible for detecting that object. Each grid cell predicts a fixed number of bounding boxes, and produces confidence scores for those boxes. This allows YOLO to make predictions about multiple objects within the same image.
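The grid assignment rule can be illustrated without any neural network. The sketch below assumes a 7×7 grid and a 448×448 image, and it encodes the box center relative to its cell; `encode_box` is a hypothetical helper, and the exact target encoding varies between YOLO versions.

```python
def encode_box(box, image_w, image_h, grid=7):
    """Map a pixel-space box (x_min, y_min, x_max, y_max) to its grid cell.

    Returns (row, col, x_offset, y_offset, width, height). The offsets give
    the box center's position inside the cell (0 to 1); width and height
    are fractions of the whole image.
    """
    x_min, y_min, x_max, y_max = box
    center_x = (x_min + x_max) / 2
    center_y = (y_min + y_max) / 2
    cell_w = image_w / grid
    cell_h = image_h / grid
    # The cell containing the box center is responsible for the object
    col = min(int(center_x // cell_w), grid - 1)
    row = min(int(center_y // cell_h), grid - 1)
    return (row, col,
            center_x / cell_w - col, center_y / cell_h - row,
            (x_max - x_min) / image_w, (y_max - y_min) / image_h)

# Hypothetical object occupying pixels (100, 200) to (200, 300)
target = encode_box((100, 200, 200, 300), image_w=448, image_h=448)
```

Here the box center is (150, 250), which falls in row 3, column 2 of the grid, so that cell is the one trained to predict this object.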

7. ResNet

ResNet (short for Residual Network) is a deep convolutional neural network architecture that was developed by researchers at Microsoft in 2015. It is known for its performance on image classification and object detection tasks.

The key innovation in ResNet is the use of “residual connections” between layers. This enables the network to better handle the vanishing gradient problem, which is a common issue in very deep neural networks.
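A residual connection simply adds a block's input back to its output. The NumPy sketch below uses dense matrix multiplications as a stand-in for the convolutions of a real ResNet block; the shapes and weight scales are assumptions chosen for illustration.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), where F is a small two-layer transform."""
    residual = relu(x @ w1) @ w2   # F(x): what the block has to learn
    return relu(residual + x)      # skip connection adds the input back

rng = np.random.default_rng(0)
x = rng.random((4, 16))                    # hypothetical batch of 4 feature vectors
w1 = 0.01 * rng.normal(size=(16, 16))
w2 = 0.01 * rng.normal(size=(16, 16))
y = residual_block(x, w1, w2)
```

If the weights are zero, the block passes non-negative inputs through unchanged, so a deeper network can start from an identity mapping, and gradients can flow through the addition without being scaled by the block's weights.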

8. Graph Cut Optimization

Graph cut algorithms are most commonly used in image segmentation to separate an image into multiple regions or segments based on color or texture.

A graph cut partitions the vertices (also called nodes) of a graph into two or more sets. To segment an image, a network flow graph is first built from the input image, typically with one vertex per pixel and weighted edges between neighboring pixels. The goal is to minimize the total weight of the edges that need to be cut, while ensuring that the vertices in each subset satisfy certain conditions.
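For the two-label case, a minimum cut can be found by computing a maximum flow. The sketch below uses the Edmonds-Karp algorithm on a hypothetical one-dimensional "image" of four pixels; the capacities (brightness for the foreground link, 10 minus brightness for the background link, and 3 between neighbors) are assumptions chosen for the example.

```python
from collections import deque

def min_cut(capacity, source, sink):
    """Edmonds-Karp max flow. Returns (cut value, nodes on the source side)."""
    n = len(capacity)
    residual = [row[:] for row in capacity]
    flow = 0
    while True:
        # Breadth-first search for an augmenting path in the residual graph
        parent = [-1] * n
        parent[source] = source
        queue = deque([source])
        while queue and parent[sink] == -1:
            u = queue.popleft()
            for v in range(n):
                if parent[v] == -1 and residual[u][v] > 0:
                    parent[v] = u
                    queue.append(v)
        if parent[sink] == -1:
            break  # no path left: the flow is maximal
        # Find the bottleneck capacity along the path, then push flow
        bottleneck, v = float("inf"), sink
        while v != source:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        v = sink
        while v != source:
            residual[parent[v]][v] -= bottleneck
            residual[v][parent[v]] += bottleneck
            v = parent[v]
        flow += bottleneck
    # Nodes still reachable from the source form the source side of the cut
    return flow, {v for v in range(n) if parent[v] != -1}

intensities = [9, 8, 2, 1]            # hypothetical pixel brightness, 0 to 10
n = len(intensities) + 2
source, sink = 0, n - 1               # source = foreground, sink = background
capacity = [[0] * n for _ in range(n)]
for i, value in enumerate(intensities, start=1):
    capacity[source][i] = value       # cost of labeling pixel i background
    capacity[i][sink] = 10 - value    # cost of labeling pixel i foreground
for i in range(1, len(intensities)):
    capacity[i][i + 1] = capacity[i + 1][i] = 3   # smoothness between neighbors

cut_value, foreground = min_cut(capacity, source, sink)
```

In this example the two bright pixels stay connected to the source (foreground) and the two dark pixels end up on the sink side, at the cost of cutting a single neighbor edge.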

9. Adaptive Image Thresholding

Thresholding segments an image by setting all pixels whose intensity values are above a threshold to a foreground value and all the remaining pixels to a background value. The basic idea of adaptive thresholding is to use different threshold values for different regions of the image, rather than a single global threshold value for the entire image. This allows the algorithm to take into account variations in the image’s lighting and texture and produce a more accurate binary representation of the image.
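One common variant compares each pixel with the mean of its local neighborhood. The sketch below is a minimal NumPy version of that idea; the 3×3 block size, the `offset` parameter, and the synthetic test image are assumptions for illustration.

```python
import numpy as np

def adaptive_threshold(img, block=3, offset=0.0):
    """Mark a pixel as foreground if it exceeds its local mean minus offset."""
    img = img.astype(np.float64)
    pad = block // 2
    padded = np.pad(img, pad, mode="edge")
    # Sum the block x block shifted copies to get each pixel's neighborhood sum
    local_mean = np.zeros_like(img)
    for dy in range(block):
        for dx in range(block):
            local_mean += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    local_mean /= block * block
    return (img > local_mean - offset).astype(np.uint8)

# Hypothetical unevenly lit image: dim left half, bright right half,
# with one slightly brighter spot in each half
img = np.full((5, 10), 10.0)
img[:, 5:] = 100.0
img[2, 2] = 30.0
img[2, 7] = 130.0
binary = adaptive_threshold(img)
```

A global threshold of 50 would label the entire right half as foreground and miss the spot on the left, while the local comparison picks out both spots. Pixels along the boundary between the two halves are also marked, because they are brighter than their local mean.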

Motion Estimation Algorithms

These algorithms track the movements of an object in a video or image sequence.

10. Lucas-Kanade

The Lucas-Kanade algorithm is a widely used method for optical flow estimation, which is the process of finding pixel-wise motions between consecutive images. The algorithm is based on the assumption that the optical flow is constant within the local neighborhood of each pixel. It also relies on the brightness constancy assumption, meaning that the pixels in the image can move around, but their brightness does not change.

The Lucas-Kanade algorithm estimates the displacement of a neighborhood by looking at changes in pixel intensity. Such changes can be explained by the known intensity gradients of the image in that neighborhood. Lucas-Kanade uses least-squares estimation to find the single flow vector that best fits all the pixels in the neighborhood.
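For a single neighborhood, this least-squares step amounts to solving Ix·u + Iy·v = −It over all pixels in the window. The sketch below treats a whole small frame as one window; the synthetic pattern and the shift of (0.2, 0.1) pixels are assumptions made so that the true flow is known.

```python
import numpy as np

def lucas_kanade_window(frame1, frame2):
    """Estimate one flow vector (u, v) for a window via least squares."""
    f1 = frame1.astype(np.float64)
    f2 = frame2.astype(np.float64)
    iy, ix = np.gradient(f1)          # spatial gradients along rows, columns
    it = f2 - f1                      # temporal gradient
    # One equation per pixel: ix * u + iy * v = -it
    a = np.stack([ix.ravel(), iy.ravel()], axis=1)
    b = -it.ravel()
    flow, *_ = np.linalg.lstsq(a, b, rcond=None)
    return flow

def pattern(x, y):
    """Hypothetical smooth brightness pattern."""
    return np.sin(0.3 * x) + np.cos(0.2 * y)

ys, xs = np.mgrid[0:20, 0:20].astype(np.float64)
frame1 = pattern(xs, ys)
frame2 = pattern(xs - 0.2, ys - 0.1)   # same pattern moved by (0.2, 0.1) pixels
u, v = lucas_kanade_window(frame1, frame2)
```

The estimate is only approximate, because the method linearizes the image around each pixel; it works best for small motions, which is why practical implementations often combine it with image pyramids.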

11. Kalman Filter

The Kalman filter is a mathematical algorithm that is commonly used to estimate the state of a system based on a series of noisy measurements. It is a recursive algorithm that alternates between two steps. A prediction step projects the current state estimate forward in time. An “update” step then compares that prediction to a new measurement and refines the estimate, weighting each according to its uncertainty.

Kalman filters are commonly used in computer vision applications, in particular for object tracking tasks. Object tracking algorithms draw a bounding box around specific objects in an image, and attempt to accurately redraw this bounding box in subsequent frames as the object moves. Kalman filters can be used to predict the current and future positions of an object, even when it is hidden by obstacles (known as occlusion).
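The predict and update cycle can be sketched for a one-dimensional tracker with a constant-velocity model. In this illustration a measurement of `None` stands for an occluded frame; the noise values, the initial uncertainty, and the function name `kalman_track` are assumptions.

```python
import numpy as np

def kalman_track(measurements, dt=1.0, process_var=1e-3, meas_var=1.0):
    """Track a 1-D position; the state is [position, velocity]."""
    f = np.array([[1.0, dt], [0.0, 1.0]])   # constant-velocity motion model
    h = np.array([[1.0, 0.0]])              # only the position is measured
    q = process_var * np.eye(2)             # process noise covariance
    r = np.array([[meas_var]])              # measurement noise covariance
    x = np.zeros((2, 1))                    # initial state guess
    p = 100.0 * np.eye(2)                   # large initial uncertainty
    estimates = []
    for z in measurements:
        # Predict: project the state and its uncertainty one step forward
        x = f @ x
        p = f @ p @ f.T + q
        if z is not None:
            # Update: blend the prediction with the new measurement
            innovation = np.array([[z]]) - h @ x
            s = h @ p @ h.T + r
            gain = p @ h.T @ np.linalg.inv(s)
            x = x + gain @ innovation
            p = (np.eye(2) - gain @ h) @ p
        estimates.append(float(x[0, 0]))
    return estimates

# Hypothetical object moving one unit per frame, occluded in frames 6 and 7
measurements = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, None, None, 8.0, 9.0]
estimates = kalman_track(measurements)
```

During the occluded frames the filter skips the update step and relies on its motion model alone, so the estimated position keeps advancing at the learned velocity.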

12. Mean Shift Algorithm

The mean shift algorithm is a non-parametric, density-based clustering method for finding the modes (the regions of highest density) in a dataset. In image applications, each pixel is first assigned an initial mean, which is the pixel itself. The algorithm then places a window around the current mean and calculates the new mean of all the points within that window. This process repeats until the position of the mean no longer changes significantly. The mean shift algorithm can also be extended to classify the data points into different clusters based on their final positions.
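A minimal version with a flat (uniform) window can be written as follows. The bandwidth, the stopping tolerance, and the six sample points are assumptions for the example; practical implementations often use a Gaussian kernel and operate on color or position features of pixels.

```python
import numpy as np

def mean_shift(points, bandwidth=1.0, max_iter=50, tol=1e-4):
    """Shift every point to the mode of its local density (flat kernel)."""
    points = np.asarray(points, dtype=np.float64)
    means = points.copy()                 # each point starts as its own mean
    for _ in range(max_iter):
        shifted = np.empty_like(means)
        for i, mean in enumerate(means):
            # Window: all original points within `bandwidth` of the mean
            in_window = np.linalg.norm(points - mean, axis=1) <= bandwidth
            shifted[i] = points[in_window].mean(axis=0)
        converged = np.linalg.norm(shifted - means, axis=1).max() < tol
        means = shifted
        if converged:
            break
    return means

# Hypothetical 2-D data with two well-separated groups
data = [[0.0, 0.0], [0.2, 0.1], [-0.1, 0.1],
        [5.0, 5.0], [5.1, 4.9], [4.9, 5.2]]
modes = mean_shift(data, bandwidth=1.0)
clusters = {tuple(np.round(m, 2)) for m in modes}
```

Points whose means converge to the same location are assigned to the same cluster, so the number of clusters does not have to be specified in advance.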

Image Reconstruction

Image reconstruction algorithms are used to convert images to different styles, fill in missing elements, enhance the resolution of lower-quality images, or generate new images based on similar inputs. Below we describe a popular image reconstruction algorithm, the autoencoder.

13. Autoencoders

Autoencoders are a type of artificial neural network used for unsupervised learning. They consist of an encoder and a decoder, where the encoder maps the input data to a lower-dimensional representation (also known as the latent space or bottleneck), and the decoder maps the lower-dimensional representation back to an output.

The main goal of autoencoders is to learn a compact representation of the data, which can then be used for various tasks, such as dimensionality reduction, anomaly detection, and generating new data samples. Autoencoders can be trained using various loss functions. An example is reconstruction loss, which measures the difference between the input and reconstructed output.
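To make the encoder, decoder, and reconstruction loss concrete, here is a deliberately tiny linear autoencoder trained with plain gradient descent in NumPy. The synthetic data, the 2-dimensional bottleneck, the learning rate, and the step count are all assumptions; real autoencoders use nonlinear layers and a deep learning framework.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical data: 200 samples in 8 dimensions that lie near a 2-D subspace
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 8))
x = latent @ mixing + 0.01 * rng.normal(size=(200, 8))

w_enc = 0.1 * rng.normal(size=(8, 2))   # encoder: 8 -> 2 (the bottleneck)
w_dec = 0.1 * rng.normal(size=(2, 8))   # decoder: 2 -> 8

def reconstruction_loss(x, w_enc, w_dec):
    """Mean squared difference between the input and its reconstruction."""
    return np.mean((x @ w_enc @ w_dec - x) ** 2)

initial_loss = reconstruction_loss(x, w_enc, w_dec)
learning_rate = 0.01
for _ in range(2000):
    z = x @ w_enc                        # encode
    error = z @ w_dec - x                # decode and compare with the input
    # Gradients of the squared error (constant factors folded into the rate)
    grad_dec = z.T @ error / len(x)
    grad_enc = x.T @ (error @ w_dec.T) / len(x)
    w_dec -= learning_rate * grad_dec
    w_enc -= learning_rate * grad_enc

final_loss = reconstruction_loss(x, w_enc, w_dec)
codes = x @ w_enc                        # compact 2-D representation
```

Because the data was generated from two underlying factors, a 2-dimensional bottleneck is enough to reconstruct it well, and the learned codes can serve as a reduced representation of each sample.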